Latency

In this tutorial, we are going to discuss latency, one of the important concepts in system design. Latency is another important metric related to the performance of a system.

Imagine a pipe with water flowing through it. The speed at which water flows through the pipe may vary: sometimes it flows very fast and sometimes very slowly. Latency in system design is similar: it describes how long it takes for data to get from one end of a system to the other.

In this tutorial, we will focus on the concept of latency and how it affects the performance of a system. We will also discuss measures that can be taken to reduce latency.

What is Latency?

Latency refers to the time it takes for a request to travel from its point of origin to its destination and for a response to come back. It is directly related to the performance of a system: lower latency means better performance. Let’s understand this from another perspective!

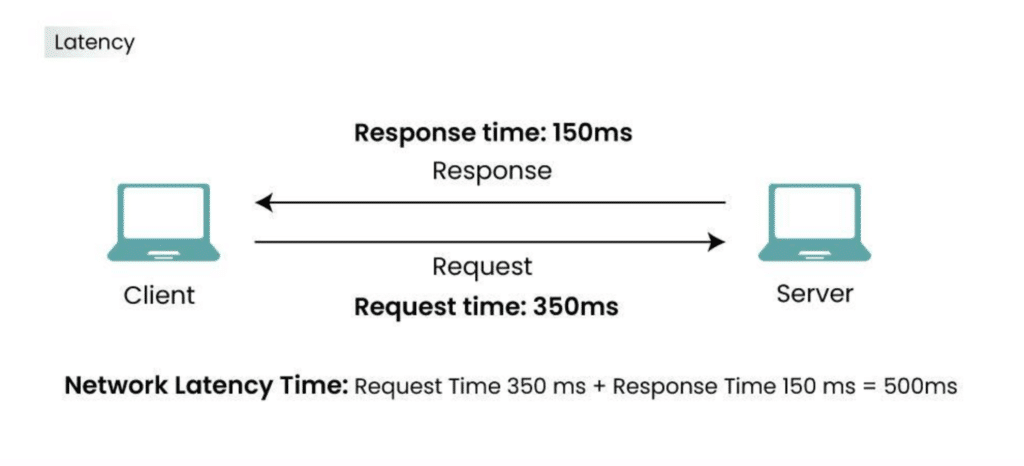

The browser (client) sends a request to the server. The server processes the request, retrieves the information and sends it back to the client. So, latency is the time interval between the client starting a request and the server delivering the result back to the client, i.e. the round-trip time between client and server.

Our ultimate goal would be to develop a latency-free system, but various bottlenecks prevent us from building such an ideal system. The core idea is simple: we can try to minimize latency. The lower the system latency, the less time it takes to process our requests.

How does latency work?

Latency is simply the time the client has to wait, after starting a request, to receive the result. Let’s take an example and look into how it works.

Suppose you are browsing an e-commerce website, find something you like, and want to add it to your cart. When you press the “Add to Cart” button, the following events happen:

- The instant the “Add to Cart” button is pressed, the latency clock starts and the browser initiates a request to the server.

- The server receives the request and processes it.

- The server responds to the request, the response reaches your browser, and the product is added to your cart.

You can start the stopwatch in the first step and stop the stopwatch in the last step. This difference in time would be the latency.
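The stopwatch steps above can be sketched in Python. This is a minimal illustration, not a real client: `add_to_cart` is a hypothetical function that simulates the network and server time with a 50 ms sleep, and `time.perf_counter()` plays the role of the stopwatch.

```python
import time


def add_to_cart(product_id: str) -> dict:
    """Hypothetical stand-in for the browser-to-server round trip.

    A real client would send an HTTP request here; the sleep simulates
    50 ms of network transfer plus server processing.
    """
    time.sleep(0.05)
    return {"product_id": product_id, "status": "added"}


def timed_call(func, *args):
    """Run func(*args) and return (result, elapsed time in milliseconds)."""
    start = time.perf_counter()  # step 1: button pressed, stopwatch starts
    result = func(*args)         # steps 2-3: request processed, response received
    latency_ms = (time.perf_counter() - start) * 1000  # stopwatch stops
    return result, latency_ms


if __name__ == "__main__":
    result, latency_ms = timed_call(add_to_cart, "sku-123")
    print(f"{result['status']} in {latency_ms:.1f} ms")
```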

Latency can be understood in two ways:

- Network Latency

- System Latency

What is Network Latency?

Network latency is a type of latency in system design. It refers to the time it takes for data to travel from one point in a network to another.

Take email as an example: think of network latency as the delay between hitting send on an email and the recipient actually receiving it. Like overall latency, it is measured in milliseconds, or even microseconds for real-time applications.

What is System Latency?

System latency refers to the overall time it takes for a request to go from its origin in the system to its destination and receive a response. Think of it as the “wait time” in a system.

In the “Add to Cart” example, the time between clicking the button and seeing the updated webpage is the system latency. It includes processing time on both client and server, network transfers, and rendering delays.

How does High Latency occur?

High latency occurs when data or requests are delayed somewhere between their source and destination, either in the network or inside the systems that handle them. Several factors contribute to high latency:

- Physical Distance: The physical distance between the source and destination can introduce latency. Electromagnetic signals, such as those used in network communication, have a finite speed, and as the distance increases, the time it takes for signals to travel also increases.

- Network Congestion: High levels of network traffic or congestion can lead to delays. When the network is overloaded with data, packets may experience queuing delays at routers and switches, resulting in increased latency.

- Router and Switch Processing: The processing time at network devices, such as routers and switches, can contribute to latency. These devices need time to inspect, forward, and route packets, and delays can occur if they are overloaded or have high utilization.

- Inefficient Network Infrastructure: Outdated equipment, overloaded cables, and inefficient routing protocols can contribute to slower data transfer.

- Wireless Interference: Signal interference in Wi-Fi networks can cause delays and packet loss, impacting latency.

- Slow Hardware: Processors, storage devices, and network cards with limited processing power can bottleneck performance and increase latency.

- Software Inefficiency: Unoptimized code, inefficient algorithms, and unnecessary background processes can slow down system responsiveness.

- Database Access: Complex database queries or overloaded databases can take longer to process and generate responses, affecting system latency.

- Resource Competition: When multiple applications or users share resources like CPU or memory, requests may have to wait for their turn, increasing overall latency.
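To put the physical-distance factor into numbers: signals in optical fiber travel at roughly two-thirds of the speed of light, about 200,000 km/s. The sketch below uses that approximation to compute the minimum round-trip time imposed by distance alone; real routes are longer than straight lines and add queuing and processing delays on top.

```python
# Approximate signal speed in optical fiber (about 2/3 of the speed of light).
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on RTT from propagation delay alone (there and back)."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


# Illustrative distance, roughly New York to London in a straight line.
print(f"{min_round_trip_ms(5_600):.0f} ms")  # 56 ms
```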

Reducing latency is crucial for applications that require real-time interactions, such as online gaming, video conferencing, and financial transactions. Minimizing the impact of these latency-inducing factors is achieved through optimizing network infrastructure, using efficient protocols, and employing techniques like caching and content delivery networks (CDNs).

How to measure Latency?

Measuring latency involves determining the time it takes for data to travel between two points in a network or system. There are various tools and methods available for measuring latency, and the choice of method depends on the specific requirements and context. Here are some common ways to measure latency:

Ping: The ping command is a widely used tool for measuring round-trip latency between two devices. In a command prompt or terminal, you can use the following syntax:

ping <destination>

The output typically includes information about the round-trip time (RTT) in milliseconds.

Traceroute/Tracepath: traceroute (on Unix-like systems, with tracepath as a common Linux alternative) or tracert (on Windows) allows you to trace the route that packets take from your device to a destination. It provides information about the latency at each hop along the path.

traceroute <destination>

MTR (traceroute with ping): MTR combines ping and traceroute. It repeatedly probes every hop along the path between two hosts and reports per-hop statistics such as packet loss percentage and average latency.

Network monitoring tools: Dedicated network monitoring tools like Wireshark, Nagios, or PRTG can capture and analyze network traffic, including latency. These tools often provide detailed insights into network performance and can help identify latency issues.

Application Performance Monitoring (APM) Tools: APM tools, such as OpsRamp, New Relic, AppDynamics, or Dynatrace, focus on monitoring the performance of applications. They can provide metrics on response times, transaction times, and other performance-related data.

Latency Benchmarking Tools: Tools like iperf or latencytest can be used to generate controlled network traffic and measure latency. These tools allow you to simulate specific network conditions and observe the resulting latency.

Web-Based Tools: There are online services and websites that offer latency testing for specific regions. These tools often use a network of servers located in different geographical locations to measure latency from various points around the world.

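Round-Trip Time (RTT) Measurements: In programming or scripting, you can use timestamping to measure the round-trip time for a packet. The sender puts the current time into the packet, the recipient echoes the packet back, and the sender subtracts that timestamp from the time the reply arrives. (If the recipient instead records the time on receipt, you get a one-way latency, which is only meaningful when both clocks are synchronized.)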
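A minimal sketch of timestamp-based RTT measurement, assuming UDP over the loopback interface: a background thread acts as the recipient and echoes the datagram, and the sender computes the RTT from the timestamp carried back in the echoed packet. On localhost the result will be a fraction of a millisecond; measuring a real network path would need an echo service running on the remote host.

```python
import socket
import threading
import time


def echo_once(sock: socket.socket) -> None:
    """Recipient: send the received datagram straight back to its sender."""
    data, addr = sock.recvfrom(1024)
    sock.sendto(data, addr)


def measure_rtt_ms(host: str = "127.0.0.1") -> float:
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind((host, 0))  # port 0 lets the OS choose a free port
    threading.Thread(target=echo_once, args=(server,), daemon=True).start()

    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client.settimeout(2.0)  # give up if no echo arrives
    try:
        # The sender stamps the packet with its send time.
        client.sendto(repr(time.perf_counter()).encode(), server.getsockname())
        reply, _ = client.recvfrom(1024)
        received_at = time.perf_counter()
        # RTT = arrival time minus the timestamp carried back in the echo.
        return (received_at - float(reply.decode())) * 1000
    finally:
        client.close()
        server.close()


if __name__ == "__main__":
    print(f"RTT: {measure_rtt_ms():.3f} ms")
```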

When measuring latency, it’s important to consider the type of communication being assessed (e.g., TCP or UDP), the network path, and the specific requirements of the application or service. Additionally, multiple measurements over time can provide a more accurate picture of latency variability.
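To see variability, it helps to summarize many samples with percentiles instead of a single average, since a few slow requests can hide behind a good mean. The helper below is one way to do this with Python's standard `statistics` module (Python 3.8+); the sample values are made up for illustration, and `statistics.quantiles` needs at least two samples.

```python
import statistics


def summarize(samples_ms):
    """Summarize repeated latency measurements (needs at least two samples)."""
    # 99 cut points: cuts[k - 1] is the k-th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "min": min(samples_ms),
        "mean": statistics.fmean(samples_ms),
        "p50": statistics.median(samples_ms),
        "p95": cuts[94],
        "max": max(samples_ms),
    }


if __name__ == "__main__":
    # Illustrative values, not real measurements: one slow outlier.
    samples = [12.1, 11.8, 12.4, 13.0, 11.9, 95.2, 12.2, 12.6, 12.0, 12.3]
    print(summarize(samples))
```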

Latency optimization

Optimizing latency is crucial for improving the responsiveness of applications and services, especially in real-time or interactive scenarios. Here are several strategies for latency optimization:

Content Delivery Networks (CDNs): A CDN stores copies of resources in multiple locations worldwide, which reduces request and response travel time. Instead of going all the way back to the origin server, a request can be served from cached resources located closer to the client.

Caching: Implement caching mechanisms to store frequently accessed data closer to the point of use. This reduces the need to retrieve data from the original source, speeding up response times.
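As a minimal in-process caching sketch, Python's `functools.lru_cache` can keep recent results in memory. Here `fetch_from_origin` is a hypothetical slow lookup simulated with a sleep; the first call for an id pays that cost and repeated calls are answered from the cache.

```python
import time
from functools import lru_cache


def fetch_from_origin(product_id: int) -> dict:
    """Hypothetical slow lookup (e.g. a remote database), simulated with a sleep."""
    time.sleep(0.1)
    return {"id": product_id, "name": f"Product {product_id}"}


@lru_cache(maxsize=1024)
def get_product(product_id: int) -> dict:
    # The first call per id goes to the origin; repeats are served from memory.
    # Callers must not mutate the returned dict: the cache shares one object.
    return fetch_from_origin(product_id)


if __name__ == "__main__":
    for attempt in ("cold", "warm"):
        start = time.perf_counter()
        get_product(7)
        print(attempt, f"{(time.perf_counter() - start) * 1000:.2f} ms")
    print(get_product.cache_info())
```

`lru_cache` has no expiry, so it suits data that rarely changes; a cache with a time-to-live (TTL) is a common alternative when data can go stale.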

Optimized Protocols: Choose communication protocols that are optimized for low latency. For example, consider using UDP for real-time applications, as it has lower overhead compared to TCP.

HTTP/2: Using HTTP/2 can reduce latency. It multiplexes multiple requests and responses over a single connection, allowing parallel transfers and reducing the number of round trips between the client and the server.

Fewer external HTTP requests: Calls to third-party services add to latency, because the application has to wait for those services to respond. Reducing the number of external HTTP requests therefore lowers system latency.

Browser caching: Browser caching can also help to reduce the latency by caching specific resources locally to decrease the number of requests made to the server.

Disk I/O: Frequent disk writes are slow. Instead of writing to disk on every change, we can use write-back caches or in-memory databases, combine writes where possible, or use fast storage systems such as SSDs.
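The "combine writes" idea can be sketched as a small buffered writer: records collect in memory and are appended to the file in one write per batch. `BatchedWriter` and its `batch_size` are hypothetical names for illustration, and the trade-off is durability: anything still buffered is lost if the process crashes before `flush()`.

```python
import os
import tempfile


class BatchedWriter:
    """Buffers records in memory and appends them to a file in batches."""

    def __init__(self, path: str, batch_size: int = 100):
        self.path = path
        self.batch_size = batch_size
        self.buffer = []
        self.write_calls = 0  # how many times we actually hit the file

    def write(self, record: str) -> None:
        self.buffer.append(record)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Write all buffered records with a single file write."""
        if not self.buffer:
            return
        with open(self.path, "a", encoding="utf-8") as f:
            f.write("".join(record + "\n" for record in self.buffer))
        self.buffer.clear()
        self.write_calls += 1


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        writer = BatchedWriter(os.path.join(tmp, "events.log"), batch_size=10)
        for i in range(25):
            writer.write(f"event {i}")
        writer.flush()  # persist the final partial batch
        print(writer.write_calls, "file writes for 25 records")  # 3
```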

Connection Pooling: Use connection pooling to reuse existing connections instead of establishing new ones for each request. This reduces the overhead of creating and closing connections, improving response times.
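A minimal connection pool sketch built on the standard library's thread-safe `queue.Queue`. Connections are created once up front and are handed out and returned through a context manager. An in-memory `sqlite3` connection stands in for a real database connection, where the setup work (network and authentication handshakes) is what the pool saves; production code would normally use the pool provided by its database driver or framework.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Minimal fixed-size pool: connections are created once and reused."""

    def __init__(self, factory, size: int = 5):
        self._idle = queue.Queue(maxsize=size)  # thread-safe FIFO of idle connections
        for _ in range(size):
            self._idle.put(factory())  # pay the setup cost once, up front

    @contextmanager
    def connection(self, timeout: float = 5.0):
        # Wait for an idle connection instead of opening a new one.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return it to the pool even if the caller failed


def make_conn():
    # Stand-in for a real (expensive) database connection.
    return sqlite3.connect(":memory:", check_same_thread=False)


if __name__ == "__main__":
    pool = ConnectionPool(make_conn, size=2)
    for _ in range(4):
        with pool.connection() as conn:
            print(id(conn), conn.execute("SELECT 1").fetchone())
```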

Load Balancing: Distribute incoming traffic across multiple servers using load balancers. This not only enhances scalability but also ensures that no single server is overwhelmed, reducing latency.
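Round-robin is one of the simplest load-balancing strategies: each new request goes to the next server in the list. The sketch below uses hypothetical backend names; real load balancers also track server health and may use other strategies, such as sending traffic to the server with the fewest active connections.

```python
import itertools


class RoundRobinBalancer:
    """Hands out backend servers in turn so no single one receives every request."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        self._next_server = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._next_server)


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])  # hypothetical hosts
    print([lb.pick() for _ in range(5)])  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```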

Parallel Processing: Leverage parallel processing to perform multiple tasks simultaneously. This is particularly beneficial in scenarios where tasks can be executed independently, such as making parallel database queries.
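A sketch of running independent queries in parallel with `concurrent.futures.ThreadPoolExecutor`. `query` is a hypothetical stand-in whose sleep simulates waiting on a database; run sequentially, three 0.1 s queries take about 0.3 s, while in parallel the total is close to that of the slowest single query.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def query(name: str, delay_s: float) -> str:
    """Hypothetical independent query; the sleep simulates waiting on I/O."""
    time.sleep(delay_s)
    return f"{name}: done"


def run_sequential(tasks):
    return [query(name, delay) for name, delay in tasks]


def run_parallel(tasks):
    # One worker per task; results are collected in submission order.
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = [pool.submit(query, name, delay) for name, delay in tasks]
        return [future.result() for future in futures]


if __name__ == "__main__":
    tasks = [("users", 0.1), ("orders", 0.1), ("reviews", 0.1)]
    for runner in (run_sequential, run_parallel):
        start = time.perf_counter()
        runner(tasks)
        print(runner.__name__, f"{time.perf_counter() - start:.2f} s")
```

Threads work well here because the tasks spend their time waiting on I/O. CPU-bound work in Python is usually better served by `ProcessPoolExecutor`, since in standard CPython the global interpreter lock keeps threads from running Python code in parallel.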

Asynchronous Processing: Implement asynchronous processing for tasks that do not require immediate responses. This allows the system to continue processing other tasks while waiting for non-blocking operations to complete.
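A minimal `asyncio` sketch of this idea: the order handler schedules a slow confirmation email as a background task and returns immediately instead of waiting for it. `place_order` and `send_confirmation_email` are hypothetical names, and the email call is simulated with a sleep.

```python
import asyncio

sent_emails = []


async def send_confirmation_email(order_id: int) -> None:
    """Hypothetical slow side task (e.g. calling an email service)."""
    await asyncio.sleep(0.2)
    sent_emails.append(order_id)


async def place_order(order_id: int, background: set) -> str:
    # Schedule the email without awaiting it, so the response is not delayed.
    task = asyncio.create_task(send_confirmation_email(order_id))
    background.add(task)  # keep a reference so the task is not garbage-collected
    task.add_done_callback(background.discard)
    return f"order {order_id} accepted"


async def main() -> None:
    background: set = set()
    loop = asyncio.get_running_loop()
    start = loop.time()
    response = await place_order(42, background)
    print(f"{response} after {(loop.time() - start) * 1000:.0f} ms")
    await asyncio.gather(*background)  # let background work finish before exit
    print("emails sent:", sent_emails)


if __name__ == "__main__":
    asyncio.run(main())
```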

Compression: Compress data before transmission to reduce the amount of data sent over the network. This is especially useful for large payloads, minimizing transmission times.
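A small illustration with Python's `zlib` module: a repetitive JSON payload shrinks considerably when compressed, and decompression restores it exactly. In HTTP this is usually handled by the server and client through the `Content-Encoding` header (for example gzip); the payload here is made up for the example. Compression costs CPU time, so it pays off mainly for larger payloads.

```python
import json
import zlib

# Made-up, fairly repetitive JSON payload of the kind an API might return.
payload = json.dumps(
    [{"id": i, "status": "in_stock", "warehouse": "central"} for i in range(500)]
).encode("utf-8")

compressed = zlib.compress(payload, level=6)
print(len(payload), "->", len(compressed), "bytes")

# The receiver reverses the step; compression is lossless.
restored = zlib.decompress(compressed)
print(restored == payload)  # True
```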

Minimize Round-Trips: Reduce the number of round-trips between client and server. This can be achieved by optimizing API design, combining multiple requests into a single one (batching), and reducing unnecessary back-and-forth communication.
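The effect of batching can be sketched by charging a fixed simulated round-trip time per call. `fake_fetch` is a hypothetical API and the 20 ms RTT is an assumption; fetching ten items one by one costs ten round trips, while the batched version costs one.

```python
import time

SIMULATED_RTT_S = 0.02  # assumption: each call costs one 20 ms round trip


def fake_fetch(ids):
    """Hypothetical API call: one round trip per call, whatever the batch size."""
    time.sleep(SIMULATED_RTT_S)
    return {i: f"item-{i}" for i in ids}


def fetch_one_by_one(ids):
    result = {}
    for i in ids:  # N calls -> N round trips
        result.update(fake_fetch([i]))
    return result


def fetch_batched(ids):
    return fake_fetch(ids)  # 1 call -> 1 round trip


if __name__ == "__main__":
    ids = list(range(10))
    for fetch in (fetch_one_by_one, fetch_batched):
        start = time.perf_counter()
        fetch(ids)
        print(fetch.__name__, f"{(time.perf_counter() - start) * 1000:.0f} ms")
```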

Optimized Database Queries: Optimize database queries to retrieve only the necessary data. Indexing, proper query design, and database performance tuning can significantly reduce database-related latency.
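A sketch of the effect of an index using Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN`. The `orders` table and its data are made up. Before the index the plan reports a full table scan; afterwards it searches using the index. The exact wording of the plan text varies between SQLite versions. The query also selects only the columns it needs rather than `SELECT *`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

# Select only the columns the caller needs, not SELECT *.
query = "SELECT id, total FROM orders WHERE customer_id = ?"


def plan(sql):
    """Return the detail text of each EXPLAIN QUERY PLAN row."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, (42,))]


before = plan(query)  # full table scan, e.g. 'SCAN orders'
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)   # e.g. 'SEARCH orders USING INDEX idx_orders_customer (customer_id=?)'
print(before, after, sep="\n")
```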

Monitoring and Profiling: Regularly monitor and profile your application to identify performance bottlenecks. This can involve using profiling tools and analyzing metrics to pinpoint areas that need optimization.
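One way to find where request time goes is Python's built-in profiler. The sketch below profiles a hypothetical `handle_request` in which `slow_lookup` simulates a bottleneck with a sleep; sorting by cumulative time puts it near the top of the report.

```python
import cProfile
import io
import pstats
import time


def slow_lookup():
    time.sleep(0.05)  # hypothetical bottleneck, e.g. an unindexed query


def render():
    return sum(range(10_000))  # cheap work by comparison


def handle_request():
    slow_lookup()
    render()


profiler = cProfile.Profile()
profiler.enable()
handle_request()
profiler.disable()

out = io.StringIO()
pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(5)
report = out.getvalue()
print(report)
```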

Reduce Payload Size: Minimize the size of data transmitted over the network by using efficient data formats (e.g., JSON instead of XML) and removing unnecessary information.
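A sketch of trimming a response to the fields a client actually needs. The product record and the `NEEDED_FIELDS` list are hypothetical; the compact `separators` setting additionally drops the spaces that `json.dumps` inserts by default.

```python
import json

# Hypothetical full product record as stored on the server.
product = {
    "id": 101,
    "name": "Desk Lamp",
    "price": 24.99,
    "description": "A long marketing description. " * 20,
    "internal_notes": "not needed by the client",
    "audit_log": [{"event": "updated", "by": "admin"}] * 50,
}

NEEDED_FIELDS = ("id", "name", "price")  # hypothetical: what the list view shows

full = json.dumps(product).encode("utf-8")
trimmed = json.dumps(
    {key: product[key] for key in NEEDED_FIELDS}, separators=(",", ":")
).encode("utf-8")
print(len(full), "->", len(trimmed), "bytes")
```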

Remember that the specific strategies you choose will depend on the nature of your application, the architecture, and the specific use cases. Regular testing and performance monitoring are essential to evaluate the impact of optimizations and identify areas for further improvement.

That’s all about latency in system design. If you have any queries or feedback, please write to us at contact@waytoeasylearn.com. Enjoy learning, Enjoy system design..!!