Caching

In this tutorial, we are going to discuss about caching, another essential system design concept. Have you ever experienced a website taking longer time to load on the first visit but significantly faster on subsequent visits? This is related to caching: An idea to improve the performance of distributed systems. In this tutorial, we will discuss the caching concept, how it works, and what are the important types of caching.

Caching is an essential technique used in software engineering to improve system performance and user experience. It works by temporarily storing frequently accessed data in a cache, which is faster to access than the original source of the data.

As a software engineer, it’s essential to have a solid understanding of caching and how it works in different types of systems. In this blog, we’ll cover everything you need to know about caching, from its definition and importance to the different types of caching and best practices for implementation.

What is Caching?

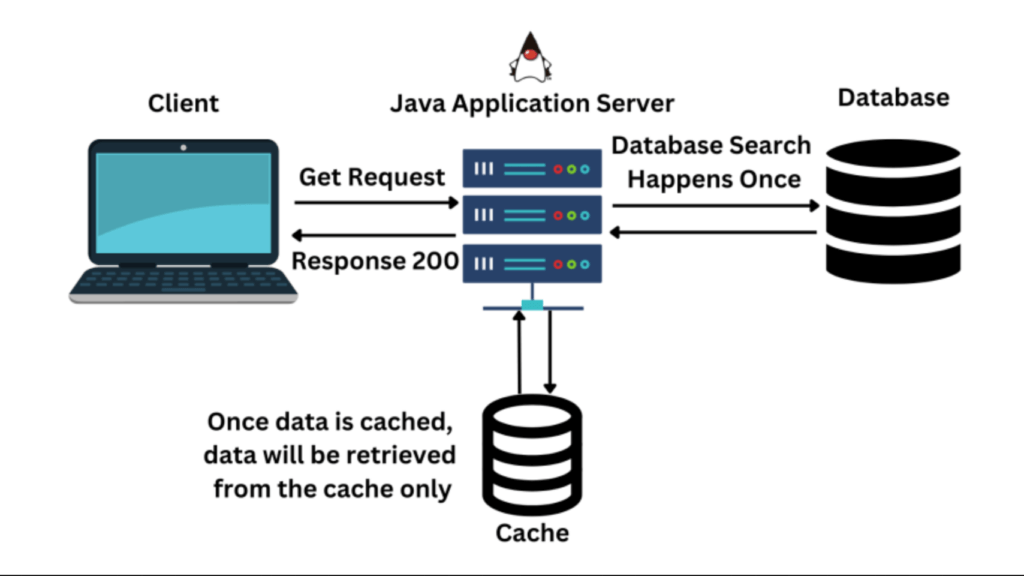

Caching is a process of storing data in a temporary storage location called cache to improve the speed of data access. Now the question is: What is cache?

The cache is a high-speed storage layer that sits between the application and the original source of the data, such as a database, a file system, or a remote web service.

When data is requested by the application, it is first checked in the cache. If the data is found in the cache, it is returned to the application. If the data is not found in the cache, it is retrieved from its original source, stored in the cache for future use, and returned to the application.

Caching can be used for various types of data, such as web pages, database queries, API responses, images, and videos. The goal of caching is to reduce the number of times data needs to be fetched from its original source, which can result in faster processing and reduced latency.

Caching can be implemented in various ways, including in-memory caching, disk caching, database caching, and CDN caching.

In-memory caching stores data in the main memory of the computer, which is faster to access than disk storage. Disk caching stores data on the hard disk, which is slower than main memory but faster than retrieving data from a remote source. Database caching stores frequently accessed data in the database itself, reducing the need to access external storage. CDN caching stores data on a distributed network of servers, reducing the latency of accessing data from remote locations.

How Caching Works?

Now let’s understand how caching works in practice when a user requests some data.

1. The system will first check the cache memory to see if the requested data is already there. If data is present (cache hit) ), the system will return the data directly from the cache. As mentioned earlier, cache access is much faster compared to the main database access.

2. If requested data is not present (cache miss) in the cache, the system will retrieve the data from the original database. As discussed earlier, this process is slower compared to the cache access.

3. Now, after fetching data from the database, the system will store the fetched data in the cache for future use, so that subsequent requests for the same data can be served more quickly.

The above idea is one of the commonly used caching strategies: cache-aside strategy. . Here cache and database are independent and it is the responsibility of the application code to manage operations on cache and database. This is useful when we are dealing with read-heavy systems.

There can be other types of caching strategies like read-through strategy, write-around strategy, write-back strategy and write-through strategy. We will discuss these concepts later in this tutorial.

Note: Cache hits and misses are critical metrics for measuring cache performance.

- A high cache hit rate means the cache is effectively storing and retrieving the data.

- On the other hand, a high cache miss rate implies that the cache is not used effectively, which can result in slower performance. In this situation, we can think to adjust cache size or use a different replacement policy, or some other techniques to minimize cache miss.

Why is Caching Important?

Caching plays a critical role in improving system performance and user experience in software engineering. By storing frequently accessed data in a cache, applications can reduce the response time and latency of operations, resulting in faster and more efficient processing. Here are some reasons why caching is important:

1. Improved system performance: Caching can significantly improve the performance of an application by reducing the number of times data needs to be fetched from its original source. Since cached data can be retrieved faster than from the original source, this results in a significant reduction in processing time, which leads to a more responsive application.

2. Reduced network load: Caching can also reduce network load by minimizing the amount of data that needs to be transmitted over the network. Since cached data is stored locally, there is no need to fetch data from the original source, reducing the amount of data that needs to be transferred over the network.

3. Increased scalability: Caching can improve the scalability of an application by reducing the load on the original source. By storing frequently accessed data in a cache, the original source is less likely to be overwhelmed with requests, making it more scalable.

4. Better user experience: Faster response times and reduced latency can lead to a better user experience. Applications that load quickly and respond to user requests in a timely manner are more likely to be used and preferred by users.

Types of Caching

Caching can be implemented in various ways, depending on the specific use case and the type of data being cached. Here are some of the most common types of caching:

1. In-memory caching

In-memory caching stores data in the main memory of the computer, which is faster to access than disk storage. In-memory caching is useful for frequently accessed data that can fit into the available memory. This type of caching is commonly used for caching API responses, session data, and web page fragments. To implement in-memory caching, software engineers can use various techniques, including using a cache library like Memcached or Redis, or implementing custom caching logic within the application code.

2. Disk caching

Disk caching stores data on the hard disk, which is slower than main memory but faster than retrieving data from a remote source. Disk caching is useful for data that is too large to fit in memory or for data that needs to persist between application restarts. This type of caching is commonly used for caching database queries and file system data.

3. Database caching

Database caching stores frequently accessed data in the database itself, reducing the need to access external storage. This type of caching is useful for data that is stored in a database and frequently accessed by multiple users. Database caching can be implemented using a variety of techniques, including database query caching and result set caching.

4. CDN caching

CDN caching stores data on a distributed network of servers, reducing the latency of accessing data from remote locations. This type of caching is useful for data that is accessed from multiple locations around the world, such as images, videos, and other static assets. CDN caching is commonly used for content delivery networks and large-scale web applications.

5. DNS caching

DNS cache is a type of cache used in the Domain Name System (DNS) to store the results of DNS queries for a period of time. When a user requests to access a website, their computer sends a DNS query to a DNS server to resolve the website’s domain name to an IP address. The DNS server responds with the IP address, and the user’s computer can then access the website using the IP address.

DNS caching improves the performance of the DNS system by reducing the number of requests made to DNS servers. When a DNS server receives a request for a domain name, it checks its local cache to see if it has the IP address for that domain name. If the IP address is in the cache, the DNS server can immediately respond with the IP address without having to query other servers. This can significantly reduce the response time for DNS queries and improve the overall performance of the system.

Cache Replacement Policies

When implementing caching, it’s important to have a cache replacement policy to determine which items in the cache should be removed when the cache becomes full. Here are some of the most common cache replacement policies:

- Least Recently Used (LRU): LRU is a cache replacement policy that removes the least recently used item from the cache when it becomes full. This policy assumes that items that have been accessed more recently are more likely to be accessed again in the future.

- Least Frequently Used (LFU): LFU is a cache replacement policy that removes the least frequently used item from the cache when it becomes full. This policy assumes that items that have been accessed more frequently are more likely to be accessed again in the future.

- First In, First Out (FIFO): FIFO is a cache replacement policy that removes the oldest item from the cache when it becomes full. This policy assumes that the oldest items in the cache are the least likely to be accessed again in the future.

- Random Replacement: Random replacement is a cache replacement policy that removes a random item from the cache when it becomes full. This policy doesn’t make any assumptions about the likelihood of future access and can be useful when the access pattern is unpredictable.

Comparison of different replacement policies

Each cache replacement policy has its advantages and disadvantages, and the best policy to use depends on the specific use case. LRU and LFU are generally more effective than FIFO and random replacement since they take into account the access pattern of the cache.

However, LRU and LFU can be more expensive to implement since they require maintaining additional data structures to track access patterns. FIFO and random replacement are simpler to implement but may not be as effective in optimizing cache performance. Overall, the cache replacement policy used should be chosen carefully to balance the trade-off between performance and complexity.

Cache Invalidation Strategies

Cache invalidation is the process of removing data from the cache when it is no longer valid. Invalidating the cache is essential to ensure that the data stored in the cache is accurate and up-to-date. Here are some of the most common cache invalidation strategies:

1. Write-through cache

Under this scheme, data is written into the cache and the corresponding database simultaneously. The cached data allows for fast retrieval and, since the same data gets written in the permanent storage, we will have complete data consistency between the cache and the storage. Also, this scheme ensures that nothing will get lost in case of a crash, power failure, or other system disruptions.

Although, write-through minimizes the risk of data loss, since every write operation must be done twice before returning success to the client, this scheme has the disadvantage of higher latency for write operations.

2. Write-around cache

This technique is similar to write-through cache, but data is written directly to permanent storage, bypassing the cache. This can reduce the cache being flooded with write operations that will not subsequently be re-read, but has the disadvantage that a read request for recently written data will create a “cache miss” and must be read from slower back-end storage and experience higher latency.

3. Write-back cache

Under this scheme, data is written to cache alone, and completion is immediately confirmed to the client. The write to the permanent storage is done based on certain conditions, for example, when the system needs some free space. This results in low-latency and high-throughput for write-intensive applications; however, this speed comes with the risk of data loss in case of a crash or other adverse event because the only copy of the written data is in the cache.

4. Write-behind cache

It is quite similar to write-back cache. In this scheme, data is written to the cache and acknowledged to the application immediately, but it is not immediately written to the permanent storage. Instead, the write operation is deferred, and the data is eventually written to the permanent storage at a later time. The main difference between write-back cache and write-behind cache is when the data is written to the permanent storage. In write-back caching, data is only written to the permanent storage when it is necessary for the cache to free up space, while in write-behind caching, data is written to the permanent storage at specified intervals.

Overall, the cache invalidation strategy used should be chosen carefully to balance the trade-off between performance and data accuracy. By understanding the different cache invalidation strategies available, software engineers can select the appropriate strategy to optimize cache performance and reduce latency while ensuring that the data stored in the cache is accurate and up-to-date.

Cache Invalidations Methods

Here are the famous cache invalidation methods:

- Purge: The purge method removes cached content for a specific object, URL, or a set of URLs. It’s typically used when there is an update or change to the content and the cached version is no longer valid. When a purge request is received, the cached content is immediately removed, and the next request for the content will be served directly from the origin server.

- Refresh: Fetches requested content from the origin server, even if cached content is available. When a refresh request is received, the cached content is updated with the latest version from the origin server, ensuring that the content is up-to-date. Unlike a purge, a refresh request doesn’t remove the existing cached content; instead, it updates it with the latest version.

- Ban: The ban method invalidates cached content based on specific criteria, such as a URL pattern or header. When a ban request is received, any cached content that matches the specified criteria is immediately removed, and subsequent requests for the content will be served directly from the origin server.

- Time-to-live (TTL) expiration: This method involves setting a time-to-live value for cached content, after which the content is considered stale and must be refreshed. When a request is received for the content, the cache checks the time-to-live value and serves the cached content only if the value hasn’t expired. If the value has expired, the cache fetches the latest version of the content from the origin server and caches it.

- Stale-while-revalidate: This method is used in web browsers and CDNs to serve stale content from the cache while the content is being updated in the background. When a request is received for a piece of content, the cached version is immediately served to the user, and an asynchronous request is made to the origin server to fetch the latest version of the content. Once the latest version is available, the cached version is updated. This method ensures that the user is always served content quickly, even if the cached version is slightly outdated.

Cache Performance Metrics

When implementing caching, it’s important to measure the performance of the cache to ensure that it is effective in reducing latency and improving system performance. Here are some of the most common cache performance metrics:

- Hit rate: The hit rate is the percentage of requests that are served by the cache without accessing the original source. A high hit rate indicates that the cache is effective in reducing the number of requests to the original source, while a low hit rate indicates that the cache may not be providing significant performance benefits.

- Miss rate: The miss rate is the percentage of requests that are not served by the cache and need to be fetched from the original source. A high miss rate indicates that the cache may not be caching the right data or that the cache size may not be large enough to store all frequently accessed data.

- Cache size: The cache size is the amount of memory or storage allocated for the cache. The cache size can impact the hit rate and miss rate of the cache. A larger cache size can result in a higher hit rate, but it may also increase the cost and complexity of the caching solution.

- Cache latency: The cache latency is the time it takes to access data from the cache. A lower cache latency indicates that the cache is faster and more effective in reducing latency and improving system performance. The cache latency can be impacted by the caching technology used, the cache size, and the cache replacement and invalidation policies.

In summary, caching is a useful technique for improving performance, reducing cost, and increasing the scalability of a system by storing frequently accessed data in fast storage called cache!

That’s all about caching in system design. If you have any queries or feedback, please write us at contact@waytoeasylearn.com. Enjoy learning, Enjoy system design..!!