Normalization and Denormalization

In this tutorial, we are going to discuss about Normalization and Denormalization in SQL. Normalization and denormalization are two opposing strategies for designing relational database schemas.

SQL Normalization

Normalization is the process of organizing data in a database to reduce redundancy and dependency. This is typically achieved by breaking down a large table into smaller, related tables and defining relationships between them using foreign keys. The main goals of normalization are to minimize data duplication, reduce the chance of anomalies (such as update anomalies, insert anomalies, and delete anomalies), and improve data integrity.

Normalization is typically divided into several normal forms (1NF, 2NF, 3NF, BCNF, etc.), each representing a higher level of normalization. Each normal form imposes certain rules and guidelines regarding the structure of the database tables.

Characteristics

- Reduces Redundancy: Avoids duplication of data.

- Improves Data Integrity: Ensures data accuracy and consistency.

- Database Design: Involves creating tables and establishing relationships through primary and foreign keys.

Example: Customer Orders Database

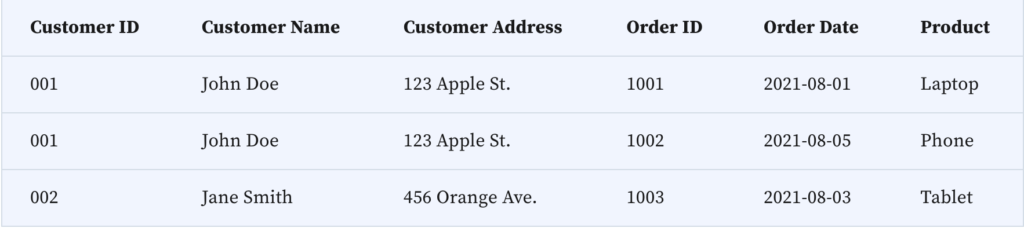

Original Table (Before Normalization)

Imagine a single table that stores all customer orders:

This table has redundancy (notice how customer details are repeated) and is not normalized.

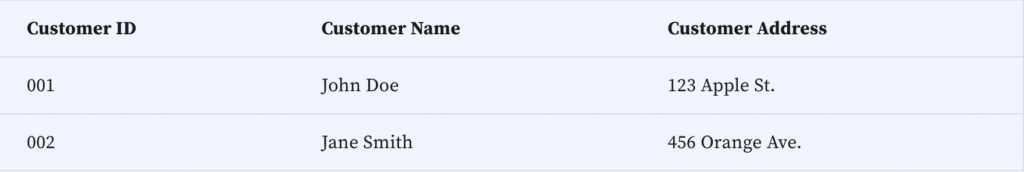

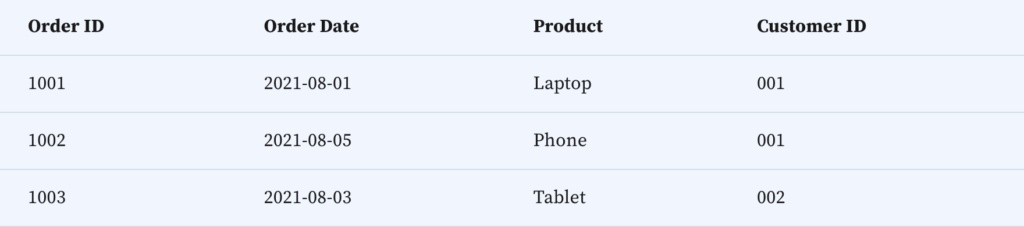

After Normalization

To normalize this, we would split it into two or more tables to reduce redundancy.

Customers Table (1NF, 2NF, 3NF)

Orders Table (1NF, 2NF, 3NF)

In the normalized structure, we’ve eliminated redundancy (each customer’s details are listed only once) and established a relationship between the two tables via CustomerID.

Levels (Normal Forms)

- 1NF (First Normal Form): Data is stored in atomic form with no repeating groups.

- 2NF (Second Normal Form): Meets 1NF and has no partial dependency on any candidate key.

- 3NF (Third Normal Form): Meets 2NF and has no transitive dependency.

Use Cases

- Ideal for complex systems where data integrity is critical, like financial or enterprise applications.

SQL Denormalization

Denormalization, on the other hand, is the process of intentionally adding redundancy to a database schema in order to improve performance or simplify queries. By storing redundant data, denormalization aims to reduce the need for joins and improve query performance. This can be particularly useful in scenarios where certain queries are frequently executed and performance is a critical concern. However, denormalization can also lead to data inconsistency and increase the complexity of data maintenance, as redundant data must be carefully managed to ensure consistency.

Characteristics

- Increases Redundancy: May involve some data duplication.

- Improves Query Performance: Reduces the complexity of queries by reducing the number of joins.

- Data Retrieval: Optimized for read-heavy operations.

Denormalization Example

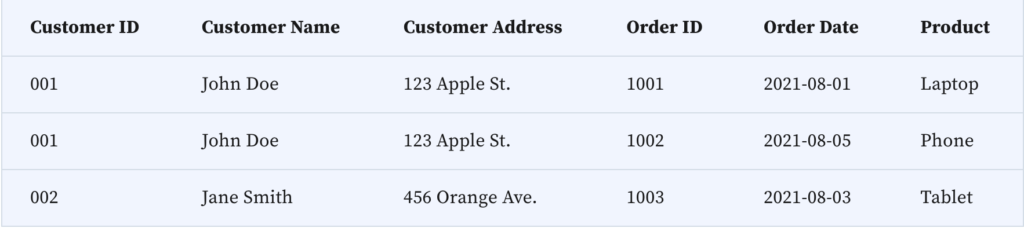

Denormalization would involve combining these tables back into a single table to optimize read performance. Taking the above table:

Denormalized Orders Table

Here, we’re back to the original structure. The benefit of this denormalized table is that it can make queries faster since all the information is in one place, reducing the need for JOIN operations. However, the downside is the redundancy of customer information, which can take up more space and potentially lead to inconsistencies if not managed properly.

When to Use

- In read-heavy database systems where query performance is a priority.

- In systems where data changes are infrequent and a slightly less normalized structure doesn’t compromise data integrity.

Key Differences

- Purpose:

- Normalization aims to minimize data redundancy and improve data integrity.

- Denormalization aims to improve query performance.

- Data Redundancy:

- Normalization reduces redundancy.

- Denormalization may introduce redundancy.

- Performance:

- Normalization can lead to a larger number of tables and more complex queries, potentially affecting read performance.

- Denormalization can improve read performance but may affect write performance due to data redundancy.

- Complexity:

- Normalization increases the complexity of the write operations.

- Denormalization simplifies read operations but can make write operations more complex.

Conclusion

- Normalization is about reducing redundancy and improving data integrity but can lead to more complex queries.

- Denormalization simplifies queries but at the cost of increased data redundancy and potential maintenance challenges.

The choice between the two depends on the specific requirements of your database system, considering factors like the frequency of read vs. write operations, and the importance of query performance vs. data integrity.

In practice, the choice between normalization and denormalization depends on the specific requirements and characteristics of the application, including factors such as the nature of the data, the expected workload, performance considerations, and the trade-offs between data consistency and query performance.

That’s all about the Normalization and Denormalization in SQL. If you have any queries or feedback, please write us email at contact@waytoeasylearn.com. Enjoy learning, Enjoy system design..!!