Cache Read Strategies

In this tutorial, we are going to discuss cache read strategies. Cache read strategies are techniques that determine how data is looked up in a cache and what happens when the requested data is not there. These strategies aim to optimize performance by serving as many reads as possible from the cache instead of the slower underlying data store. Here are two common cache read strategies:

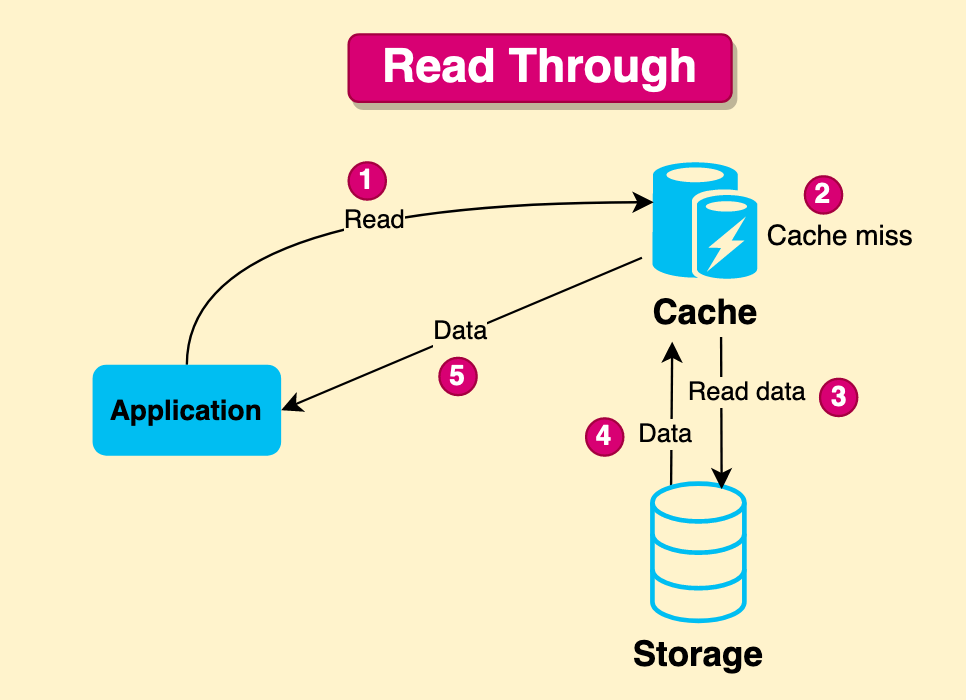

1. Read-through cache

A read-through cache strategy is a caching mechanism where the cache itself is responsible for retrieving the data from the underlying data store when a cache miss occurs. In this strategy, the application requests data from the cache instead of the data store directly. If the requested data is not found in the cache (cache miss), the cache retrieves the data from the data store, updates the cache with the retrieved data, and returns the data to the application.

This approach keeps the loading logic in one place: every entry enters the cache through the same path, so the application and the cache cannot disagree about how data is fetched. Note that this alone does not keep cached entries in sync with later changes in the data store; that still depends on expiration or invalidation. It also simplifies the application code since the application doesn’t need to handle cache misses and data retrieval logic. The read-through cache strategy can significantly improve performance in scenarios where data retrieval from the data store is expensive, and cache misses are relatively infrequent.

Here’s how it works:

- Data Retrieval: When the application requests data from the cache, the cache first checks if the data is already present in its storage.

- Cache Miss: If the requested data is not found in the cache (cache miss), the cache automatically fetches the data from the underlying data source.

- Data Population: After retrieving the data from the data source, the cache stores it in its storage for future requests.

- Data Return: Finally, the cache returns the requested data to the application.

With read-through caching, the application is typically unaware of whether the data comes from the cache or the underlying data source. The caching mechanism abstracts away the data retrieval process, providing a seamless experience for the application.
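To make the read-through flow concrete, here is a minimal Python sketch. It is an illustration under assumptions, not a reference implementation: the `ReadThroughCache` class, the `load_from_database` function, and the in-memory `DATABASE` dictionary are all hypothetical names standing in for a real cache and data store.

```python
from typing import Callable, Dict, List


class ReadThroughCache:
    """Hypothetical in-memory read-through cache (illustration only)."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self._loader = loader            # how the cache reaches the data store
        self._store: Dict[str, str] = {}  # the cache's own storage

    def get(self, key: str) -> str:
        if key in self._store:           # cache hit: serve from the cache
            return self._store[key]
        value = self._loader(key)        # cache miss: the cache loads the data
        self._store[key] = value         # populate the cache for future requests
        return value                     # return the data to the application


# Stand-in for a slow data store (hypothetical data).
DATABASE: Dict[str, str] = {"user:1": "Alice", "user:2": "Bob"}
db_reads: List[str] = []                 # records every read that hits the store


def load_from_database(key: str) -> str:
    db_reads.append(key)
    return DATABASE[key]


cache = ReadThroughCache(load_from_database)
first = cache.get("user:1")   # miss: loaded from DATABASE by the cache
second = cache.get("user:1")  # hit: served from the cache
```

The application only ever calls `cache.get(...)`. It never touches `load_from_database` directly, which is what keeps it unaware of where the data came from.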

2. Read-aside cache

A read-aside cache strategy, also known as cache-aside or lazy-loading, is a caching mechanism where the application is responsible for retrieving the data from the underlying data store when a cache miss occurs. In this strategy, the application first checks the cache for the requested data. If the data is found in the cache (cache hit), the application uses the cached data. However, if the data is not present in the cache (cache miss), the application retrieves the data from the data store, updates the cache with the retrieved data, and then uses the data.

The read-aside cache strategy provides better control over the caching process, as the application can decide when and how to update the cache. However, it also adds complexity to the application code, as the application must handle cache misses and data retrieval logic. This approach can be beneficial in scenarios where cache misses are relatively infrequent, and the application wants to optimize cache usage based on specific data access patterns.

Here’s how it works:

- Cache Lookup: When the application needs data, it first asks the cache whether the data is present.

- Cache Hit: If the data is found in the cache, the application uses it directly and never contacts the data source.

- Cache Miss: If the data is not found in the cache, the application itself fetches the data from the underlying data source. The cache plays no part in this step.

- Data Population: After retrieving the data from the data source, the application writes it into the cache for future requests.

- Data Use: Finally, the application uses the retrieved data.
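The read-aside strategy, as defined at the start of this section, puts the miss handling in the application rather than in the cache. A minimal Python sketch of that idea follows. The names `get_user`, `query_database`, and the `DATABASE` and `cache` dictionaries are hypothetical, and skipping the cache update when a key does not exist is just one possible design choice.

```python
from typing import Dict, List, Optional

# Stand-ins for a real data store and a real cache (hypothetical data).
DATABASE: Dict[str, str] = {"user:1": "Alice", "user:2": "Bob"}
cache: Dict[str, str] = {}
db_reads: List[str] = []                 # records every read that hits the store


def query_database(key: str) -> Optional[str]:
    db_reads.append(key)
    return DATABASE.get(key)


def get_user(key: str) -> Optional[str]:
    """Application-side read path: the application, not the cache, handles misses."""
    value = cache.get(key)               # 1. the application checks the cache
    if value is not None:
        return value                     # 2. cache hit: use the cached data
    value = query_database(key)          # 3. cache miss: the application queries the store
    if value is not None:
        cache[key] = value               # 4. the application updates the cache
    return value                         # 5. the application uses the data


first = get_user("user:2")    # miss: the application reads DATABASE, then fills the cache
second = get_user("user:2")   # hit: served from the cache
```

Compared with the read-through strategy, the cache here is a passive store. All decisions about what to load and what to keep live in `get_user`, which gives the application more control at the cost of more code.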

The choice of cache read strategy depends on factors such as the application’s performance requirements, data consistency needs, and the characteristics of the underlying data source.

That’s all about cache read strategies. If you have any queries or feedback, please email us at contact@waytoeasylearn.com. Enjoy learning, enjoy system design!