Network Protocols

In this tutorial, we are going to discuss network protocols, another important system design concept. Network protocols play a crucial role in system design by enabling communication and data exchange between the components of a networked environment.

In computer networking, a network is a group of devices (computers, servers, etc.) connected through various communication channels (wired or wireless) to allow resource sharing, data exchange, and communication. Networks can vary in size, from small home networks to large networks like the Internet.

The devices within a network communicate with each other by following certain rules, known as network protocols. These network protocols are standardized methods for reliable and efficient data exchange.

Why Network Protocols?

- Network protocols are essential for enabling communication and data exchange between different devices and systems within a network.

- They serve as a set of rules and conventions that govern how data is transmitted, received, and processed across a network.

- They include features to detect and correct errors during data transmission: checksums, error detection codes, error correction algorithms, etc.

- Network protocols define the format of data packets so that devices can process transmitted data correctly.

- They specify how devices exchange messages: the sequence of messages, the types of messages (e.g., request, response), acknowledgements, etc.

- They implement flow control mechanisms to manage the rate of data transmission.

- Network protocols help manage network resources efficiently by specifying rules for data flow control, congestion avoidance, and quality of service (QoS). This ensures optimal utilization of available bandwidth and minimizes the risk of network congestion.

- Protocols provide a way to address and route data packets: how devices are identified, how addresses are assigned, and how routing decisions are made to send data to the desired destinations.

- Several protocols aim to make data transmission reliable. They confirm that data packets are delivered successfully, using mechanisms like acknowledgements, retransmissions, and error recovery.

- Some protocols include security features to protect data confidentiality and integrity during transmission. For example, Transport Layer Security (TLS) and its now-deprecated predecessor, Secure Sockets Layer (SSL), provide encryption and authentication mechanisms that protect sensitive information.

- Protocols are designed to scale well with growth in the size of networks.

- Network protocols enable the use of diagnostic and monitoring tools, such as packet analyzers, to troubleshoot issues, analyze network traffic, and optimize performance. These tools rely on the standardized structure of protocols to interpret and display network data.

- Protocols optimize data transfer by defining how information is packaged, transmitted, and reconstructed. This efficiency is crucial for minimizing network congestion, reducing latency, and ensuring timely and reliable delivery of data.

In summary, network protocols are fundamental to the functioning of modern networks, providing the necessary rules and standards to ensure effective, reliable, and secure communication between devices and systems. Their role is particularly crucial in supporting the complex and interconnected nature of today’s digital ecosystems.

The OSI model of Networking

The OSI (Open Systems Interconnection) model is a structured framework to understand the system of computer networking. The OSI model does not dictate specific technologies or protocols but rather provides a conceptual framework for understanding and designing network architectures. It divides the whole system into seven different layers.

Each layer has specific responsibilities. Each layer interacts with adjacent layers to facilitate communication. Here are the seven layers of the OSI model, from the highest to the lowest:

The application layer (Layer 7)

This is the only layer that directly interacts with data from the user. Software applications like web browsers and email clients rely on the application layer to initiate communications. Note, however, that client software applications are not part of the application layer; rather, the application layer is responsible for the protocols and data manipulation that the software relies on to present meaningful data to the user.

Application layer protocols include HTTP as well as SMTP (Simple Mail Transfer Protocol is one of the protocols that enables email communications).

The presentation layer (Layer 6)

This layer is primarily responsible for preparing data so that it can be used by the application layer; in other words, layer 6 makes the data presentable for applications to consume. The presentation layer is responsible for translation, encryption, and compression of data.

Two communicating devices may be using different encoding methods, so layer 6 is responsible for translating incoming data into a syntax that the application layer of the receiving device can understand.

If the devices are communicating over an encrypted connection, layer 6 is responsible for encrypting the data on the sender’s end and decrypting it on the receiver’s end, so that it can present the application layer with unencrypted, readable data.

Finally, the presentation layer is also responsible for compressing the data it receives from the application layer before delivering it to layer 5. This helps improve the speed and efficiency of communication by minimizing the amount of data that will be transferred.
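
The translation and compression duties above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular network stack implements layer 6: it assumes UTF-8 as the agreed character encoding and `zlib` as the compression scheme, leaves encryption out, and the function names are hypothetical.

```python
import zlib


def present_for_sending(text: str) -> bytes:
    """Sender side: translate characters into bytes, then compress them."""
    encoded = text.encode("utf-8")  # translation: characters -> agreed byte encoding
    return zlib.compress(encoded)   # compression: fewer bytes to hand down to layer 5


def present_for_application(payload: bytes) -> str:
    """Receiver side: undo the sender's steps in reverse order."""
    encoded = zlib.decompress(payload)  # decompression
    return encoded.decode("utf-8")      # translation: bytes -> characters


if __name__ == "__main__":
    message = "hello, layer 6! " * 50
    wire = present_for_sending(message)
    print(len(message.encode("utf-8")), "bytes before compression,", len(wire), "after")
    assert present_for_application(wire) == message
```

Repetitive text like this compresses well; already-compressed data such as JPEG images gains little, which is why real applications often skip this step for such content.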

The session layer (Layer 5)

This is the layer responsible for opening and closing communication between the two devices. The time between when the communication is opened and closed is known as the session. The session layer ensures that the session stays open long enough to transfer all the data being exchanged, and then promptly closes the session in order to avoid wasting resources.

The session layer also synchronizes data transfer with checkpoints. For example, if a 100 megabyte file is being transferred, the session layer could set a checkpoint every 5 megabytes. In the case of a disconnect or a crash after 52 megabytes have been transferred, the session could be resumed from the last checkpoint, meaning only 50 more megabytes of data need to be transferred. Without the checkpoints, the entire transfer would have to begin again from scratch.
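
The checkpoint arithmetic in this example can be written out as a small Python function. The name `resume_point` is hypothetical, and sizes are whole megabytes to match the example.

```python
def resume_point(transferred_mb: int, checkpoint_every_mb: int) -> int:
    """Return the last checkpoint (in MB) reached before the transfer was interrupted."""
    # Integer division counts the checkpoints fully passed: 52 // 5 == 10.
    return (transferred_mb // checkpoint_every_mb) * checkpoint_every_mb


total_mb = 100
last_checkpoint = resume_point(52, 5)   # 10 checkpoints * 5 MB = 50 MB
remaining_mb = total_mb - last_checkpoint
print(f"resume from {last_checkpoint} MB, {remaining_mb} MB left to send")
```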

The transport layer (Layer 4)

Layer 4 is responsible for end-to-end communication between the two devices. This includes taking data from the session layer and breaking it up into chunks called segments before sending it to layer 3. The transport layer on the receiving device is responsible for reassembling the segments into data the session layer can consume.

The transport layer is also responsible for flow control and error control. Flow control determines an optimal speed of transmission to ensure that a sender with a fast connection does not overwhelm a receiver with a slow connection. The transport layer performs error control on the receiving end by ensuring that the data received is complete, and requesting a retransmission if it isn’t.

Transport layer protocols include the Transmission Control Protocol (TCP) and the User Datagram Protocol (UDP).

The network layer (Layer 3)

The network layer is responsible for facilitating data transfer between two different networks. If the two communicating devices are on the same network, the network layer’s routing function is not needed. On the sender’s device, the network layer breaks segments from the transport layer into smaller units called packets, and on the receiving device it reassembles these packets. The network layer also finds the best physical path for the data to reach its destination; this is known as routing.

Network layer protocols include IP, the Internet Control Message Protocol (ICMP), the Internet Group Management Protocol (IGMP), and the IPsec suite.

Data Link Layer (Layer 2)

The data link layer is responsible for the node-to-node delivery of the message. The main function of this layer is to make sure data transfer is error-free from one node to another over the physical layer. When a packet arrives in a network, the data link layer is responsible for delivering it to the destination host using the host’s MAC address.

Physical Layer (Layer 1)

The lowest layer of the OSI reference model is the physical layer. This layer includes the physical equipment involved in the data transfer, such as cables, connectors, and hubs. This is also the layer where the data gets converted into a bit stream, which is a string of 1s and 0s. The physical layers of both devices must also agree on a signal convention so that the 1s can be distinguished from the 0s on both devices.
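
To make the layering concrete, here is a toy Python model of encapsulation: on the way down, each layer wraps the unit it receives from the layer above in its own header, and on the way up, each layer strips its header off again. The bracketed text headers are placeholders (real headers are binary), only layers 4, 3, and 2 are modeled, and the function names are hypothetical.

```python
# Placeholder, human-readable headers; real protocols use binary headers.
LAYERS = [
    ("transport", "TCP hdr"),  # layer 4: data becomes a segment
    ("network", "IP hdr"),     # layer 3: segment becomes a packet
    ("data link", "ETH hdr"),  # layer 2: packet becomes a frame
]


def encapsulate(data: str) -> str:
    """Sender: each layer, top to bottom, wraps what the layer above handed down."""
    unit = data
    for _layer, header in LAYERS:
        unit = f"[{header}]{unit}"
    return unit


def decapsulate(frame: str) -> str:
    """Receiver: each layer, bottom to top, removes its own header."""
    unit = frame
    for layer, header in reversed(LAYERS):
        prefix = f"[{header}]"
        if not unit.startswith(prefix):
            raise ValueError(f"{layer} layer expected {prefix!r}")
        unit = unit[len(prefix):]
    return unit


frame = encapsulate("GET /index.html")
print(frame)               # [ETH hdr][IP hdr][TCP hdr]GET /index.html
print(decapsulate(frame))  # GET /index.html
```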

Types of Network Protocols

There are various types of network protocols that serve different purposes and operate at different layers of the OSI model. Each protocol has its own set of rules, formats, and functions.

Here are some commonly used network protocols:

- Internet Protocol (IP)

- Transmission Control Protocol (TCP)

- User Datagram Protocol (UDP)

- Hypertext Transfer Protocol (HTTP)

- Hypertext Transfer Protocol Secure (HTTPS)

- File Transfer Protocol (FTP)

- Simple Mail Transfer Protocol (SMTP)

- Domain Name System (DNS)

- Remote Procedure Call (RPC)

- Dynamic Host Configuration Protocol (DHCP)

Let’s move forward to discuss some of them in detail.

1. Internet Protocol (IP)

The Internet Protocol (IP) is a fundamental communication protocol that enables the transmission of data across networks, including the global network known as the Internet.

Internet Protocol (IP) is a network layer protocol. It allows data packets to be routed and addressed in order to pass through networks and reach their destination. When one machine wants to send data to another machine, it sends it in the form of IP packets.

An IP packet is the fundamental unit of data that is transmitted over the internet or any other network that uses the Internet Protocol (IP). The IP packet has two main parts: the IP header and the data (payload). The packet’s total size includes both the header and the data section.

The IP header contains information about the packet, such as the source and destination IP addresses, the total size of the packet, the IP version, and other control information.

The data section contains the actual data being transmitted, e.g., web pages, images, or any other type of content.

Other important fields in the IP header include: 1) the Time-to-Live (TTL) field, which limits the number of hops a packet can make before it is discarded, and 2) the Protocol field, which identifies the protocol carried in the data section (for example, TCP or UDP).
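
As an illustration of the header layout, the Python sketch below packs and parses a minimal 20-byte IPv4 header with the standard `struct` and `socket` modules. It assumes no IP options and no fragmentation, sets the identification and flag fields to zero, and the helper names are hypothetical; in practice the operating system builds these headers for you.

```python
import socket
import struct

IPV4_HEADER = struct.Struct("!BBHHHBBH4s4s")  # 20 bytes, network (big-endian) byte order


def ipv4_checksum(header: bytes) -> int:
    """One's-complement sum of the header's 16-bit words, then complemented."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total >> 16:                       # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_ipv4_header(src: str, dst: str, payload_len: int,
                      ttl: int = 64, protocol: int = 6) -> bytes:
    """Build a minimal 20-byte IPv4 header (no options, no fragmentation)."""
    version_ihl = (4 << 4) | 5               # version 4, header length = 5 x 32-bit words
    total_length = IPV4_HEADER.size + payload_len   # header + data
    header = IPV4_HEADER.pack(
        version_ihl, 0, total_length,
        0, 0,                                # identification, flags/fragment offset
        ttl, protocol, 0,                    # checksum is 0 while it is being computed
        socket.inet_aton(src), socket.inet_aton(dst),
    )
    checksum = ipv4_checksum(header)
    return header[:10] + struct.pack("!H", checksum) + header[12:]


def parse_ipv4_header(packet: bytes) -> dict:
    """Read the main fields back out of the first 20 bytes of a packet."""
    fields = IPV4_HEADER.unpack(packet[:IPV4_HEADER.size])
    version_ihl, _tos, total_length, _ident, _frag, ttl, protocol, _csum, src, dst = fields
    return {
        "version": version_ihl >> 4,
        "total_length": total_length,
        "ttl": ttl,
        "protocol": protocol,                # 6 = TCP, 17 = UDP
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
        # Re-summing a header that includes a valid checksum yields 0.
        "checksum_ok": ipv4_checksum(packet[:IPV4_HEADER.size]) == 0,
    }


header = build_ipv4_header("192.168.15.10", "10.0.0.1", payload_len=100)
print(parse_ipv4_header(header))
```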

When a device sends a packet, it first determines the IP address of the destination device. The packet is then sent to the first hop on the network, typically a router. The router examines the destination IP address in the header and determines the next hop on the network to which the packet should be forwarded. This process continues until the packet reaches its final destination.

Routing algorithms are used to determine the best path for the packets to take through the network. We will cover various routing algorithms in a separate tutorial.
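
The per-hop forwarding decision can be sketched with Python’s standard `ipaddress` module. This sketch assumes a hypothetical routing table and shows longest-prefix matching (the most specific matching route wins) together with the TTL decrement; it does not compute the routes themselves, which is the job of the routing algorithms.

```python
import ipaddress
from typing import Optional, Tuple

# Hypothetical routing table for one router: (destination network, next hop).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "isp-gateway"),     # default route, matches everything
    (ipaddress.ip_network("10.0.0.0/8"), "core-router"),
    (ipaddress.ip_network("10.1.0.0/16"), "branch-office"),
]


def next_hop(destination: str) -> str:
    """Pick the matching route with the longest prefix (the most specific one)."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    _net, hop = max(matches, key=lambda route: route[0].prefixlen)
    return hop


def forward(destination: str, ttl: int) -> Optional[Tuple[str, int]]:
    """Decrement the TTL; drop the packet if it reaches 0, else choose the next hop."""
    ttl -= 1
    if ttl <= 0:
        return None  # dropped (real routers also send an ICMP "time exceeded" message)
    return next_hop(destination), ttl


print(forward("10.1.2.3", 64))  # ('branch-office', 63)
print(forward("10.9.9.9", 64))  # ('core-router', 63)
print(forward("8.8.8.8", 1))    # None
```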

What is an IP address?

IP addresses are like digital addresses that identify and locate devices on a network. Each device that connects to the Internet is assigned one, and public IP addresses are unique across the Internet. IPv4 addresses are written as four numbers from 0 to 255 separated by periods, such as 192.168.15.10; together, these four numbers encode a single 32-bit value. There are two main types of IP addresses: IPv4 and IPv6.

IPv4 vs. IPv6

IPv4 was the first widely deployed version of IP, introduced in 1983. It uses a 32-bit address scheme, so it can accommodate a maximum of 2^32 = 4,294,967,296 unique IP addresses. Despite its limitations, IPv4 still carries a large share of internet traffic today. Note that IPv4 does not encrypt data on its own; encryption is added by protocols such as IPsec or TLS.

With the increasing demand for IP addresses, IPv6 was developed in the 1990s to overcome the limitations of IPv4. It uses a 128-bit address space, capable of accommodating 2^128 ≈ 3.4 × 10^38 unique IP addresses. It is also known as IPng (Internet Protocol next generation).

IPv6 offers improved routing, packet processing, and security features compared to IPv4. However, IPv6 is not backward-compatible with IPv4 (an IPv4-only device cannot talk directly to an IPv6-only device), so upgrading can be challenging.
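
The link between the dotted notation and the underlying number, and the difference in address size between the two versions, can be checked with a short Python sketch. The hand-written `dotted_quad_to_int` helper is hypothetical; `ipaddress` is Python’s standard module and is used here to cross-check it. Note that 192.168.x.x addresses are private, so they are reused on many local networks rather than being unique on the Internet.

```python
import ipaddress


def dotted_quad_to_int(address: str) -> int:
    """Combine the four 8-bit numbers of an IPv4 address into one 32-bit value."""
    parts = address.split(".")
    if len(parts) != 4:
        raise ValueError(f"expected 4 numbers, got {len(parts)}")
    value = 0
    for part in parts:
        octet = int(part)
        if not 0 <= octet <= 255:
            raise ValueError(f"number out of range: {octet}")
        value = (value << 8) | octet    # shift previous numbers left by 8 bits
    return value


v4 = ipaddress.ip_address("192.168.15.10")
v6 = ipaddress.ip_address("2400:cb00:2048:1::c629:d7a2")

print(dotted_quad_to_int("192.168.15.10"), int(v4))                  # 3232239370 3232239370
print(v4.version, v4.max_prefixlen, 2 ** v4.max_prefixlen)           # 4 32 4294967296
print(v6.version, v6.max_prefixlen, f"{2 ** v6.max_prefixlen:.1e}")  # 6 128 3.4e+38
```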

2. Transmission Control Protocol (TCP)

When sending large files like images, videos or emails, a single IP packet may not be sufficient. In these cases, multiple packets are used to transmit the data. Unfortunately, this increases the risk of packets not reaching their destination, getting lost, or arriving in the wrong order. That’s where the transport layer protocol comes into the picture.

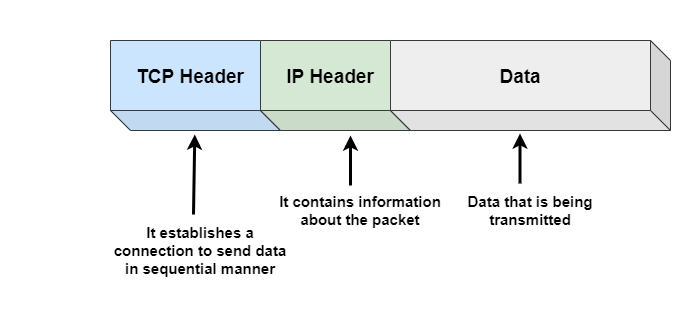

Transmission Control Protocol (TCP) is a transport layer protocol, which is built on top of the Internet Protocol (IP). It is used in conjunction with the IP to provide a complete suite of communication protocols for transmitting data.

- TCP breaks data into small pieces called segments and numbers them in sequence so that they can be put back in the correct order at the receiving end. Lost segments can also be retransmitted using TCP. Overall, IP is responsible for delivering the packets, while TCP makes the delivery reliable and puts the data back in the correct order.

- When a machine wants to communicate with another machine using TCP, it first establishes a connection with the destination machine through a handshake, an exchange of sequenced acknowledgement messages. Once the connection is established, the two machines can exchange data in both directions. In simple words, TCP is a connection-oriented protocol: a connection must be established between applications before data transfer can occur.

- It also provides error-checking mechanisms to ensure that the data is received without any errors.
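
The numbering-and-reordering idea can be simulated in Python. This is a simplified sketch, not real TCP: as in TCP, each sequence number is the byte offset of a segment’s first byte, but acknowledgements, retransmission, and sequence-number wraparound are not modeled, and the function names and segment size are hypothetical.

```python
import random
from typing import List, Tuple

Segment = Tuple[int, bytes]  # (sequence number, payload)


def segment(data: bytes, mss: int, initial_seq: int = 0) -> List[Segment]:
    """Split data into chunks of at most `mss` bytes, numbered by byte offset."""
    return [(initial_seq + offset, data[offset:offset + mss])
            for offset in range(0, len(data), mss)]


def reassemble(segments: List[Segment], initial_seq: int = 0) -> bytes:
    """Sort by sequence number, drop duplicates, and refuse to skip over gaps."""
    by_seq = dict(segments)              # a duplicate overwrites the identical copy
    out = bytearray()
    expected = initial_seq
    for seq in sorted(by_seq):
        if seq != expected:
            raise ValueError(f"missing bytes starting at sequence {expected}")
        out += by_seq[seq]
        expected += len(by_seq[seq])
    return bytes(out)


message = b"The quick brown fox jumps over the lazy dog"
segments = segment(message, mss=8, initial_seq=1000)
random.shuffle(segments)                 # simulate out-of-order arrival
segments.append(segments[0])             # simulate a duplicate (e.g. a retransmission)
assert reassemble(segments, initial_seq=1000) == message
print("reassembled", len(message), "bytes from", len(segments), "segments")
```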

Let’s explore how TCP works:

Connection establishment: The sender and receiver establish a connection using a three-way handshake before transmitting data. The sender sends a SYN (synchronize) packet to the receiver, the receiver responds with a SYN-ACK (synchronize-acknowledge) packet, and the sender sends an ACK (acknowledgement) packet to complete the connection.

Packet creation: The sender divides the data into TCP segments, each containing a sequence number so that the data can be reassembled correctly at the receiver. Segments also carry other information, such as the source port number, the destination port number, and a checksum for error detection.

Packet transmission: The TCP segments are sent over the network to the destination IP address and port number. TCP uses flow control mechanisms to ensure that the sender does not overwhelm the receiver with too much data at once.

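Packet delivery: The destination receives the TCP segments, acknowledges them, and reassembles them into the original data. If a segment is lost or corrupted, the receiver does not acknowledge it, and the sender retransmits it after a timeout (or sooner, when duplicate acknowledgements signal the gap).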

Connection termination: Once data transmission is complete, the sender and receiver initiate a connection termination using a four-way handshake. The sender sends a FIN (finish) packet to the receiver, the receiver sends an ACK packet to acknowledge the FIN, and then sends its own FIN packet. The sender responds with an ACK packet to acknowledge the receiver’s FIN packet.
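
From an application’s point of view, most of these steps are handled by the operating system. The Python sketch below, using the standard `socket` module on the loopback interface, marks where each phase happens: the kernel performs the three-way handshake when `create_connection` connects (`accept` then returns the already-established connection), `sendall` and `recv` cover transmission and delivery, and `shutdown` and closing the sockets send the FIN packets. The upper-casing echo service is a hypothetical example.

```python
import socket
import threading


def serve_once(server: socket.socket) -> None:
    """Hypothetical service: accept one connection and echo data back upper-cased."""
    conn, _addr = server.accept()   # connection already set up by the kernel (SYN, SYN-ACK, ACK)
    with conn:
        while True:
            data = conn.recv(1024)
            if not data:            # empty read: the client sent FIN
                break
            conn.sendall(data.upper())
    # leaving the with-block closes conn, which sends the server's FIN


def main() -> bytes:
    server = socket.create_server(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    port = server.getsockname()[1]
    worker = threading.Thread(target=serve_once, args=(server,), daemon=True)
    worker.start()
    with socket.create_connection(("127.0.0.1", port)) as client:  # three-way handshake
        client.sendall(b"hello over tcp")     # transmission: the kernel segments and retransmits
        client.shutdown(socket.SHUT_WR)       # send FIN: the client has no more data
        reply = b""
        while chunk := client.recv(1024):     # delivery: bytes arrive complete and in order
            reply += chunk
    worker.join()
    server.close()
    return reply


if __name__ == "__main__":
    print(main())  # b'HELLO OVER TCP'
```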

Use cases of TCP in real life applications

TCP is used by a wide range of applications. Here are some examples:

- Email applications like Outlook or Gmail use the Simple Mail Transfer Protocol (SMTP) over TCP to send email messages, and protocols such as IMAP or POP3, also over TCP, to retrieve them.

- When you browse the internet, your web browser uses HTTP (Hypertext Transfer Protocol) or its secure version, HTTPS, which relies on TCP for communication. TCP ensures that web pages are reliably and accurately delivered to your browser, facilitating ordered data transfer.

- When you transfer files using protocols like FTP or SFTP, these protocols use TCP to ensure the reliable transmission of the data.

- Secure Shell (SSH), used for secure remote access and command execution on a server, operates over TCP. TCP’s reliability is crucial for ensuring secure and ordered communication between the client and server during remote sessions.

- Remote desktop software uses TCP to transmit desktop screen data and user inputs between the client and server.

- Database management systems, like MySQL and PostgreSQL, use TCP to establish connections between clients and database servers. TCP ensures the integrity and reliability of queries, responses, and data transfers during database interactions.

- RESTful APIs, which are commonly used in web services and cloud applications, often rely on HTTP or HTTPS over TCP. This ensures reliable communication between clients and servers when retrieving or sending data.

- Some VPN protocols rely on TCP. For example, the Point-to-Point Tunneling Protocol (PPTP) uses a TCP connection for its control channel, where TCP’s reliability helps keep the tunnel stable. Others, such as the Layer 2 Tunneling Protocol (L2TP), run over UDP instead.

3. User Datagram Protocol (UDP)

UDP is a connectionless transport layer protocol. Unlike TCP, UDP does not provide reliability, flow control, or error recovery. As a result, UDP is useful where TCP’s reliability mechanisms are unnecessary and speed and low latency matter more than reliability, so that dropped packets can be tolerated without significant impact on the application.

Let’s explore how UDP works:

Packet creation: The sender encapsulates the data into a UDP datagram, whose header includes the source and destination ports, the length of the datagram, and a checksum.

Packet transmission: The UDP datagram is then sent over the network to the destination IP address and port number. UDP does not establish a connection before transmitting data, so the data is simply sent to the destination without any handshake or connection setup.

Packet delivery: The destination receives the UDP datagram and processes the data within it. UDP’s checksum lets the receiver discard corrupted datagrams, but UDP does not detect lost datagrams, restore their order, or retransmit them. So the receiving application must implement its own loss detection and recovery mechanisms if required.

Packet acknowledgement: UDP itself sends no acknowledgements. If the receiving application requires acknowledgement of receipt, it can send a response packet back to the sender, indicating that the data was received successfully.
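
A minimal Python sketch of this flow on the loopback interface, using the standard `socket` module: the sender transmits a datagram without any handshake, and the receiving application chooses to send its own acknowledgement. The `ACK` message format, the function name, and the one-second timeout are assumptions for illustration; what to do on a timeout (resend or give up) is left to the application, because UDP does not decide it.

```python
import socket
from typing import Optional


def udp_round_trip(message: bytes, timeout: float = 1.0) -> Optional[bytes]:
    """Send one datagram, let the receiver acknowledge it, and return the ack (or None)."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))          # OS picks a free port
        receiver.settimeout(timeout)
        sender.settimeout(timeout)

        # Packet transmission: no connection setup, the datagram is just sent.
        sender.sendto(message, receiver.getsockname())

        # Packet delivery: one recvfrom returns one whole datagram plus the sender's address.
        data, sender_addr = receiver.recvfrom(2048)

        # Packet acknowledgement: the application chooses to reply; UDP does not.
        receiver.sendto(b"ACK " + data, sender_addr)

        try:
            ack, _addr = sender.recvfrom(2048)
            return ack
        except socket.timeout:
            return None                          # caller decides whether to resend
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    print(udp_round_trip(b"temperature=21.5"))   # b'ACK temperature=21.5'
```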

Use cases of UDP in real-life applications

User Datagram Protocol (UDP) is commonly used in various real-life applications where low latency and rapid data transmission are prioritized over the guarantees of reliability provided by protocols like TCP. Here are some use cases of UDP in real-world applications:

- TCP is a reliable protocol, but this reliability comes at the cost of latency. In applications like online gaming, live video streaming, or video conferencing (Skype or Zoom), low latency is crucial for a good user experience, so UDP can be a better choice. Here, dropped packets can be tolerated in favor of speed.

- Low-power devices like IoT sensors have limited processing power and battery life, and the overhead of establishing a TCP connection can be too high. So in such cases, one can use lightweight protocols like Constrained Application Protocol (CoAP), which rely on UDP.

- DHCP, responsible for dynamically assigning IP addresses to devices on a network, commonly utilizes UDP. DHCP messages are small and involve simple exchanges, making UDP a suitable choice for quickly configuring network devices without the overhead of TCP connections.

- DNS (Domain Name System) primarily uses UDP for communication between DNS clients and servers. If a response is too large to fit in a UDP message, the server marks it as truncated and the client retries the query over TCP, so TCP acts as a fallback. DNS also uses TCP for larger operations such as zone transfers between servers.

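- TFTP (Trivial File Transfer Protocol), a simple file transfer protocol, operates over UDP. It gives up features such as authentication and directory listing for simplicity and handles reliability with its own minimal acknowledgements instead of relying on TCP, which makes it suitable for use cases such as transferring configuration files to network devices during the boot process.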

4. HyperText Transfer Protocol (HTTP)

Hypertext Transfer Protocol (HTTP) is the foundation of any data exchange on the Web. It is an application layer protocol that facilitates the transfer of hypermedia documents, such as HTML (Hypertext Markup Language) documents. HTTP is a stateless protocol, meaning that each request from a client to a server is independent and unrelated to previous requests.

In HTTP/1.1 and HTTP/2, it is built on top of the Transmission Control Protocol (TCP), and it provides a higher level of abstraction that allows developers to focus on their applications rather than the details of TCP and IP packets. Here are some key aspects of HTTP.

Request-Response Model:

HTTP follows a simple request-response model. A client, typically a web browser, sends an HTTP request to a server, and the server responds with the requested information or an error message.

Uniform Resource Identifier (URI):

URIs, including URLs (Uniform Resource Locators), are used to identify and locate resources on the web. In an HTTP request, the client specifies the URI of the resource it wants, and the server responds accordingly.

Methods (Verbs):

HTTP defines several methods, also known as verbs, to indicate the action the client wants to perform on a resource. Common methods include:

- GET: Retrieve a resource.

- POST: Submit data to be processed to a specified resource.

- PUT: Update a resource or create a new resource if it doesn’t exist.

- DELETE: Request removal of a resource.

- HEAD: Retrieve only the headers of a resource without the actual data.

Status Codes:

HTTP status codes are three-digit numbers returned by the server to indicate the outcome of the request. Common status codes include:

- 200 OK: The request was successful.

- 404 Not Found: The requested resource could not be found.

- 500 Internal Server Error: A generic error message returned by the server.

Headers:

HTTP headers provide additional information about the request or response. They include details such as content type, content length, caching directives, and more. Headers are essential for controlling the behavior of the communication between the client and the server.
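
Because HTTP/1.1 is a text-based protocol, a request and a response can be built and read with plain string handling. The Python sketch below uses hypothetical helper names and a made-up response; it covers only the request line, the status line, headers, and body, and ignores details such as chunked transfer encoding.

```python
from typing import Dict, Optional, Tuple


def build_request(method: str, host: str, path: str,
                  extra_headers: Optional[Dict[str, str]] = None) -> bytes:
    """Format an HTTP/1.1 request: request line, headers, then a blank line."""
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}"]  # Host is mandatory in HTTP/1.1
    for name, value in (extra_headers or {}).items():
        lines.append(f"{name}: {value}")
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def parse_response(raw: bytes) -> Tuple[int, Dict[str, str], bytes]:
    """Split a raw HTTP/1.1 response into status code, headers, and body."""
    head, _sep, body = raw.partition(b"\r\n\r\n")      # a blank line ends the headers
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    status_code = int(status_line.split(" ", 2)[1])    # e.g. "HTTP/1.1 404 Not Found"
    headers = {}
    for line in header_lines:
        name, _colon, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return status_code, headers, body


request = build_request("GET", "www.example.com", "/index.html", {"Accept": "text/html"})
print(request.decode("ascii"))

raw_response = (b"HTTP/1.1 404 Not Found\r\n"
                b"Content-Type: text/plain\r\n"
                b"Content-Length: 9\r\n"
                b"\r\n"
                b"not found")
status, headers, body = parse_response(raw_response)
print(status, headers["content-type"], body)  # 404 text/plain b'not found'
```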

Stateless Protocol:

HTTP is stateless, meaning that each request from a client is independent of previous requests. The server does not retain any information about the client’s previous requests, which simplifies the protocol but requires additional mechanisms (such as cookies) for handling stateful interactions.

Versioning:

HTTP has gone through multiple versions. HTTP/1.1 is still widely used, and HTTP/2 introduced improvements in performance, multiplexing, and header compression. HTTP/3, the most recent version, runs over the QUIC transport protocol (which is built on UDP rather than TCP) and is gaining adoption.

Secure Version (HTTPS):

HTTPS (Hypertext Transfer Protocol Secure) is a secure extension of HTTP. It uses encryption protocols such as TLS (Transport Layer Security) to ensure the confidentiality and integrity of data exchanged between the client and server. URLs that use HTTPS begin with “https://” instead of “http://”.

Cookies:

Cookies are used to store small pieces of data on the client’s side, enabling stateful interactions in a stateless protocol. They are commonly used for session management, tracking user preferences, and maintaining user authentication.
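
A minimal Python sketch of cookie-based sessions using the standard `http.cookies.SimpleCookie` class: the server issues a random session ID in a `Set-Cookie` header, and because each request is independent, it looks the user up again from the `Cookie` header on every request. The in-memory `SESSIONS` store and the function names are hypothetical.

```python
import secrets
from http.cookies import SimpleCookie
from typing import Dict, Optional

SESSIONS: Dict[str, str] = {}  # hypothetical server-side store: session id -> user name


def login(user: str) -> str:
    """Server: start a session and return the value for a Set-Cookie header."""
    session_id = secrets.token_hex(16)
    SESSIONS[session_id] = user
    cookie = SimpleCookie()
    cookie["session_id"] = session_id
    cookie["session_id"]["path"] = "/"
    cookie["session_id"]["httponly"] = True   # not readable from page scripts
    return cookie["session_id"].OutputString()


def current_user(cookie_header: str) -> Optional[str]:
    """Server: HTTP keeps no state, so recover the user from the request's Cookie header."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    morsel = cookie.get("session_id")
    return SESSIONS.get(morsel.value) if morsel is not None else None


set_cookie = login("alice")
print("Set-Cookie:", set_cookie)
# The browser sends back only the name=value pair on later requests.
cookie_header = set_cookie.split(";")[0]
print(current_user(cookie_header))  # alice
```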

RESTful APIs:

HTTP is often used as the foundation for building RESTful (Representational State Transfer) APIs. RESTful APIs use HTTP methods to perform operations on resources, and data is typically exchanged in formats like JSON or XML.

HTTP has several benefits that make it a popular choice for transmitting information over the web. With HTTP/1.1 persistent connections, a client can reuse one TCP connection for many requests, which saves CPU time and memory compared with opening a new connection for each request and reduces network congestion. HTTP can also report errors (through status codes) without closing the connection. However, plain HTTP has no encryption, so it is not a secure option for transmitting sensitive information.

5. Hypertext Transfer Protocol Secure (HTTPS)

Hypertext Transfer Protocol Secure (HTTPS) is a secured version of Hypertext Transfer Protocol (HTTP). Unlike HTTP, which transfers data as plain text, HTTPS transfers data in an encrypted format, which protects it from being read or modified by attackers while packets are in transit.

It is often used when sensitive information like passwords and financial transactions is transmitted. But HTTPS may be slower than HTTP due to the overhead of the encryption and decryption process.

Key features and aspects of HTTPS include:

Encryption with SSL/TLS:

HTTPS uses TLS (Transport Layer Security), the successor to the now-deprecated SSL (Secure Sockets Layer), to encrypt the data transmitted between the client (web browser) and the server. This encryption ensures that the information exchanged, including usernames, passwords, and financial transactions, is protected from eavesdropping and tampering.

URL Scheme:

URLs that use HTTPS start with “https://” instead of the non-secure “http://”. This indicates to users that the communication with the website is encrypted and secure.

Data Integrity:

The use of cryptographic protocols in HTTPS not only encrypts the data but also ensures its integrity. This means that the data received by the client has not been altered during transmission.

Authentication:

HTTPS provides a level of authentication, helping users verify that they are communicating with the legitimate website and not an impostor. This is achieved through digital certificates issued by trusted Certificate Authorities (CAs).
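
On the client side, encryption, integrity, and certificate checks are mostly configured rather than implemented. The Python sketch below uses the standard `ssl` module: `create_default_context()` enables certificate verification against the system’s trusted CAs and hostname checking, and the minimum version of TLS 1.2 is an assumption chosen for the example. The `fetch_over_https` helper is hypothetical and needs network access, so it is only defined, not called.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    ctx = ssl.create_default_context()            # verifies certificates and hostnames
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older SSL/TLS versions
    return ctx


def fetch_over_https(host: str, path: str = "/") -> bytes:
    """Hypothetical helper: send a plain HTTP request inside a TLS connection."""
    ctx = make_client_context()
    with socket.create_connection((host, 443)) as raw_sock:
        # wrap_socket runs the TLS handshake, including certificate and hostname checks
        with ctx.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            request = f"GET {path} HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
            tls_sock.sendall(request.encode("ascii"))
            chunks = []
            while chunk := tls_sock.recv(4096):
                chunks.append(chunk)
    return b"".join(chunks)


if __name__ == "__main__":
    ctx = make_client_context()
    print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```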

Search Engine Ranking:

Search engines such as Google use HTTPS as a signal in their search rankings. Having HTTPS not only enhances security but can also positively impact a website’s visibility and trustworthiness.

Security Headers:

Websites can implement security headers, such as HTTP Strict Transport Security (HSTS), to enforce the use of HTTPS and protect against certain types of attacks, like man-in-the-middle attacks.

6. File Transfer Protocol (FTP)

File Transfer Protocol (FTP) is a standard network protocol used to transfer files between a client and a server on a computer network. It runs on top of Transmission Control Protocol (TCP) and uses two kinds of TCP connections: a control connection and a data connection. The control connection (by default to server port 21) carries control information such as usernames, passwords, and commands to retrieve and store files, and it stays open for the whole session. A data connection is opened to transfer the actual file contents (or a directory listing) and is typically closed when that transfer finishes.

FTP has several advantages: it can transfer large files and many files in one session, it can resume an interrupted transfer instead of starting over, and many FTP clients can schedule transfers.

FTP has some disadvantages as well. It lacks security: data, usernames, and passwords are transferred in plain text, which makes them vulnerable to malicious actors. Because plain FTP offers no encryption, it is unsuitable where industry standards require encrypted data transfer.

FTP requires user authentication to access files on the server. Users typically provide a username and password to log in. However, FTP does not encrypt login credentials, making it susceptible to eavesdropping. For secure authentication and transfers, you can use FTPS (FTP over TLS) or SFTP (SSH File Transfer Protocol), which, despite its name, is a separate protocol that runs over SSH rather than an extension of FTP.
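
The two-connection design shows up clearly in passive mode, where the client sends `PASV` over the control connection and the server answers with a `227` reply describing where to open the data connection. The reply encodes the IPv4 address and port as six comma-separated numbers, with the port split into two bytes (`p1 * 256 + p2`). The parser below is a hypothetical Python helper, and the sample reply is made up.

```python
import re
from typing import Tuple

PASV_NUMBERS = re.compile(r"\((\d+),(\d+),(\d+),(\d+),(\d+),(\d+)\)")


def parse_pasv(reply: str) -> Tuple[str, int]:
    """Extract the data-connection address from a 227 reply on the control connection."""
    if not reply.startswith("227"):
        raise ValueError(f"unexpected reply: {reply!r}")
    match = PASV_NUMBERS.search(reply)
    if match is None:
        raise ValueError(f"no address in reply: {reply!r}")
    h1, h2, h3, h4, p1, p2 = (int(n) for n in match.groups())
    return f"{h1}.{h2}.{h3}.{h4}", p1 * 256 + p2   # port is sent as high byte, low byte


host, port = parse_pasv("227 Entering Passive Mode (192,168,1,20,195,80).")
print(host, port)  # 192.168.1.20 50000
```

The client would then open a second TCP connection to that host and port for the file data, while commands keep flowing over the control connection.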

7. Simple Mail Transfer Protocol (SMTP)

Simple Mail Transfer Protocol (SMTP) is a standard protocol used for the transmission of electronic mail (email) across networks. SMTP is part of the application layer of the Internet Protocol Suite and works in conjunction with other email-related protocols like POP3 (Post Office Protocol 3) and IMAP (Internet Message Access Protocol) to handle the sending, receiving, and storage of emails.

SMTP is a fundamental protocol for email communication, serving as the backbone for the transmission of messages across the internet.

SMTP is primarily responsible for sending outgoing email, both from an email client to a mail server and between mail servers. It is used to transmit the content, recipient addresses, and other relevant information of the email message.

SMTP follows a client-server model. An email client (such as Microsoft Outlook or Mozilla Thunderbird) acts as the client, and an email server (Mail Transfer Agent or MTA) acts as the server. The client initiates the connection to the server to send an email.

SMTP uses a simple text-based protocol for communication. The message format includes headers and body. Headers contain information such as sender and recipient addresses, subject, and date, while the body contains the actual content of the email.

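SMTP traditionally uses port 25, which today is mainly used for relaying mail between servers. For submitting email from clients, port 587 is used, typically with the STARTTLS command to upgrade the connection to TLS. Port 465 is used for SMTP over implicit TLS (often called SMTPS); it was once considered deprecated, but RFC 8314 now recommends it for mail submission.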
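
In Python, the message format (headers and body) and the SMTP session are handled by the standard `email.message` and `smtplib` modules. The sketch below builds a message and defines a hypothetical `submit` helper for port 587 with STARTTLS; the addresses, host, and credentials are placeholders, and `submit` needs a real mail server, so it is not called.

```python
import smtplib
import ssl
from email.message import EmailMessage


def build_message(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Headers (From, To, Subject, ...) plus a plain-text body."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def submit(msg: EmailMessage, host: str, user: str, password: str) -> None:
    """Hypothetical helper: submit via port 587, upgrading to TLS with STARTTLS."""
    with smtplib.SMTP(host, 587) as smtp:
        smtp.starttls(context=ssl.create_default_context())  # verify the server certificate
        smtp.login(user, password)
        smtp.send_message(msg)  # issues the MAIL FROM, RCPT TO and DATA commands


msg = build_message("alice@example.com", "bob@example.com", "Hello", "Hi Bob")
print(msg.as_string())
```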

8. Domain Name System (DNS)

The Domain Name System (DNS) is a hierarchical and distributed naming system that translates user-friendly domain names into numerical IP addresses, facilitating the routing of data on the Internet. DNS plays a crucial role in enabling users to access websites and services using human-readable domain names instead of numeric IP addresses.

Each device connected to the Internet has an IP address, which other machines use to find it. DNS servers eliminate the need for humans to memorize IP addresses such as 192.168.1.1 (in IPv4), or longer hexadecimal IP addresses such as 2400:cb00:2048:1::c629:d7a2 (in IPv6).

Why is DNS needed?

Every host is identified by its IP address, but numbers are hard for people to remember, and a site’s IP addresses can change over time. A mapping from domain names to IP addresses is therefore required, and DNS provides it by converting website domain names into their numerical IP addresses.

Domain Name Components:

A fully qualified domain name (FQDN) consists of multiple components, separated by dots. For example, in the FQDN “www.waytoeasylearn.com”:

- “www” is the host or subdomain.

- “waytoeasylearn” is the second-level domain.

- “com” is the top-level domain (TLD).

What is a DNS Server?

A DNS server is a computer with a database containing the public IP addresses associated with domain names, so it can tell a user’s device which IP address a name should lead to. DNS acts like a phone book for the internet. Whenever people type domain names, like gmail.com or yahoo.com, into the address bar of web browsers, DNS finds the right IP address. The site’s IP address is what directs the device to the correct place to access the site’s data.

Once the DNS server finds the correct IP address, the browser uses that address to send requests to content delivery network (CDN) edge servers or origin servers, and the user can then access the information on the website. In other words, DNS starts the whole process by finding the IP address that corresponds to the domain name in a website’s uniform resource locator (URL).

Name Resolution Process

When a user enters a domain name in a web browser, the operating system or a local DNS resolver initiates a process to resolve the domain to its corresponding IP address. The steps include:

- Checking the local cache for a previous resolution.

- Contacting the local DNS resolver.

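- If no cached answer exists, querying the DNS hierarchy (root servers, then top-level domain servers, and finally the domain’s authoritative servers) to obtain the IP address.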
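
The steps above can be sketched in Python with a hard-coded, hypothetical hierarchy standing in for real name servers: the root server refers the query to the `.com` server, which refers it to the domain’s authoritative server, which returns the address. The address 203.0.113.10 is from a range reserved for documentation, and the sketch only handles names of the form host.domain.tld and ignores TTLs.

```python
from typing import Dict, List, Tuple

# Hypothetical, hard-coded stand-ins for real name servers.
ROOT_SERVER = {"com": "tld-com-server"}                                   # knows TLD servers
TLD_SERVERS = {"tld-com-server": {"waytoeasylearn.com": "ns1-waytoeasylearn"}}
AUTHORITATIVE = {"ns1-waytoeasylearn": {"www.waytoeasylearn.com": "203.0.113.10"}}


def resolve(fqdn: str, cache: Dict[str, str]) -> Tuple[str, List[str]]:
    """Resolve a name of the form host.domain.tld; return (address, servers queried)."""
    name = fqdn.rstrip(".")
    if name in cache:                              # step 1: local cache, no queries needed
        return cache[name], []
    labels = name.split(".")
    tld = labels[-1]
    domain = ".".join(labels[-2:])
    tld_server = ROOT_SERVER[tld]                  # root: "ask the .com servers"
    auth_server = TLD_SERVERS[tld_server][domain]  # TLD: "ask the domain's name server"
    address = AUTHORITATIVE[auth_server][name]     # authoritative: the actual answer
    cache[name] = address                          # remember it for next time
    return address, ["root", tld_server, auth_server]


cache: Dict[str, str] = {}
print(resolve("www.waytoeasylearn.com", cache))   # first lookup walks the hierarchy
print(resolve("www.waytoeasylearn.com.", cache))  # second lookup is served from the cache
```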

DNS Caching

To improve efficiency and reduce the load on DNS servers, DNS resolvers and servers implement caching. Records obtained from authoritative DNS servers are stored in caches for a specified time (Time-to-Live or TTL), allowing subsequent queries for the same domain to be resolved more quickly.
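TTL-based caching can be sketched as a dictionary that stores each answer together with its expiry time. The `TTLCache` class is a hypothetical illustration, not any particular resolver's implementation; the clock is injectable so expiry can be demonstrated without waiting, and the domain and address are placeholder values.

```python
import time


class TTLCache:
    """Keep DNS answers only until their Time-to-Live (in seconds) expires."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = {}   # name -> (ip, absolute expiry time)

    def put(self, name, ip, ttl):
        self._entries[name] = (ip, self._clock() + ttl)

    def get(self, name):
        entry = self._entries.get(name)
        if entry is None:
            return None              # miss: the resolver must ask upstream
        ip, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[name]  # stale: drop it and treat as a miss
            return None
        return ip


# Usage with a fake clock (values are illustrative).
now = [0.0]
cache = TTLCache(clock=lambda: now[0])
cache.put("www.example.com", "203.0.113.10", ttl=300)
now[0] = 299.0
fresh = cache.get("www.example.com")    # still within the TTL
now[0] = 300.0
expired = cache.get("www.example.com")  # TTL has elapsed
```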

9. Remote Procedure Call (RPC)

Remote Procedure Call (RPC) allows a program on one device to request a service from a program on another device without having to understand the details of the network. It is used for interprocess communication in client-server based applications.

The goal of RPC is to simplify the development of distributed applications by abstracting the complexity of communication between processes on different machines.

RPC works on a client-server model, where the requesting program is the client and the service-providing program is the server. It uses either the Transmission Control Protocol (TCP) or the User Datagram Protocol (UDP) to carry messages between the communicating programs.
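Python's standard library includes XML-RPC, a simple RPC mechanism that runs over HTTP on top of TCP, which makes it a convenient way to see the client-server model in action. This is one concrete instance only (frameworks such as gRPC use different encodings and transports), and the `add` procedure is a made-up example. Both server and client run in one script on the loopback interface.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a, b):
    """The remote procedure: it executes inside the server."""
    return a + b


# Server side: port 0 asks the OS for any free port on 127.0.0.1.
server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
server.register_function(add, "add")
host, port = server.server_address
threading.Thread(target=server.serve_forever, daemon=True).start()

# Client side: the proxy makes the remote call look like a local function call.
with ServerProxy(f"http://{host}:{port}/") as proxy:
    result = proxy.add(2, 3)  # arguments are encoded as XML and sent over HTTP/TCP

server.shutdown()
server.server_close()
print(result)  # 5
```

The client never opens a socket or parses a reply itself; the proxy hides that work, which is the abstraction RPC is meant to provide.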

RPC has several advantages: it can improve performance by omitting many protocol layers, and it minimizes code rewriting or redevelopment because remote calls look much like local ones. However, some RPC systems are less suited to wide-area networks, where latency and partial failures are harder to hide behind a local-looking call, and many support only transports from the TCP/IP suite.

RPC is a fundamental building block for developing distributed systems. It enables the development of applications that can span multiple machines or nodes in a network, allowing for better resource utilization and scalability.

10. Dynamic Host Configuration Protocol (DHCP)

The Dynamic Host Configuration Protocol (DHCP) is a network protocol used to automate the process of assigning and configuring IP addresses and other network parameters to devices on a network. DHCP eliminates the need for manual configuration of network settings, making it easier to manage and scale networks.

DHCP dynamically assigns IP addresses to devices on a network. When a device (a client) joins the network or requests an IP address lease renewal, it sends a DHCP request to the DHCP server. The server responds with an available IP address and other configuration parameters.
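The lease behaviour described above can be sketched as a small address pool. Assumptions: the class `LeasePool`, the address range, and the MAC addresses are hypothetical, and one `request` call stands in for the four-message exchange a real DHCP client and server perform (DHCPDISCOVER, DHCPOFFER, DHCPREQUEST, DHCPACK).

```python
import ipaddress


class LeasePool:
    """Hand out IPv4 addresses from a fixed range and track lease expiry."""

    def __init__(self, first, last, lease_seconds):
        start = int(ipaddress.IPv4Address(first))
        end = int(ipaddress.IPv4Address(last))
        self.free = [str(ipaddress.IPv4Address(n)) for n in range(start, end + 1)]
        self.lease_seconds = lease_seconds
        self.leases = {}  # client MAC address -> (ip, expiry time)

    def request(self, mac, now):
        """Grant a new lease, or renew an existing one, for this client."""
        if mac in self.leases:
            ip, _ = self.leases[mac]  # renewal keeps the same address
        elif self.free:
            ip = self.free.pop(0)
        else:
            raise RuntimeError("address pool exhausted")
        self.leases[mac] = (ip, now + self.lease_seconds)
        return self.leases[mac]

    def expire(self, now):
        """Return addresses whose leases have run out to the free pool."""
        for mac, (ip, expires_at) in list(self.leases.items()):
            if now >= expires_at:
                del self.leases[mac]
                self.free.append(ip)


pool = LeasePool("192.168.1.100", "192.168.1.101", lease_seconds=3600)
print(pool.request("aa:bb:cc:00:00:01", now=0))     # ('192.168.1.100', 3600)
print(pool.request("aa:bb:cc:00:00:01", now=1800))  # renewed: ('192.168.1.100', 5400)
```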

In addition to IP addresses, DHCP can provide various configuration information to clients, including subnet masks, default gateways, DNS (Domain Name System) servers, and other network-related settings. This automates the configuration process for devices joining the network.
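The extra settings a DHCP server hands out can be derived and checked from the subnet definition. A minimal sketch with hypothetical values (192.168.1.0/24, gateway 192.168.1.1, DNS server 192.168.1.53); the function `build_dhcp_config` is illustrative and does not encode real DHCP option messages.

```python
import ipaddress


def build_dhcp_config(network, gateway, dns_servers):
    """Bundle the per-subnet settings a DHCP server could give to clients."""
    net = ipaddress.IPv4Network(network)
    gw = ipaddress.IPv4Address(gateway)
    if gw not in net:
        raise ValueError(f"gateway {gw} is outside {net}")
    return {
        "subnet_mask": str(net.netmask),  # derived from the prefix length
        "default_gateway": str(gw),
        "dns_servers": [str(ipaddress.ip_address(s)) for s in dns_servers],
    }


print(build_dhcp_config("192.168.1.0/24", "192.168.1.1", ["192.168.1.53"]))
```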

The DHCP process involves at least one DHCP server and one DHCP client. The server is responsible for managing and allocating IP addresses, while the client is the device requesting network configuration information. Networks may have multiple DHCP servers for redundancy and load distribution.

Challenges with Network Protocols

While network protocols play a crucial role in enabling communication and data exchange in computer networks, there are several challenges associated with their design, implementation, and usage.

- Handling large amounts of data, traffic, and devices can degrade protocol performance.

- Some network protocols designed for specialized applications add significant overhead, such as extra headers or control messages, which consumes network resources and reduces performance.

- Achieving fault tolerance and reliable data transfer in different network conditions can be challenging.

- Sometimes, different systems may implement protocols differently or support different versions of protocols. This can lead to compatibility issues.

- Security is a major challenge with network protocols. Threats such as eavesdropping, man-in-the-middle attacks, and packet sniffing can compromise the confidentiality and integrity of transmitted data. Designing network protocols with robust security features, implementing encryption, and regularly updating security measures are essential to mitigate these challenges.

- As networks grow in size and complexity, scalability becomes a challenge. Some network protocols may struggle to handle a large number of devices or users, leading to congestion, reduced performance, and increased latency. Scalability challenges often require protocol enhancements or the adoption of more scalable alternatives.
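The overhead challenge listed above can be quantified with simple arithmetic. This sketch uses the minimum header sizes without options (IPv4: 20 bytes, TCP: 20 bytes, UDP: 8 bytes) and ignores link-layer framing, so real overhead is somewhat higher; the function name `overhead_percent` is hypothetical.

```python
# Minimum header sizes in bytes, without options.
IPV4_HEADER = 20
TCP_HEADER = 20
UDP_HEADER = 8


def overhead_percent(payload_bytes, header_bytes):
    """Percentage of a packet spent on headers instead of application data."""
    return 100 * header_bytes / (payload_bytes + header_bytes)


for payload in (10, 100, 1400):
    tcp = overhead_percent(payload, IPV4_HEADER + TCP_HEADER)
    udp = overhead_percent(payload, IPV4_HEADER + UDP_HEADER)
    print(f"{payload:>4} B payload: TCP/IPv4 {tcp:4.1f}%, UDP/IPv4 {udp:4.1f}%")
```

With a 10-byte payload, TCP/IPv4 headers make up 80% of each packet; at 1,400 bytes the share drops below 3%. Protocols that send many small messages, or that add headers of their own, feel this cost the most.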
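For the reliability challenge listed above, a common technique is acknowledgement plus retransmission. The sketch below uses stop-and-wait, the simplest form; TCP itself uses sliding windows and adaptive timeouts. The `LossyChannel` class, which deterministically drops the first copy of every packet, is a made-up stand-in for an unreliable network.

```python
class LossyChannel:
    """Simulated link that drops the first transmission of every packet."""

    def __init__(self):
        self.attempts = {}   # sequence number -> transmissions seen so far
        self.delivered = []  # payloads that reached the receiver, in order

    def send(self, seq, payload):
        self.attempts[seq] = self.attempts.get(seq, 0) + 1
        if self.attempts[seq] == 1:
            return False     # packet lost, so no acknowledgement comes back
        if seq == len(self.delivered):
            self.delivered.append(payload)  # accept in order, ignore duplicates
        return True          # acknowledgement for this sequence number


def send_reliably(channel, messages, max_retries=3):
    """Stop-and-wait: resend each message until it is acknowledged."""
    for seq, payload in enumerate(messages):
        for _attempt in range(max_retries + 1):
            if channel.send(seq, payload):
                break
        else:
            raise TimeoutError(f"message {seq} was never acknowledged")
    return channel.delivered


channel = LossyChannel()
print(send_reliably(channel, ["a", "b", "c"]))  # ['a', 'b', 'c']
```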
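For the security challenge listed above, one building block is a message authentication code, which lets the receiver detect data altered in transit (for example by a man-in-the-middle). This sketch uses HMAC-SHA256 from Python's standard `hmac` and `hashlib` modules. It protects integrity only, not confidentiality, which still requires encryption such as TLS; the hard-coded `SHARED_KEY` is a placeholder, since real keys come from a key exchange or a secret store.

```python
import hashlib
import hmac

SHARED_KEY = b"example-shared-secret"  # placeholder key, for illustration only
TAG_SIZE = 32                          # SHA-256 digests are 32 bytes


def sign(message: bytes) -> bytes:
    """Append an HMAC-SHA256 tag so the receiver can detect tampering."""
    tag = hmac.new(SHARED_KEY, message, hashlib.sha256).digest()
    return message + tag


def verify(packet: bytes) -> bytes:
    """Return the message if its tag is valid; raise ValueError otherwise."""
    message, tag = packet[:-TAG_SIZE], packet[-TAG_SIZE:]
    expected = hmac.new(SHARED_KEY, message, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):  # constant-time comparison
        raise ValueError("integrity check failed")
    return message


packet = sign(b"transfer 100")
print(verify(packet))  # b'transfer 100'
tampered = b"transfer 900" + packet[-TAG_SIZE:]  # body changed, old tag kept
try:
    verify(tampered)
except ValueError as err:
    print(err)  # integrity check failed
```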

That’s all about network protocols in system design. If you have any queries or feedback, please write to us at contact@waytoeasylearn.com. Enjoy learning, and enjoy system design!