Service Networking

In this tutorial, we are going to discuss service networking. In the previous tutorials we discussed pod networking: how bridge networks are created within each node, how pods get a namespace created for them, how interfaces are attached to those namespaces, and how pods get an IP address assigned to them within the subnet assigned to that node.

We also saw how, through routes or other overlay techniques, we can get the pods on different nodes to talk to each other, forming a large virtual network where all pods can reach each other.

Now, you would rarely configure your pods to communicate directly with each other. If you want a pod to access services hosted on another pod, you would always use a service.

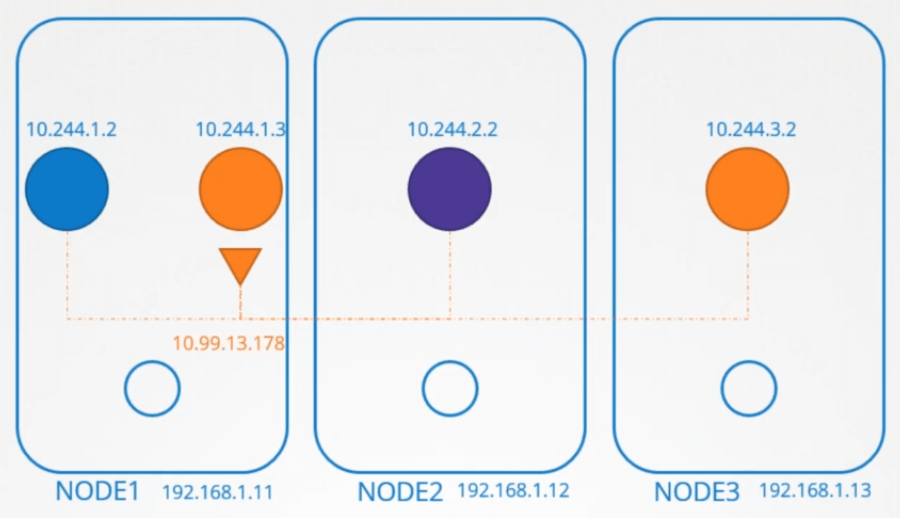

Let’s quickly recap the different kinds of services. To make the orange pod accessible to the blue pod, we create an orange service. The orange service gets an IP address and a name assigned to it.

The blue pod can now access the orange pod through the orange service’s IP or its name. We’ll talk about name resolution in the upcoming tutorials.

For now, let’s just focus on IP addresses. The blue and orange pods are on the same node. What about access from the other pods on other nodes?

ClusterIP

When a service is created, it is accessible from all pods in the cluster, irrespective of which nodes the pods are on. While a pod is hosted on a node, a service is hosted across the cluster. It is not bound to a specific node.

But remember, the service is only accessible from within the cluster. This type of service is known as ClusterIP.

If the orange pod were hosting a database application that is only to be accessed from within the cluster, this type of service works just fine.

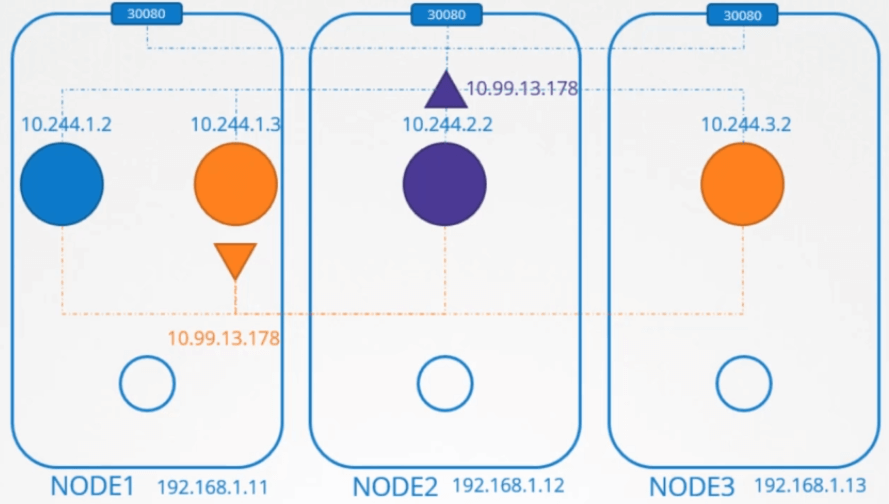

NodePort

Say, for instance, the purple pod was hosting a web application. To make the application on the pod accessible outside the cluster, we create another service of type NodePort.

This service also gets an IP address assigned to it and works just like ClusterIP, in that all the other pods can access the service using its IP.

But in addition, it also exposes the application on a port on all nodes in the cluster. That way, external users or applications have access to the service.
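To make the "port on all nodes" idea concrete, here is a minimal Python sketch. The node IPs and the port number are made-up values for illustration only; the one fact taken from Kubernetes itself is that node ports are allocated from the range 30000-32767 by default.

```python
# Hypothetical values for illustration only; none come from a real cluster.
NODE_IPS = ["192.168.1.11", "192.168.1.12", "192.168.1.13"]  # assumed node addresses
NODE_PORT = 30080  # assumed nodePort, inside the default 30000-32767 range


def external_endpoints(node_ips, node_port):
    """Return every node-IP:port pair on which a NodePort service is exposed."""
    return [f"{ip}:{node_port}" for ip in node_ips]


endpoints = external_endpoints(NODE_IPS, NODE_PORT)
for endpoint in endpoints:
    print(endpoint)
```

An external client could use any one of these endpoints, because the same port is opened on every node regardless of where the pod actually runs.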

So that’s the topic of our discussion for this tutorial. Our focus is more on services and less on pods.

Services

How do services get these IP addresses, and how are they made available across all the nodes in the cluster? How is a service made available to external users through a port on each node? Who does that, how, and where can we see it?

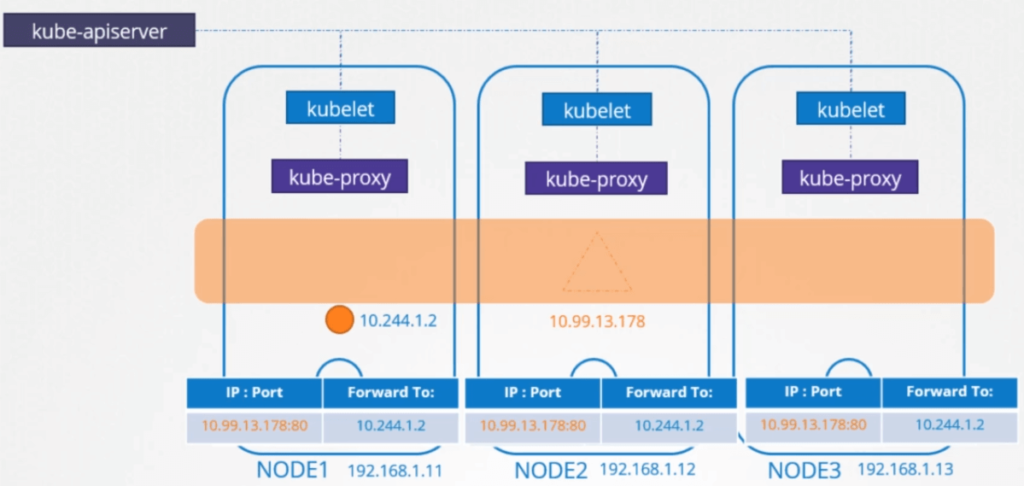

So let’s get started. Let’s start with a clean slate. We have a three-node cluster with no pods or services yet. We know that every Kubernetes node runs a kubelet process, which is responsible for creating pods.

The kubelet on each node watches for changes in the cluster through the kube-apiserver, and every time a new pod is to be created, it creates the pod on its node.

It then invokes the CNI plugin to configure networking for that pod. Similarly, each node runs another component known as kube-proxy.

kube-proxy watches for changes in the cluster through the kube-apiserver, and every time a new service is to be created, kube-proxy gets into action.

Unlike pods, services are not created on each node or assigned to each node. Services are a cluster-wide concept. They exist across all the nodes in the cluster.

As a matter of fact, they don’t exist at all. There is no server or service really listening on the IP of the service. We have seen that pods have containers, and containers have namespaces with interfaces and IPs assigned to those interfaces.

With services, nothing like that exists. There are no processes, namespaces, or interfaces for a service. It’s just a virtual object. Then how do services get an IP address, and how are we able to access the application on the pod through a service?

When we create a service object in Kubernetes, it is assigned an IP address from a pre-defined range.

The kube-proxy component running on each node gets that IP address and creates forwarding rules on its node, saying that any traffic coming to this IP, the IP of the service, should go to the IP of the pod.

Once that is in place, whenever a pod tries to reach the IP of the service, the traffic is forwarded to the pod’s IP address, which is accessible from any node in the cluster. Now remember, it’s not just the IP; it’s an IP and port combination.
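As a rough mental model, the forwarding rules behave like a lookup table keyed on the IP and port together. The sketch below is only a conceptual stand-in written in Python, not how kube-proxy is implemented; the table, the function name, and the addresses are illustrative assumptions.

```python
# Conceptual model only: (service IP, port) -> (pod IP, port).
# The real rules live in the kernel; this dict is a hypothetical stand-in.
FORWARDING_RULES = {
    ("10.103.132.104", 3306): ("10.244.1.2", 3306),
}


def forward(dest_ip, dest_port):
    """Rewrite a service destination to its backing pod, or leave it unchanged."""
    return FORWARDING_RULES.get((dest_ip, dest_port), (dest_ip, dest_port))


# The IP and port both match, so the destination is rewritten to the pod.
print(forward("10.103.132.104", 3306))

# Same IP but a different port: no rule matches, so nothing is rewritten.
print(forward("10.103.132.104", 8080))
```

The second call shows why the port matters: traffic to the service IP on a port the service does not define is not forwarded anywhere.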

How kube-proxy Creates Rules

Whenever services are created or deleted, the kube-proxy component creates or deletes these rules.
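The create-and-delete behaviour can be sketched as a small event handler. This is a simplified Python illustration under stated assumptions: the `handle_event` function and the dictionary layout are hypothetical, and only the event type names `ADDED` and `DELETED` mirror what the Kubernetes watch API reports.

```python
# Hypothetical in-memory rule table standing in for the node's real rules.
rules = {}


def handle_event(event_type, service):
    """Add or remove the forwarding rule for a service when it changes."""
    key = (service["cluster_ip"], service["port"])
    if event_type == "ADDED":
        rules[key] = service["pod_endpoint"]
    elif event_type == "DELETED":
        # pop with a default so deleting an unknown service is harmless
        rules.pop(key, None)


# Illustrative service description (field names are assumptions).
db_service = {
    "cluster_ip": "10.103.132.104",
    "port": 3306,
    "pod_endpoint": ("10.244.1.2", 3306),
}

handle_event("ADDED", db_service)
print(rules)  # one rule for the service

handle_event("DELETED", db_service)
print(rules)  # the rule is gone again
```

In a real cluster every node runs its own kube-proxy, so each node ends up with its own copy of the same rules.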

So how are these rules created? kube-proxy supports different modes: userspace, where kube-proxy listens on a port for each service and proxies connections to the pods; ipvs, where it creates IPVS rules; and the third and default option, the one familiar to us, iptables.

The proxy mode can be set using the --proxy-mode option while configuring the kube-proxy service. If it is not set, it defaults to iptables. So we’ll see how iptables rules are configured by kube-proxy and how you can view them on the nodes.

$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

db 1/1 Running 0 1m 10.244.1.2 node-1

We have a pod named db deployed on node-1. It has the IP address 10.244.1.2. We create a service of type ClusterIP to make this pod available within the cluster.

Get the Service

When the service is created, Kubernetes assigns an IP address to it; here it is 10.103.132.104. The address comes from the range specified in the kube-apiserver option --service-cluster-ip-range, which defaults to 10.0.0.0/24.

$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

db-service ClusterIP 10.103.132.104 <none> 3306/TCP 12h

Check the Service Cluster IP Range

In my case, if I look at my kube-apiserver options:

$ ps aux | grep kube-apiserver

kube-apiserver --authorization-mode=Node,RBAC --service-cluster-ip-range=10.96.0.0/12

I see it is set to 10.96.0.0/12. That gives my services IPs anywhere from 10.96.0.0 to 10.111.255.255. A related point is worth mentioning here.

When I set up my pod networking, I provided a pod network CIDR range of 10.244.0.0/16, which gives my pods IP addresses from 10.244.0.0 to 10.244.255.255.

The reason I bring this up is that whatever ranges are specified for these two networks, they shouldn’t overlap, which they don’t in this case.

Each of them should have its own dedicated range of IPs to work with. There should never be a case where a pod and a service are assigned the same IP address.
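The address arithmetic above can be double-checked with Python’s standard ipaddress module. This is just a quick verification sketch using the ranges and the service IP from this tutorial; the variable names are my own.

```python
import ipaddress

# Ranges taken from the tutorial's cluster.
service_range = ipaddress.ip_network("10.96.0.0/12")
pod_range = ipaddress.ip_network("10.244.0.0/16")

# First and last address of each range.
service_first = service_range.network_address
service_last = service_range.broadcast_address
pod_first = pod_range.network_address
pod_last = pod_range.broadcast_address
print("services:", service_first, "to", service_last)
print("pods:    ", pod_first, "to", pod_last)

# The IP assigned to db-service should fall inside the service range.
service_ip = ipaddress.ip_address("10.103.132.104")
print("service IP in range:", service_ip in service_range)

# The two ranges must not share any address.
ranges_overlap = service_range.overlaps(pod_range)
print("ranges overlap:", ranges_overlap)
```

If you pick your own CIDRs, running the same overlap check before building the cluster is a cheap way to catch a conflicting pair of ranges.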

So getting back to services. That’s how my service got an IP address of 10.103.132.104. You can see the rules created by kube-proxy in the iptables nat table output.

$ iptables -L -t nat | grep db-service

Search for the name of the service, as all rules created by kube-proxy carry a comment with the name of the service.