Cluster Networking

In this tutorial, we will discuss cluster networking: the network configuration required on the master and worker nodes in a Kubernetes cluster.

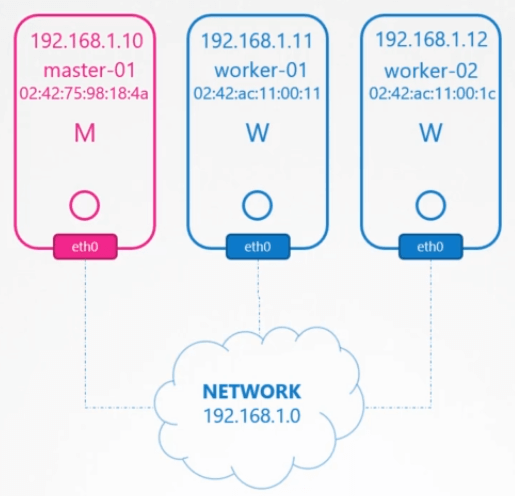

A Kubernetes cluster consists of master and worker nodes. Each node must have at least one interface connected to a network, and each interface must have an IP address configured.

Each host must have a unique hostname and a unique MAC address. Check this especially if you created the VMs by cloning existing ones. Some ports also need to be opened.
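The uniqueness requirement above can be checked with a short script. This is a minimal sketch, assuming you have already collected each node's hostname and MAC address into a list; the `find_duplicates` helper and the inventory values are hypothetical and only illustrate the idea.

```python
from collections import Counter


def find_duplicates(nodes):
    """Return hostnames and MAC addresses that appear on more than one node."""
    # Normalise case and separators so "AA-BB" and "aa:bb" compare as equal.
    hostnames = Counter(n["hostname"].lower() for n in nodes)
    macs = Counter(n["mac"].lower().replace("-", ":") for n in nodes)
    return {
        "hostnames": sorted(h for h, c in hostnames.items() if c > 1),
        "macs": sorted(m for m, c in macs.items() if c > 1),
    }


# Hypothetical inventory: worker-2 was cloned from worker-1 and kept its MAC.
nodes = [
    {"hostname": "master-1", "mac": "52:54:00:aa:bb:01"},
    {"hostname": "worker-1", "mac": "52:54:00:aa:bb:02"},
    {"hostname": "worker-2", "mac": "52:54:00:AA:BB:02"},
]

print(find_duplicates(nodes))
```

An empty list under both keys means every node in the inventory is unique; anything else points at a clone that still needs a new hostname or MAC address.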

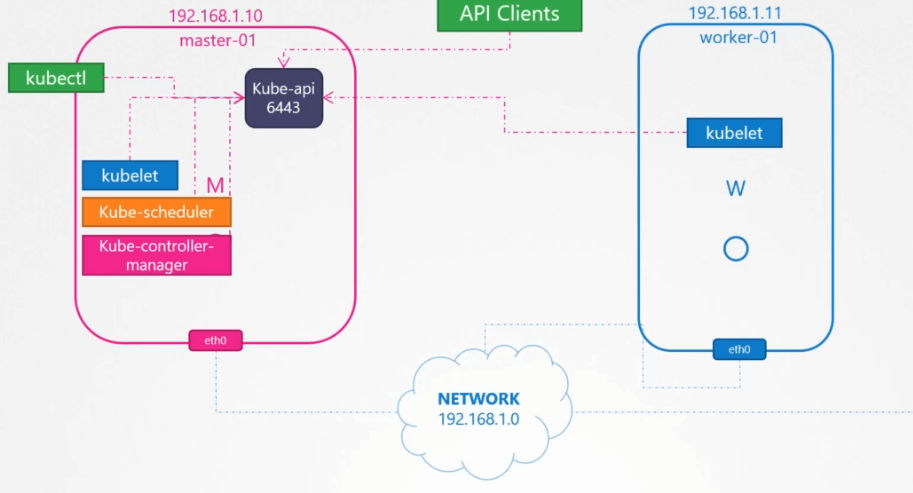

These ports are used by the various control plane components. The master should accept connections on port 6443 for the API server.

The worker nodes, the kubectl tool, external users, and all other control plane components access the kube-apiserver through this port.

The kubelets on the master and worker nodes listen on port 10250. Yes, in case we didn't discuss this, the kubelet can be present on the master node as well.

The kube-scheduler requires port 10251 to be open, and the kube-controller-manager requires port 10252. (Recent Kubernetes releases list 10259 and 10257 for these two components instead, so check the documentation for your version.) The worker nodes expose services for external access on ports 30000 to 32767, so these should be open as well. Finally, the etcd server listens on port 2379.

If you have multiple master nodes, all of these ports need to be open on those as well. You also need an additional port, 2380, open so that the etcd members can communicate with each other.
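To keep the port list in one place, it can help to write it down as data. The sketch below simply restates the ports from this tutorial (with 10250 as the kubelet's default port); the `required_ports` helper and its role names are hypothetical, and the numbers should be verified against the documentation for your Kubernetes version.

```python
# Port numbers as listed in this tutorial; verify them against the
# Kubernetes documentation for your version before relying on them.
MASTER_PORTS = {
    "kube-apiserver": "6443",
    "kubelet": "10250",  # kubelet default port
    "kube-scheduler": "10251",
    "kube-controller-manager": "10252",
    "etcd (client)": "2379",
}
WORKER_PORTS = {
    "kubelet": "10250",
    "NodePort services": "30000-32767",
}
ETCD_PEER_PORT = "2380"  # only needed when there are multiple masters


def required_ports(role, multi_master=False):
    """Return {component: port or port range} for a node role (hypothetical helper)."""
    if role == "master":
        ports = dict(MASTER_PORTS)
        if multi_master:
            ports["etcd (peer)"] = ETCD_PEER_PORT
        return ports
    if role == "worker":
        return dict(WORKER_PORTS)
    raise ValueError(f"unknown role: {role!r}")


# Print the ports a master needs in a multi-master cluster.
for component, port in required_ports("master", multi_master=True).items():
    print(f"{component:<26}{port}")
```

A table like this can then feed whatever generates your firewall or security group rules, so the list only has to be corrected in one place.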

Documentation

The list of ports to be opened is also available in the Kubernetes documentation.

So consider these when you set up networking for your nodes, whether in your firewalls, iptables rules, or network security groups in a cloud environment such as GCP, Azure, or AWS. And if things are not working, this is one place to look while you are investigating.
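When investigating, a quick TCP connection test from another machine shows whether a port is reachable through the firewall. This is a minimal sketch using only the Python standard library; the `port_is_open` and `check_ports` helpers are hypothetical names. A successful connection only shows that something accepts connections on the port, not that the component behind it is healthy.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_ports(host, ports, timeout=1.0):
    """Map each port to whether it accepted a TCP connection."""
    return {port: port_is_open(host, port, timeout) for port in ports}
```

For example, `check_ports("192.168.1.10", [6443, 10250])` would test the API server and kubelet ports on a master, where the address is a placeholder for one of your own nodes. A `False` result for a port that should be open suggests checking the firewall or security group rules between the two machines.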