Docker Networking

In this tutorial, we will look at networking in Docker. We will start with the basic networking options and then relate them to the concepts around network namespaces.

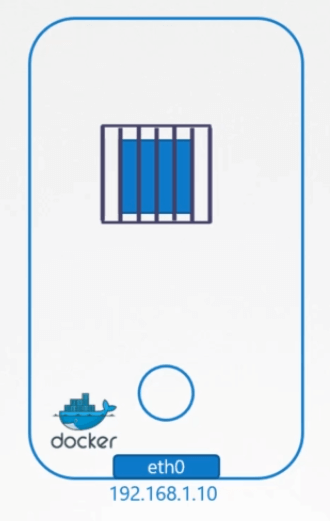

Let’s start with a single Docker host: a server with Docker installed on it. It has an Ethernet interface, eth0, that connects to the local network with the IP address 192.168.1.10.

When you run a container, you have different networking options to choose from. When you install Docker, it creates three networks automatically:

1. None

With the none network, the Docker container is not attached to any network. The container cannot reach the outside world, and no one from the outside world can reach the container.

If you run multiple containers this way, none of them is part of any network, so they cannot talk to each other or to the outside world.

2. Host

With the host network, the container is attached to the host’s network. There is no network isolation between the host and the container.

If you deploy a web application listening on port 80 in the container, the web application is available on port 80 on the host without any additional port mapping.

If you try to run another instance of the same container that listens on the same port, it won’t work, because both instances share the host’s network and the port is already in use by the first one.
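The conflict is ordinary socket behaviour rather than anything Docker-specific: with the host network the container shares the host’s network stack, so a second listener on an already-bound port is refused. The following minimal Python sketch reproduces this with two plain sockets on one machine, with no Docker involved; binding to the loopback address and letting the OS pick a free port are assumptions made so it runs anywhere.

```python
import errno
import socket


def second_listener_fails(host: str = "127.0.0.1") -> bool:
    """Return True if a second socket cannot bind to a port that a listener already holds."""
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind((host, 0))           # port 0: let the OS pick a free port
        first.listen()
        port = first.getsockname()[1]   # the port the "first instance" now owns
        try:
            second.bind((host, port))   # the "second instance" asks for the same port
        except OSError as exc:
            return exc.errno == errno.EADDRINUSE
        return False
    finally:
        second.close()
        first.close()


print(second_listener_fails())  # True on a typical Linux host
```

Two containers on the bridge network do not hit this conflict, because each gets its own network namespace and therefore its own set of ports.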

3. Bridge

With the bridge network, Docker creates an internal private network that the Docker host and the containers attach to. By default the network has the address 172.17.0.0, and each device connecting to it gets its own private IP address on this network.
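To make the addressing concrete, here is a small Python sketch using the standard ipaddress module. It assumes a 172.17.0.0/16 network (the /16 mask matches the container address shown later in this tutorial), that the first usable address goes to the host side of the bridge, and that containers simply receive the following addresses in order. Docker’s own address management is more involved than this.

```python
import ipaddress


def allocate(subnet: str, count: int) -> tuple[str, list[str]]:
    """Return a gateway address and `count` container addresses from `subnet`.

    Simplifying assumption: the first usable host address goes to the bridge
    (the gateway) and containers get the next addresses in order.
    """
    hosts = ipaddress.ip_network(subnet).hosts()  # iterator over usable addresses
    gateway = str(next(hosts))
    containers = [str(next(hosts)) for _ in range(count)]
    return gateway, containers


gateway, containers = allocate("172.17.0.0/16", 2)
print(gateway)     # 172.17.0.1
print(containers)  # ['172.17.0.2', '172.17.0.3']
```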

This is the network we are most interested in, so we will take a deeper look at how exactly Docker creates and manages it.

When Docker is installed on the host, it creates an internal private network called bridge by default. You can see this when you run the following command:

$ docker network ls

NETWORK ID     NAME      DRIVER    SCOPE

4974cba36c8e   bridge    bridge    local

0e7b30a6c996   host      host      local

a4b19b17d2c5   none      null      local

Docker calls the network “bridge”, but on the host the network is created with the name docker0. You can see this in the output of the ip link command.

$ ip link

or

$ ip link show docker0

3: docker0: mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:cf:c3:df:f5 brd ff:ff:ff:ff:ff:ff

Internally, Docker uses a technique similar to the one we discussed in the tutorial on namespaces: it does the equivalent of running the ip link add command with the type set to bridge.

$ ip link add docker0 type bridge

So remember, the name bridge in the docker network ls output refers to the name docker0 on the host. They are one and the same thing.
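If you want to reproduce this by hand, the usual iproute2 sequence is to create the bridge, give it an address and bring it up. The Python sketch below only builds and prints those commands; the bridge name demo0 and the address 10.200.0.1/24 are hypothetical values chosen so they do not clash with the real docker0.

```python
import shlex


def bridge_setup_commands(name: str, gateway_cidr: str) -> list[list[str]]:
    """Build the ip(8) commands that create a bridge, address it and bring it up."""
    return [
        ["ip", "link", "add", name, "type", "bridge"],     # like Docker's docker0
        ["ip", "addr", "add", gateway_cidr, "dev", name],  # host-side IP on the bridge
        ["ip", "link", "set", name, "up"],                 # a new bridge starts out down
    ]


# Hypothetical name and subnet, chosen not to clash with the real docker0.
for cmd in bridge_setup_commands("demo0", "10.200.0.1/24"):
    print(shlex.join(cmd))
```

The printed commands need root, so try them on a test machine rather than a host you care about.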

Also note that the interface is currently down (state DOWN in the output above). Remember that we said the bridge network is like an interface to the host, but a switch to the namespaces or containers within the host.

Since docker0 is an interface on the host, it is assigned an IP address, 172.17.0.1. You can see this in the output of the following command.

$ ip addr

or

$ ip addr show docker0

3: docker0: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:cf:c3:df:f5 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/24 brd 172.17.0.255 scope global docker0

valid_lft forever preferred_lft forever

Whenever a container is created, Docker creates a network namespace for it, just as we created network namespaces in the previous tutorial.

List the Network Namespace

Run the following command to list the namespaces.

$ ip netns

b31657505bfb

Note that a minor workaround is needed before the ip netns command lists the namespaces created by Docker. Here, the namespace’s name starts with b3165. You can see the namespace associated with each container in the output of the docker inspect command.
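The usual reason a workaround is needed is that Docker does not keep its namespace handles in /var/run/netns, the directory ip netns reads. The sketch below assumes they live in /var/run/docker/netns and that docker inspect reports each container’s handle in the NetworkSettings.SandboxKey field, which is what Docker on Linux typically shows; verify both on your own host. Reading that directory usually requires root.

```python
from pathlib import Path

# Assumed default location of Docker's namespace handles on Linux.
DOCKER_NETNS_DIR = "/var/run/docker/netns"


def netns_name_from_sandbox_key(sandbox_key: str) -> str:
    """Turn a SandboxKey path from `docker inspect` into the namespace name."""
    return Path(sandbox_key).name


def docker_netns_names(netns_dir: str = DOCKER_NETNS_DIR) -> list[str]:
    """List namespace handles in `netns_dir`; an empty list if the directory is missing."""
    path = Path(netns_dir)
    if not path.is_dir():
        return []
    return sorted(entry.name for entry in path.iterdir())


print(netns_name_from_sandbox_key("/var/run/docker/netns/b31657505bfb"))  # b31657505bfb
```

Run as root on a Docker host, docker_netns_names() should return names like the one listed above.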

Before we look at how Docker attaches the container, or rather its network namespace, to the bridge network, a note on terminology: for the remainder of this tutorial, container and network namespace mean the same thing. When I say container, I’m referring to the network namespace created by Docker for that container.

Attach the container to the bridge

So how does Docker attach the container to the bridge? As we did before, it creates a virtual cable with an interface at each end. Let’s find out what Docker has created here.

If you run the ip link command on the Docker host, you see one end of the cable, which is attached to the local bridge docker0.

$ ip link

.....

.....

4: docker0: mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:c8:3a:ea:67 brd ff:ff:ff:ff:ff:ff

5: vetha3e33331@if3: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether e2:b2:ad:c9:8b:98 brd ff:ff:ff:ff:ff:ff link-netnsid 0

If you run the same command again, this time with the -n option followed by the namespace, it lists the other end of the cable inside the container’s namespace.

$ ip -n 04acb487a641 link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

3: eth0@if8: mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether c6:f3:ca:12:5e:74 brd ff:ff:ff:ff:ff:ff link-netnsid 0

The interface also gets an IP address on the network. You can view it by running the ip addr command within the container’s namespace. Here the container has been assigned 172.17.0.3.

$ ip -n 04acb487a641 addr

3: eth0@if8: mtu 1500 qdisc noqueue state UP group default

link/ether c6:f3:ca:12:5e:74 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

You can also find this address by attaching to the container and checking its IP address from inside.
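The wiring described in this section can be reproduced by hand with iproute2, which is roughly what Docker does for each container. The sketch below only builds the command list; the namespace demo-ns, the interfaces veth-host and veth-ctr, the bridge demo0 and the address 10.200.0.2/24 are hypothetical names, and it assumes the bridge already exists and is up.

```python
import shlex


def attach_commands(bridge: str, netns: str, host_if: str, ns_if: str,
                    addr_cidr: str) -> list[list[str]]:
    """Build ip(8) commands that connect a network namespace to a bridge with a veth pair."""
    return [
        ["ip", "netns", "add", netns],                                # the "container"
        ["ip", "link", "add", host_if, "type", "veth",
         "peer", "name", ns_if],                                      # the virtual cable
        ["ip", "link", "set", ns_if, "netns", netns],                 # one end into the namespace
        ["ip", "link", "set", host_if, "master", bridge],             # other end onto the bridge
        ["ip", "-n", netns, "addr", "add", addr_cidr, "dev", ns_if],  # IP inside the namespace
        ["ip", "-n", netns, "link", "set", ns_if, "up"],
        ["ip", "link", "set", host_if, "up"],
    ]


for cmd in attach_commands("demo0", "demo-ns", "veth-host", "veth-ctr", "10.200.0.2/24"):
    print(shlex.join(cmd))
```

The namespace-side end is created with a temporary name in the host namespace first, which is why it is not called eth0 there: that name would usually collide with the host’s own interface.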

Docker follows the same procedure every time a new container is created: it creates a namespace, creates a pair of interfaces, and attaches one end to the container and the other end to the bridge. The interface pairs can be identified by their numbers.

Consecutive odd and even numbers form a pair: 9 and 10 are one pair, 7 and 8 are another, and 11 and 12 are a third. The containers are now all part of the network, so they can all communicate with each other.
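The numbering rule of thumb can be checked more directly: in ip link output, the @ifN suffix on an interface name (vetha3e33331@if3 above) gives the index of the interface at the other end of the veth pair. The Python sketch below parses that suffix and looks up the matching interface in the namespace’s listing. The Link type and the helper names are made up for the example, and the input strings are copied from the outputs shown earlier.

```python
import re
from typing import NamedTuple, Optional


class Link(NamedTuple):
    index: int                 # interface index (the number before the colon)
    name: str
    peer_index: Optional[int]  # from the "@ifN" suffix, if there is one


# Matches the start of a line such as "5: vetha3e33331@if3: mtu 1500 ..."
_LINK_RE = re.compile(r"^\s*(\d+):\s+([^\s:@]+)(?:@if(\d+))?:")


def parse_links(output: str) -> list[Link]:
    """Extract index, name and peer index from each interface line of `ip link` output."""
    links = []
    for line in output.splitlines():
        match = _LINK_RE.match(line)
        if match:
            peer = int(match.group(3)) if match.group(3) else None
            links.append(Link(int(match.group(1)), match.group(2), peer))
    return links


def find_peer(host_link: Link, namespace_links: list[Link]) -> Optional[Link]:
    """Return the interface in the namespace whose index is the host veth's @ifN."""
    for link in namespace_links:
        if link.index == host_link.peer_index:
            return link
    return None


# Sample lines copied from the outputs shown above.
host_output = """\
4: docker0: mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:c8:3a:ea:67 brd ff:ff:ff:ff:ff:ff
5: vetha3e33331@if3: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default
"""
namespace_output = """\
1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
3: eth0@if8: mtu 1500 qdisc noqueue state UP mode DEFAULT group default
"""

veth = next(link for link in parse_links(host_output) if link.name.startswith("veth"))
print(veth)                                            # Link(index=5, name='vetha3e33331', peer_index=3)
print(find_peer(veth, parse_links(namespace_output)))  # Link(index=3, name='eth0', peer_index=8)
```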

Port Mapping

Let us look at port mapping now. The container we created runs nginx, a web server that serves a web page on port 80. Since our container is on a private network inside the host, only other containers on the same network, or the host itself, can access this web page.

If you use curl with the container’s IP on port 80 from within the Docker host, you will see the web page.

If you try to do the same thing from outside the host, you cannot view the web page. To allow external users to access the applications hosted in containers, Docker provides a port publishing, or port mapping, option.

When you run the container, tell Docker to map port 8080 on the Docker host to port 80 on the container. With that done, you can access the web application using the IP of the Docker host and port 8080.

$ docker run -itd -p 8080:80 nginx

e7387bbb2e2b6cc1d2096a080445a6b83f2faeb30be74c41741fe7891402f6b6

Any traffic to port 8080 on the Docker host is forwarded to port 80 on the container. All of your external users and other applications or services can now use this URL to access the application deployed on the host.

$ curl http://192.168.1.10:8080

Welcome to nginx!

But how does Docker do that? How does it forward traffic from one port to another? What would you do?

Let’s forget about Docker and everything else for a second. The requirement is to forward traffic coming in on one port to another port on the server. We create a NAT rule for that.
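Conceptually, a DNAT rule is a lookup on the destination of each incoming packet. The Python model below only illustrates that idea; it is not how iptables is implemented, and the DnatRule type is a made-up structure. The addresses come from this tutorial: 192.168.1.10 for the Docker host and 172.17.0.3 for the container.

```python
from typing import NamedTuple, Optional


class DnatRule(NamedTuple):
    """Hypothetical stand-in for one DNAT rule on TCP traffic."""
    dport: int                   # destination port to match
    to_port: int                 # port to rewrite it to
    to_ip: Optional[str] = None  # None keeps the original address (port-only rewrite)


def apply_dnat(dst_ip: str, dst_port: int, rules: list[DnatRule]) -> tuple[str, int]:
    """Return the packet's destination after the first matching rule, or unchanged."""
    for rule in rules:
        if rule.dport == dst_port:
            return (rule.to_ip or dst_ip), rule.to_port
    return dst_ip, dst_port


port_only = [DnatRule(dport=8080, to_port=80)]
to_container = [DnatRule(dport=8080, to_port=80, to_ip="172.17.0.3")]

print(apply_dnat("192.168.1.10", 8080, port_only))     # ('192.168.1.10', 80)
print(apply_dnat("192.168.1.10", 8080, to_container))  # ('172.17.0.3', 80)
print(apply_dnat("192.168.1.10", 22, to_container))    # ('192.168.1.10', 22)
```

The first rule corresponds to changing only the port on the same server; the second also swaps in the container’s address, which is the extra step a container behind a bridge needs.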

Configuring iptables NAT rules

Using iptables, we add an entry to the nat table: a rule appended to the PREROUTING chain that changes the destination port from 8080 to 80.

$ iptables \
-t nat \
-A PREROUTING \
-p tcp --dport 8080 \
-j DNAT \
--to-destination :80

Docker does it the same way: it adds the rule to the DOCKER chain and sets the destination to include the container’s IP as well.

$ iptables \
-t nat \
-A DOCKER \
-p tcp --dport 8080 \
-j DNAT \
--to-destination 172.18.0.6:80

You can see the rule Docker creates when you list the rules in iptables.

$ iptables -nvL -t nat
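To connect the docker run -p option with the rule shape above, here is a Python sketch that turns a HOST:CONTAINER[/PROTO] publish spec into an equivalent iptables command for the DOCKER chain. It is a simplification: it handles only that spec form (no host IP), it only builds the command rather than running it, and the rules Docker actually installs may contain extra matches that you will see in the listing.

```python
import shlex


def publish_to_dnat(spec: str, container_ip: str) -> list[str]:
    """Build an iptables DNAT command equivalent to `docker run -p HOST:CONTAINER[/PROTO]`."""
    ports, _, proto = spec.partition("/")
    proto = proto or "tcp"  # docker run -p publishes TCP unless told otherwise
    host_port, sep, container_port = ports.partition(":")
    if not sep or ":" in container_port:
        raise ValueError(f"expected HOST:CONTAINER[/PROTO], got {spec!r}")
    return [
        "iptables", "-t", "nat", "-A", "DOCKER",
        "-p", proto, "--dport", host_port,
        "-j", "DNAT", "--to-destination", f"{container_ip}:{container_port}",
    ]


print(shlex.join(publish_to_dnat("8080:80", "172.17.0.3")))
# iptables -t nat -A DOCKER -p tcp --dport 8080 -j DNAT --to-destination 172.17.0.3:80
```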