Container Storage Interface

In this tutorial, we will take a look at the Container Storage Interface (CSI). In the past, Kubernetes used Docker alone as the container runtime engine, and all the code to work with Docker was embedded in the Kubernetes source code.

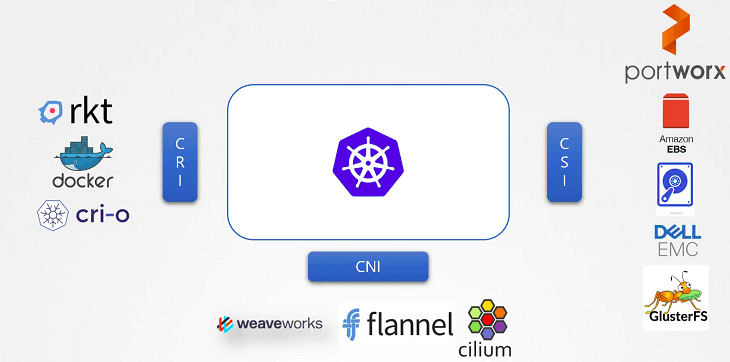

As other container runtimes such as rkt and CRI-O came in, it became important to open up and extend support to different container runtimes without depending on the Kubernetes source code. That is how the Container Runtime Interface came to be.

Container Runtime Interface (CRI)

The Container Runtime Interface is a standard that defines how an orchestration solution like Kubernetes communicates with container runtimes like Docker. If a new container runtime is developed, its developers can simply follow the CRI standard.

The new container runtime would then work with Kubernetes without its developers having to work with the Kubernetes developers or touch the Kubernetes source code.

Similarly, the Container Network Interface was introduced to extend support to different networking solutions such as Weave Net, Flannel, and Cilium.

Container Network Interface (CNI)

With CNI, any new networking vendor can simply develop a plugin based on the CNI standard and make its solution work with Kubernetes.

Container Storage Interface (CSI)

The Container Storage Interface was developed to support multiple storage solutions. With CSI, you can write your own driver so that your own storage works with Kubernetes. Portworx, Amazon EBS, Azure Disk, NetApp, Dell EMC Isilon, XtremIO, Nutanix, Pure Storage, GlusterFS, and others have their own CSI drivers.

Please note that CSI is not a Kubernetes-specific standard. It is meant to be a universal standard that, if implemented, allows any container orchestration tool to work with any storage vendor that has a supported plugin. Currently Kubernetes, Cloud Foundry, and Mesos are on board with CSI.

CSI defines a set of RPCs (remote procedure calls) that the container orchestrator calls. These must be implemented by the storage drivers.

For example, CSI says that when a pod is created and requires a volume, the container orchestrator (in our case Kubernetes) should call the create volume RPC (CreateVolume) and pass a set of details such as the volume name.

The storage driver should implement this RPC, handle the request, provision a new volume on the storage array, and return the result of the operation.

Similarly, the container orchestrator should call the delete volume RPC (DeleteVolume) when the volume is deleted, and the storage driver should implement the code to decommission the volume from the array when that call is made.
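The create and delete flow described above can be sketched in a few lines of Python. This is only an illustration, not a real CSI driver: an actual driver serves these RPCs over gRPC, while the sketch keeps volumes in a dictionary. The class and function names (`InMemoryStorageDriver`, `Volume`, `run_pod_lifecycle`) and the volume name `pvc-demo` are hypothetical. The sketch also assumes both calls are safe to repeat, which is how the CSI specification expects drivers to behave.

```python
import uuid
from dataclasses import dataclass
from typing import Dict


@dataclass
class Volume:
    volume_id: str
    name: str
    capacity_bytes: int


class InMemoryStorageDriver:
    """Hypothetical stand-in for a storage driver (no gRPC, no real array)."""

    def __init__(self) -> None:
        # Pretend "storage array": volumes indexed by the requested name.
        self._volumes_by_name: Dict[str, Volume] = {}

    def create_volume(self, name: str, capacity_bytes: int) -> Volume:
        """Mirrors the create volume RPC: provision a volume and return it."""
        existing = self._volumes_by_name.get(name)
        if existing is not None:
            # Assumption: a repeated call with the same name returns the
            # existing volume instead of provisioning a second one.
            if existing.capacity_bytes != capacity_bytes:
                raise ValueError(f"volume {name!r} exists with a different size")
            return existing
        volume = Volume(str(uuid.uuid4()), name, capacity_bytes)
        self._volumes_by_name[name] = volume
        return volume

    def delete_volume(self, volume_id: str) -> None:
        """Mirrors the delete volume RPC: decommission the volume."""
        for name, volume in list(self._volumes_by_name.items()):
            if volume.volume_id == volume_id:
                del self._volumes_by_name[name]
                return
        # Assumption: deleting an unknown volume is treated as success.

    def volume_count(self) -> int:
        return len(self._volumes_by_name)


def run_pod_lifecycle(driver: InMemoryStorageDriver) -> str:
    """Plays the orchestrator: create a volume for a pod, then delete it."""
    volume = driver.create_volume(name="pvc-demo", capacity_bytes=1024 ** 3)
    driver.delete_volume(volume.volume_id)
    return volume.volume_id


if __name__ == "__main__":
    driver = InMemoryStorageDriver()
    run_pod_lifecycle(driver)
    print("volumes left on the array:", driver.volume_count())
```

The orchestrator side only knows the two calls and their arguments. Everything about how the volume is provisioned stays inside the driver, which is the separation CSI is designed to give.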