Cluster Upgrade Process

In this tutorial we will discuss the cluster upgrade process in Kubernetes.

In the previous tutorial, we discussed how Kubernetes manages its software releases and how different components have their own versions.

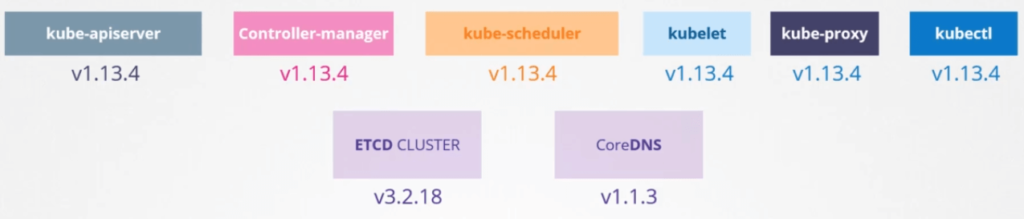

We will keep dependencies on external components like ETCD and CoreDNS aside for now and focus on the core control plane components.

It is not mandatory that they all have the same version. The components can be at different release versions.

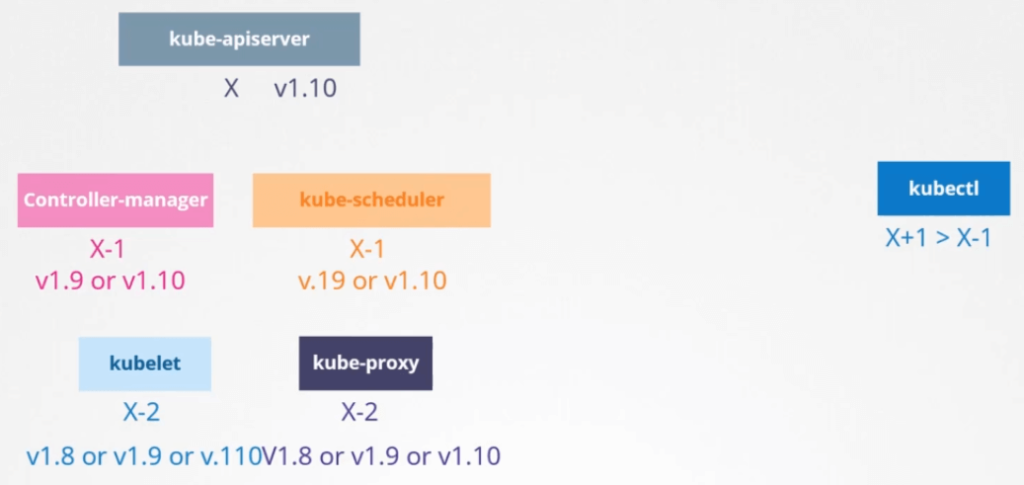

The Kube API server is the primary component in the control plane, and it is the component that all other components talk to. For that reason, none of the other components should ever be at a higher version than the Kube API server.

The controller manager and scheduler can be one minor version lower. So if the Kube API server is at version X, the controller manager and scheduler can be at X-1, and the kubelet and kube-proxy components can be up to two minor versions lower, at X-2.

For example, if the Kube API server is at v1.10, none of these components can be at a higher version such as v1.11.

This is not the case with kubectl. With the Kube API server at v1.10, the kubectl utility can be at v1.11 (one version higher), v1.9 (one version lower), or v1.10 (the same version).

This permissible skew in versions allows us to carry out live upgrades. We can upgrade component by component if required.
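The skew rules above can be expressed as a small check. This is only a sketch: the function names `minor_of` and `skew_ok` and the short component names are made up for illustration, and only the minor version number is compared.

```shell
# Sketch of the skew rules from this tutorial. Function names are
# hypothetical; only the minor version is compared.

# minor_of v1.10.3 prints 10
minor_of() (
  v="${1#v}"        # drop the leading "v"
  v="${v#*.}"       # drop the major number and its dot
  echo "${v%%.*}"   # keep the minor number only
)

# skew_ok <component> <component-version> <api-server-version>
# Exit status 0 means the combination is allowed.
skew_ok() (
  comp=$(minor_of "$2")
  api=$(minor_of "$3")
  behind=$((api - comp))   # how many minor versions behind the API server
  case "$1" in
    controller-manager|scheduler) [ "$behind" -ge 0 ] && [ "$behind" -le 1 ] ;;
    kubelet|kube-proxy)           [ "$behind" -ge 0 ] && [ "$behind" -le 2 ] ;;
    kubectl)                      [ "$behind" -ge -1 ] && [ "$behind" -le 1 ] ;;
    *)                            exit 2 ;;   # unknown component name
  esac
)

skew_ok kubelet v1.8.0 v1.10.0 && echo "kubelet v1.8 with API server v1.10: allowed"
skew_ok scheduler v1.11.0 v1.10.0 || echo "scheduler v1.11 with API server v1.10: not allowed"
```

A negative value of `behind` means the component is newer than the API server, which is only tolerated for kubectl.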

So, when should we upgrade? Let’s say you are at v1.10 and the Kubernetes project has since released v1.11 and v1.12. At any time, Kubernetes supports only the three most recent minor versions.

So, with v1.12 as the latest release, Kubernetes supports v1.12, v1.11, and v1.10. When v1.13 is released, only v1.13, v1.12, and v1.11 are supported.
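To make the support window concrete, here is a minimal sketch that prints the three supported minor releases for a given latest release. The function name `supported_releases` is hypothetical and exists only for this illustration.

```shell
# supported_releases <latest-release>
# Prints the latest release and the two minor releases before it.
supported_releases() (
  v="${1#v}"            # "1.12" from "v1.12"
  major="${v%%.*}"      # "1"
  rest="${v#*.}"        # "12" (or "12.3" if a patch number is given)
  minor="${rest%%.*}"   # "12"
  i=0
  while [ "$i" -lt 3 ] && [ $((minor - i)) -ge 0 ]; do
    echo "v${major}.$((minor - i))"
    i=$((i + 1))
  done
)

supported_releases v1.12   # v1.12, v1.11, v1.10
supported_releases v1.13   # v1.13, v1.12, v1.11
```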

So, just before the release of v1.13 would be a good time to upgrade your cluster to the next release. How do we upgrade? Do we go directly from v1.10 to v1.13? The answer is no.

The recommended approach is to upgrade one minor version at a time: v1.10 to v1.11, then v1.11 to v1.12, and then v1.12 to v1.13. The upgrade process depends on how the cluster is set up.
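The one-minor-version-at-a-time rule can be sketched as a helper that lists every hop between two releases. The function name `upgrade_path` is hypothetical, and it assumes both releases share the same major version.

```shell
# upgrade_path <from-release> <to-release>
# Prints one line per upgrade hop, one minor version at a time.
upgrade_path() (
  from="${1#v}"
  to="${2#v}"
  major="${from%%.*}"
  cur="${from#*.}";   cur="${cur%%.*}"         # minor number we are at
  target="${to#*.}";  target="${target%%.*}"   # minor number we want
  while [ "$cur" -lt "$target" ]; do
    next=$((cur + 1))
    echo "v${major}.${cur} -> v${major}.${next}"
    cur=$next
  done
)

upgrade_path v1.10 v1.13
# v1.10 -> v1.11
# v1.11 -> v1.12
# v1.12 -> v1.13
```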

For example, if our cluster is a managed Kubernetes cluster deployed on a cloud service provider like Google, Google Kubernetes Engine lets us upgrade the cluster with just a few clicks.

If we deployed our Kubernetes cluster using a tool like kubeadm, then the tool can help us plan and upgrade the cluster.

If we deployed our Kubernetes cluster from scratch, then we must manually upgrade the different components of the cluster ourselves. In this tutorial, we will look at the options provided by kubeadm.

So, we have a cluster with master nodes and worker nodes running in production, hosting PODs that serve users.

The nodes and components are at version 1.10. Upgrading the cluster involves 2 major steps.

First, we upgrade the master nodes, and then we upgrade the worker nodes. While the master nodes are being upgraded, the control plane components such as the API server, scheduler, and controller manager go down briefly.

The master going down doesn’t mean that the worker nodes and applications are impacted. All workloads hosted on the worker nodes continue to serve users normally.

Since the master is down, all management functions are down. We can’t access the cluster using kubectl or any other Kubernetes API client. We can’t deploy new applications or delete or modify existing ones.

The controller manager doesn’t work either. If a POD fails, a new POD will not be created automatically. But as long as the nodes and PODs are up, our applications should be up and users will not be impacted.

Once the upgrade is complete and the cluster is back up, it should function normally.

Now the master and its components are at v1.11 and the worker nodes are at v1.10.

As we discussed earlier, this is a supported configuration. Now it’s time to upgrade the worker nodes. There are different strategies available to upgrade worker nodes.

One is to upgrade all of them at once. But then the PODs are down and users are no longer able to access the applications. Once the upgrade is complete, the nodes are back up, new PODs are scheduled, and users can resume access. This is a strategy that requires downtime.

The second strategy is to upgrade one node at a time. Let’s go back to the state where the master is upgraded and the nodes are waiting to be upgraded.

We upgrade the first node first; its workloads move to the second and third nodes, and users are served from there. Once the first node is upgraded and back up, we upgrade the second node, whose workloads move to the first and third nodes. Finally, we upgrade the third node, whose workloads are shared between the first two.

We continue until all the nodes are upgraded to the newer version. We then follow the same procedure to upgrade the nodes from v1.11 to v1.12 and then to v1.13.

The third strategy is to add new nodes to the cluster, nodes that already run the newer software version. This is especially convenient if you are in a cloud environment where you can easily provision new nodes and decommission old ones.

A node with the newer software version is added to the cluster, the workload is moved over to it, and the old node is removed. We repeat this until all nodes are new nodes running the new software version.

Let’s now see how it is done. Say we want to upgrade this cluster from v1.11 to v1.13.

Kubeadm has an upgrade command that helps with upgrading clusters. Run the following command to see the current cluster version, the kubeadm tool version, and the latest stable version of Kubernetes.

$ kubeadm upgrade plan

This command also lists all the control plane components, their versions, and the versions they can be upgraded to. It also tells us that after upgrading the control plane components, we must manually upgrade the kubelet version on each node.

Please note that kubeadm doesn’t install or upgrade kubelets. Finally, it provides the command to upgrade the cluster. Also note that we must upgrade the kubeadm tool itself before we can upgrade the cluster.

The kubeadm tool follows the same version numbering as Kubernetes.

So, we are at v1.11 and we want to go to v1.13. But remember that we can only go one minor version at a time, so we first go to v1.12. First, upgrade the kubeadm tool using the following command:

$ sudo apt-get install -y kubeadm=1.12.0-00

Now upgrade the Kubernetes cluster using the following command:

$ kubeadm upgrade apply v1.12.0

This command pulls the necessary images and upgrades the cluster components. Once the upgrade is complete, our control plane components are at v1.12. However, if you run the following command, you will still see the master node at v1.11.

$ kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
node01   Ready    master   2d    v1.11.3
node02   Ready    <none>   2d    v1.11.3
node03   Ready    <none>   2d    v1.11.3

This is because the output of this command shows the version of the kubelet on each node registered with the API server, not the version of the API server itself.
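One way to see both versions side by side is sketched below. The wrapper function `show_versions` is a hypothetical name; the two kubectl invocations inside it are standard, but they need access to a running cluster, and the exact layout of the `kubectl version` output varies between releases.

```shell
# show_versions: hypothetical helper that prints the API server version
# first and then the kubelet version reported by each node.
show_versions() {
  # The "Server Version" line describes the API server itself.
  kubectl version | grep -i 'server version'
  # The VERSION column of "kubectl get nodes" comes from this field.
  kubectl get nodes -o custom-columns=NODE:.metadata.name,KUBELET:.status.nodeInfo.kubeletVersion
}
```

Calling `show_versions` right after `kubeadm upgrade apply` should show the server on the new version while the kubelets still report the old one.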

So, the next step is to upgrade the kubelets. Remember that, depending on our setup, we may or may not have a kubelet running on the master node.

In this case, the cluster was deployed with kubeadm, so the master node has a kubelet, which is used to run the control plane components as PODs on the master node.

So, the next step is to upgrade the kubelet on the master node, if there is one. Run the following command to upgrade the kubelet:

$ sudo apt-get install -y kubelet=1.12.0-00

Once the package is upgraded, restart the kubelet using the following command:

$ systemctl restart kubelet

Now run the following command to check the version:

$ kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
node01   Ready    master   2d    v1.12.0
node02   Ready    <none>   2d    v1.11.3
node03   Ready    <none>   2d    v1.11.3

Now the master is upgraded to v1.12. The worker nodes are still at v1.11.

So, next the worker nodes. Let us upgrade them one at a time. We first need to move the workloads from the first worker node to the other nodes. The following command safely evicts all PODs from the node so that they are rescheduled on the other nodes.

$ kubectl drain node02

Now upgrade the kubeadm and kubelet packages on the worker node, as we did on the master node.

$ sudo apt-get install -y kubeadm=1.12.0-00
$ sudo apt-get install -y kubelet=1.12.0-00
$ kubeadm upgrade node config --kubelet-version v1.12.0
$ systemctl restart kubelet

The node should now be up with the new software version. However, when we drained the node, we also marked it unschedulable. So we need to mark it schedulable again by running the following command.

$ kubectl uncordon node02

The node is now schedulable. But remember that the PODs do not necessarily come back to this node. It is only marked as schedulable. PODs land on this node only when they are deleted from other nodes and recreated, or when new PODs are scheduled.

Similarly, we can upgrade the other worker nodes. Now all the nodes are upgraded.
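The per-node sequence can be summarised with a small sketch that only prints the steps in order and runs nothing against the cluster. The function name `worker_upgrade_plan` is hypothetical, and the node names are taken from the `kubectl get nodes` output shown earlier.

```shell
# worker_upgrade_plan <version> <node>...
# Prints the upgrade steps for each worker node, one node at a time.
# Nothing is executed; lines marked [on <node>] are run on that node.
worker_upgrade_plan() (
  version="$1"
  shift
  for node in "$@"; do
    echo "kubectl drain $node"
    echo "[on $node] upgrade the kubeadm and kubelet packages to $version"
    echo "[on $node] kubeadm upgrade node config --kubelet-version v$version"
    echo "[on $node] systemctl restart kubelet"
    echo "kubectl uncordon $node"
  done
)

worker_upgrade_plan 1.12.0 node02 node03
```

Each node is drained, upgraded, and uncordoned before the next one is touched, which is what keeps the workloads available. On many clusters `kubectl drain` also needs extra flags such as `--ignore-daemonsets` to complete.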