CoreDNS in Kubernetes

In this tutorial, we discuss CoreDNS and how Kubernetes implements DNS in the cluster.

In the previous tutorial, we saw how you can address a service or pod from another pod. In this tutorial, we will see how Kubernetes makes that possible.

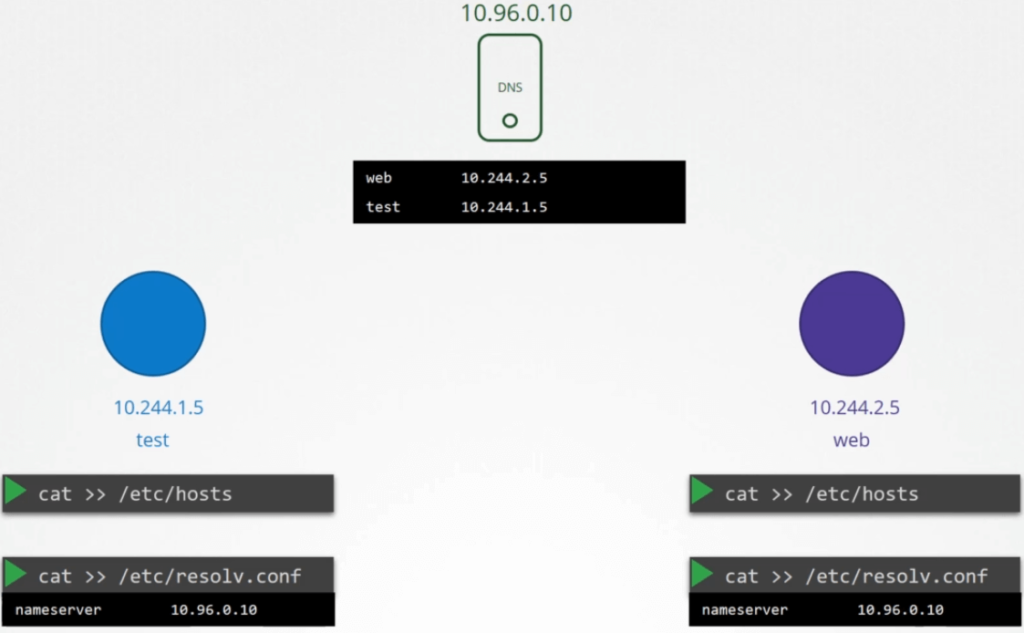

Say you were given two pods with two IP addresses. How would you get them to resolve each other by name? Based on what we discussed in the prerequisite tutorial on DNS, an easy way is to add an entry to each pod's /etc/hosts file.

On the first pod, test, I would add an entry saying that the second pod, web, is at 10.244.2.5. On the second pod, I would add an entry saying that the first pod, test, is at 10.244.1.5.

But when you have thousands of pods in the cluster, with hundreds of them being created and deleted every minute, this is not a suitable solution.
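To make the scaling problem concrete, here is a minimal Python sketch of the /etc/hosts approach. The pod inventory is hypothetical (just the two pods from the example), and the helper names are illustrative only. Each pod needs one line per other pod, so a cluster of N pods needs N * (N - 1) lines in total, and every pod creation or deletion touches the hosts file of every other pod.

```python
# Hypothetical pod inventory: pod name -> pod IP (the two pods from the example)
PODS = {"test": "10.244.1.5", "web": "10.244.2.5"}


def hosts_entries_for(pod_name: str, inventory: dict[str, str]) -> list[str]:
    """Return the /etc/hosts lines one pod needs to reach every *other* pod by name."""
    return [f"{ip}\t{name}" for name, ip in sorted(inventory.items()) if name != pod_name]


def total_entries(inventory: dict[str, str]) -> int:
    """Count hosts lines across the cluster: each of N pods lists the other N - 1."""
    return sum(len(hosts_entries_for(name, inventory)) for name in inventory)


if __name__ == "__main__":
    for name in sorted(PODS):
        print(f"# /etc/hosts on pod {name}")
        print("\n".join(hosts_entries_for(name, PODS)))

    # A hypothetical 1000-pod cluster: creating or deleting any pod means
    # editing the hosts file of every other pod.
    big = {f"pod-{i}": f"10.244.{i // 250}.{i % 250 + 1}" for i in range(1000)}
    print(total_entries(big))  # 999000
```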

Move entries into a central DNS server

So we move these entries into a central DNS server. We then point the pods to the DNS server by adding an entry to their /etc/resolv.conf files, specifying that the nameserver is at the IP address of the DNS server, which happens to be 10.96.0.10 in this case.

So every time a new pod is created, we add a record for it in the DNS server so that other pods can reach it, and we configure the new pod's /etc/resolv.conf file to point to the DNS server so that it can resolve other pods in the cluster.
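The central-server idea can be sketched in a few lines of Python. This is a toy model, not how CoreDNS is implemented: the `CentralDNS` class and its methods are hypothetical, and 10.96.0.10 is simply the DNS server address from the example. Creating or deleting a pod now changes exactly one shared table, and each new pod only needs a resolv.conf that points at the server.

```python
from dataclasses import dataclass, field
from typing import Optional

DNS_SERVER_IP = "10.96.0.10"  # the DNS server address used in the example


@dataclass
class CentralDNS:
    """Toy central DNS server: a single name -> IP table shared by all pods."""

    records: dict[str, str] = field(default_factory=dict)

    def pod_created(self, name: str, ip: str) -> str:
        """Add a record for the new pod and return the resolv.conf to give it."""
        self.records[name] = ip
        return f"nameserver {DNS_SERVER_IP}\n"

    def pod_deleted(self, name: str) -> None:
        """Drop the record of a deleted pod (no-op if it was never registered)."""
        self.records.pop(name, None)

    def resolve(self, name: str) -> Optional[str]:
        """Answer a lookup from the central table; None stands in for NXDOMAIN."""
        return self.records.get(name)


if __name__ == "__main__":
    dns = CentralDNS()
    print(dns.pod_created("test", "10.244.1.5"), end="")  # nameserver 10.96.0.10
    dns.pod_created("web", "10.244.2.5")
    print(dns.resolve("web"))  # 10.244.2.5
    dns.pod_deleted("web")
    print(dns.resolve("web"))  # None
```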

This is roughly how Kubernetes does it, except that it does not create entries that map pod names to their IP addresses. As we saw in the previous tutorial, it does that for services.

For pods, it forms hostnames by replacing the dots in the pod's IP address with dashes.
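The dashed naming scheme is a simple string transformation. The Python sketch below is illustrative only, and its function names are hypothetical. The full record names follow the schema described in the Kubernetes DNS documentation: `<dashed-ip>.<namespace>.pod.<cluster-domain>` for pods and `<service>.<namespace>.svc.<cluster-domain>` for services. The default namespace and the cluster.local domain are assumed here.

```python
import ipaddress


def pod_hostname(pod_ip: str) -> str:
    """Dashed form of a pod's IPv4 address, e.g. 10.244.2.5 -> 10-244-2-5."""
    ipaddress.IPv4Address(pod_ip)  # raises ValueError if the address is malformed
    return pod_ip.replace(".", "-")


def pod_fqdn(pod_ip: str, namespace: str = "default", domain: str = "cluster.local") -> str:
    """Pod record name: <dashed-ip>.<namespace>.pod.<cluster-domain>."""
    return f"{pod_hostname(pod_ip)}.{namespace}.pod.{domain}"


def service_fqdn(service: str, namespace: str = "default", domain: str = "cluster.local") -> str:
    """Service record name: <service>.<namespace>.svc.<cluster-domain>."""
    return f"{service}.{namespace}.svc.{domain}"


if __name__ == "__main__":
    print(pod_hostname("10.244.2.5"))   # 10-244-2-5
    print(pod_fqdn("10.244.2.5"))       # 10-244-2-5.default.pod.cluster.local
    print(service_fqdn("web-service"))  # web-service.default.svc.cluster.local
```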

Kubernetes implements DNS in the same way: it deploys a DNS server within the cluster. Prior to version 1.12, the DNS server implemented by Kubernetes was known as kube-dns.

CoreDNS

Starting with Kubernetes version 1.12, the recommended DNS server is CoreDNS. So how is CoreDNS set up in the cluster?

The CoreDNS server is deployed as a pod in the kube-system namespace of the Kubernetes cluster. More precisely, it is deployed as two pods for redundancy, as part of a ReplicaSet.

That ReplicaSet is in turn managed by a Deployment, but this detail doesn't really matter here. We'll simply treat CoreDNS as a pod in this tutorial.

This pod runs the coredns executable, the same executable that we ran when we deployed CoreDNS ourselves.

CoreDNS Configuration File

CoreDNS requires a configuration file. When we ran it ourselves, we used a file named Corefile. Kubernetes does the same: it uses a file named Corefile located at /etc/coredns.

```
$ cat /etc/coredns/Corefile
.:53 {
    errors
    health
    kubernetes cluster.local in-addr.arpa ip6.arpa {
        pods insecure
        fallthrough in-addr.arpa ip6.arpa
        ttl 30
    }
    prometheus :9153
    forward . /etc/resolv.conf
    cache 30
    reload
}
```

Within this file, a number of plugins are configured: for handling errors, reporting health, exposing monitoring metrics, caching, and so on.
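To see which plugins a Corefile enables, the hypothetical Python helper below lists the directives at server-block level together with their arguments. It understands only the small subset of Corefile syntax used in the file above (one directive per line, braces for blocks) and is not CoreDNS's own parser.

```python
COREFILE = """\
.:53 {
    errors
    health
    kubernetes cluster.local in-addr.arpa ip6.arpa {
        pods insecure
        fallthrough in-addr.arpa ip6.arpa
        ttl 30
    }
    prometheus :9153
    forward . /etc/resolv.conf
    cache 30
    reload
}
"""


def list_plugins(corefile: str) -> dict[str, list[str]]:
    """Map each plugin directive at server-block level to its arguments.

    Handles only a small subset of Corefile syntax: one directive per line,
    a trailing '{' opens a block and a lone '}' closes it. Options nested
    inside a plugin's own block (such as `pods insecure`) are skipped.
    """
    plugins: dict[str, list[str]] = {}
    depth = 0
    for raw in corefile.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and indentation
        if not line:
            continue
        if line == "}":
            depth -= 1
            continue
        opens_block = line.endswith("{")
        tokens = line.rstrip("{").split()
        if depth == 1:  # directly inside the ".:53 { ... }" server block
            plugins[tokens[0]] = tokens[1:]
        if opens_block:
            depth += 1
    return plugins


if __name__ == "__main__":
    plugins = list_plugins(COREFILE)
    print(list(plugins))
    # ['errors', 'health', 'kubernetes', 'prometheus', 'forward', 'cache', 'reload']
    print(plugins["kubernetes"])  # ['cluster.local', 'in-addr.arpa', 'ip6.arpa']
```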

The plugin that makes CoreDNS work with Kubernetes is the kubernetes plugin. This is where the top-level domain name for the cluster is set, in this case cluster.local, so every record the plugin creates for services and pods falls under this domain. The kubernetes plugin takes multiple options.

The pods option you see here is responsible for creating records for pods in the cluster. Remember the record created for each pod by converting its IP address into a dashed format? That feature is disabled by default, but it can be enabled with this entry.

Any query that this DNS server can't resolve itself, for example when a pod tries to reach www.google.com, is forwarded to the nameserver specified in the CoreDNS pod's /etc/resolv.conf file.

That /etc/resolv.conf file is set to use the nameserver of the Kubernetes node. Also note that this Corefile is passed into the pod as a ConfigMap object, so if you need to modify the configuration, you can edit the ConfigMap object.
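The query routing described above can be modeled as follows. This Python sketch is a simplification, not CoreDNS's implementation: the SERVICES table is a hypothetical stand-in for what the kubernetes plugin learns from the API server, only A queries are considered, the reverse zones (in-addr.arpa, ip6.arpa) are ignored, and forwarding is represented by a label rather than a real upstream query. The `pods_mode` parameter mirrors the plugin's `pods` option, where `disabled` is the default and `insecure` answers pod queries by decoding the dashed IP without checking that such a pod exists.

```python
from typing import Optional, Tuple

CLUSTER_DOMAIN = "cluster.local"

# Hypothetical cluster state the kubernetes plugin would learn from the API server.
SERVICES = {("web-service", "default"): "10.97.206.196"}


def answer(qname: str, pods_mode: str = "insecure") -> Tuple[str, Optional[str]]:
    """Return (plugin that handled an A query, IPv4 answer or None for NXDOMAIN)."""
    name = qname.rstrip(".").lower()
    suffix = "." + CLUSTER_DOMAIN
    if name != CLUSTER_DOMAIN and not name.endswith(suffix):
        # Outside the cluster zone: `forward . /etc/resolv.conf` sends the query
        # to the upstream nameserver (the upstream is not simulated here).
        return ("forward", None)
    labels = name[: -len(suffix)].split(".")
    if len(labels) == 3 and labels[2] == "svc":
        # <service>.<namespace>.svc.cluster.local
        return ("kubernetes", SERVICES.get((labels[0], labels[1])))
    if len(labels) == 3 and labels[2] == "pod" and pods_mode == "insecure":
        # <a-b-c-d>.<namespace>.pod.cluster.local: decode the IP from the name
        # without checking that such a pod exists, as `pods insecure` does.
        octets = labels[0].split("-")
        if len(octets) == 4 and all(o.isdecimal() and int(o) <= 255 for o in octets):
            return ("kubernetes", ".".join(octets))
    # Unknown names, and pod names when pods_mode is "disabled", end up here.
    return ("kubernetes", None)


if __name__ == "__main__":
    print(answer("web-service.default.svc.cluster.local"))  # ('kubernetes', '10.97.206.196')
    print(answer("10-244-2-5.default.pod.cluster.local"))   # ('kubernetes', '10.244.2.5')
    print(answer("10-244-2-5.default.pod.cluster.local", pods_mode="disabled"))  # ('kubernetes', None)
    print(answer("www.google.com"))                         # ('forward', None)
```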

We now have the CoreDNS pod up and running with the appropriate Kubernetes plugin. It watches the Kubernetes cluster for new pods and services, and every time a pod or service is created, it adds a record for it to its database.

Pointing pods to CoreDNS

The next step is for the pods to point to the CoreDNS server. What address do the pods use to reach the DNS server? When we deploy the CoreDNS solution, it also creates a service to make it available to other components within the cluster.

This service is named kube-dns by default. Its IP address is configured as the nameserver on the pods.

You don't have to configure this yourself: Kubernetes sets up the DNS configuration on pods automatically when they are created.

Want to guess which Kubernetes component is responsible for that? The kubelet. If you look at the kubelet's config file, you will see the IP of the DNS server and the cluster domain in it.
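In the kubelet's configuration file these values appear as the clusterDNS and clusterDomain fields. The Python sketch below illustrates how a pod's resolv.conf can be derived from them for a pod in a given namespace. The function name is hypothetical; the `options ndots:5` line reflects the kubelet's default for pods with the default ClusterFirst DNS policy, and in practice the kubelet may also append search domains inherited from the node, which this sketch leaves out.

```python
def render_resolv_conf(cluster_dns: list[str], cluster_domain: str, namespace: str) -> str:
    """Build the resolv.conf a ClusterFirst pod in `namespace` would receive (simplified)."""
    search = [
        f"{namespace}.svc.{cluster_domain}",  # services in the pod's own namespace
        f"svc.{cluster_domain}",              # <service>.<namespace> in any namespace
        cluster_domain,                       # any other name under the cluster domain
    ]
    lines = [f"nameserver {ip}" for ip in cluster_dns]
    lines.append("search " + " ".join(search))
    lines.append("options ndots:5")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Example values, as they might appear under clusterDNS / clusterDomain
    # in the kubelet config for the cluster in this tutorial.
    print(render_resolv_conf(["10.96.0.10"], "cluster.local", "default"), end="")
```

For a pod in the default namespace this prints a nameserver line for 10.96.0.10, a search line listing default.svc.cluster.local, svc.cluster.local and cluster.local, and the ndots option.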

Once the pods are configured with the right nameserver, they can resolve other pods and services. You can access the web-service service as web-service, web-service.default, web-service.default.svc, or web-service.default.svc.cluster.local.

```
$ curl web-service
$ curl web-service.default
$ curl web-service.default.svc
$ curl web-service.default.svc.cluster.local
```

If you look up web-service manually with nslookup or the host command, it returns the fully qualified domain name (FQDN) of the service, which happens to be web-service.default.svc.cluster.local.

```
$ host web-service
web-service.default.svc.cluster.local has address 10.97.206.196
```

But you only asked for web-service, so how did it look up the full name? It turns out the pod's /etc/resolv.conf file also has a search entry, which lists default.svc.cluster.local, svc.cluster.local, and cluster.local.

```
$ cat /etc/resolv.conf
nameserver 10.96.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
```

This lets you find the service using any of these names: web-service, web-service.default, or web-service.default.svc.
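The search mechanism can be simulated in Python. This sketch assumes glibc-style resolver rules and `ndots:5` (the kubelet's usual setting, although the resolv.conf shown above omits the options line); RECORDS is a hypothetical stand-in for what CoreDNS holds. A name with fewer than ndots dots is tried with each search domain appended before being tried as-is, which is why every short form of web-service ends up at the same FQDN.

```python
SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
NDOTS = 5  # assumed kubelet default; not shown in the resolv.conf above

# Hypothetical record table held by CoreDNS for this example.
RECORDS = {"web-service.default.svc.cluster.local": "10.97.206.196"}


def candidates(name: str, search: list[str] = SEARCH, ndots: int = NDOTS) -> list[str]:
    """Names a glibc-style stub resolver tries, in order, for one lookup."""
    if name.endswith("."):  # trailing dot: absolute name, search list is skipped
        return [name[:-1]]
    expanded = [f"{name}.{domain}" for domain in search]
    if name.count(".") >= ndots:
        return [name] + expanded  # enough dots: try the name as-is first
    return expanded + [name]      # otherwise walk the search list first


def lookup(name: str):
    """Return (matching FQDN, IP) for the first candidate with a record, else None."""
    for fqdn in candidates(name):
        if fqdn in RECORDS:
            return fqdn, RECORDS[fqdn]
    return None


if __name__ == "__main__":
    for short in ["web-service", "web-service.default", "web-service.default.svc",
                  "web-service.default.svc.cluster.local"]:
        print(short, "->", lookup(short))
```

Because the full name web-service.default.svc.cluster.local has only four dots, the resolver also tries it with each search suffix first; appending a trailing dot (web-service.default.svc.cluster.local.) marks it as absolute and skips the search list.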

```
$ host web-service
web-service.default.svc.cluster.local has address 10.97.206.196

$ host web-service.default
web-service.default.svc.cluster.local has address 10.97.206.196

$ host web-service.default.svc
web-service.default.svc.cluster.local has address 10.97.206.196

$ host web-service.default.svc.cluster.local
web-service.default.svc.cluster.local has address 10.97.206.196
```

However, notice that the search entries cover only services, so you won't be able to reach a pod the same way.

```
$ host 10-244-2-5
host 10-244-2-5 not found: 3(NXDOMAIN)
```

Instead, you need to specify the pod's fully qualified domain name to reach it.

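```
$ host 10-244-2-5.default.pod.cluster.local
10-244-2-5.default.pod.cluster.local has address 10.244.2.5
```

Pod records live under pod.cluster.local rather than svc.cluster.local, and they resolve only because the Corefile enables the pods option (pods insecure).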