PODs

A POD is a group of one or more containers that logically belong together. PODs run on nodes. Kubernetes does not deploy containers directly on the worker nodes; instead, the containers are encapsulated in a Kubernetes object known as a POD. A POD is a single instance of an application, and it is the smallest object that you can create in Kubernetes.

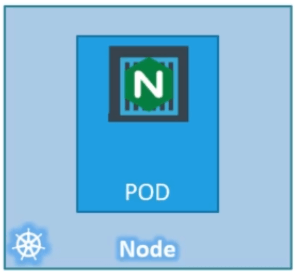

The following picture shows a single-node Kubernetes cluster with a single instance of an application running in a single Docker container encapsulated in a POD.

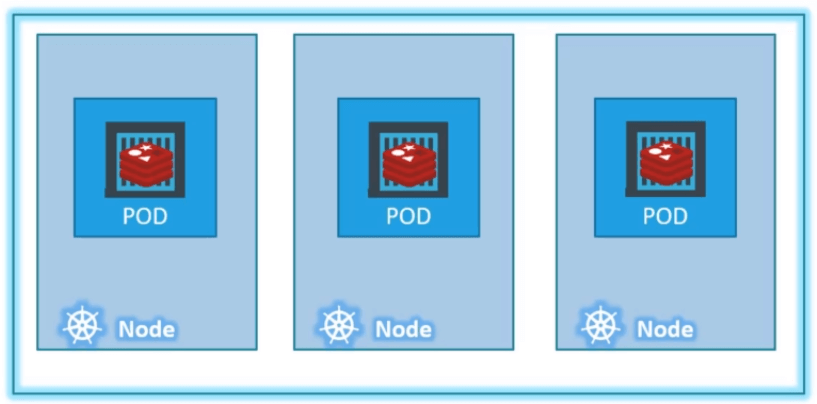
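A POD can also be described declaratively in a manifest file. The sketch below is a minimal example of the single-container POD described above, assuming the POD name `nginx`, the label `app: nginx` and the file name `nginx-pod.yaml` (all illustrative choices). It only writes the manifest to a local file, so it runs without a cluster.

```shell
# Write a minimal single-container POD manifest to a local file.
# The file name, POD name and label are illustrative assumptions.
cat > nginx-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  containers:
    - name: nginx      # the single container encapsulated by this POD
      image: nginx     # unqualified image name, pulled from Docker Hub by default
EOF
```

On a running cluster, the POD could then be created with `kubectl apply -f nginx-pod.yaml`.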

What if the number of users accessing the application increases? Then you need to scale your application by adding more instances of your web application to share the load.

So where do we spin up the additional instances? Do we bring up a new container in the same POD? No. We create a new POD altogether, with a new instance of the same application.

We now have 2 instances of our web application running in 2 separate PODs on the same Kubernetes node.

What if the number of users increases further and the current node no longer has sufficient capacity? Then you can deploy additional PODs on a new node in the cluster.

Note

PODs usually have a one-to-one relationship with the containers running your application. To scale up, you create new PODs; to scale down, you delete existing PODs. You do not add containers to an existing POD to scale your application.
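To illustrate the rule in the note above, here is a minimal sketch of scaling out by hand: it writes one manifest per POD, each POD with its own name (`webapp-1`, `webapp-2`) but the same image. The names, the `app: webapp` label and the file names are hypothetical, and the sketch only generates files, so no cluster is needed to run it.

```shell
# Hypothetical scale-out sketch: one manifest per POD instance.
# Each POD gets a unique name but runs the same image, one container per POD.
for i in 1 2; do
  cat > "webapp-$i.yaml" <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: webapp-$i
  labels:
    app: webapp
spec:
  containers:
    - name: webapp
      image: nginx
EOF
done
```

Applying both files with `kubectl apply -f webapp-1.yaml -f webapp-2.yaml` would give two independent PODs running the same application; scaling back down means deleting one of them, for example with `kubectl delete pod webapp-2`, rather than removing a container from a POD.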

Multi Container PODs

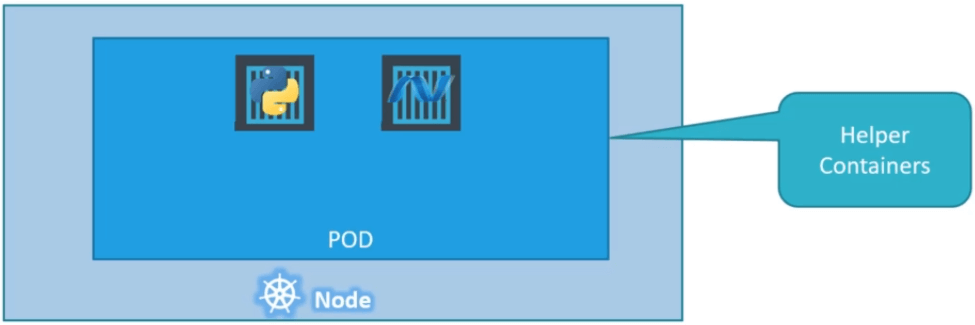

As we know, PODs usually have a one-to-one relationship with the containers running the application. But are we restricted to a single container in a single POD? No.

A single POD can have multiple containers, although they are usually not multiple containers of the same kind.

As discussed previously, if our intention is to scale the application, we create additional PODs. But sometimes you might need a helper container that performs a supporting task for the application, such as processing user-entered data or files uploaded by users. In this case, both containers can be part of the same POD: when a new application container is created, the helper container is created too, and when the application container dies, the helper container dies as well, since both are part of the same POD.

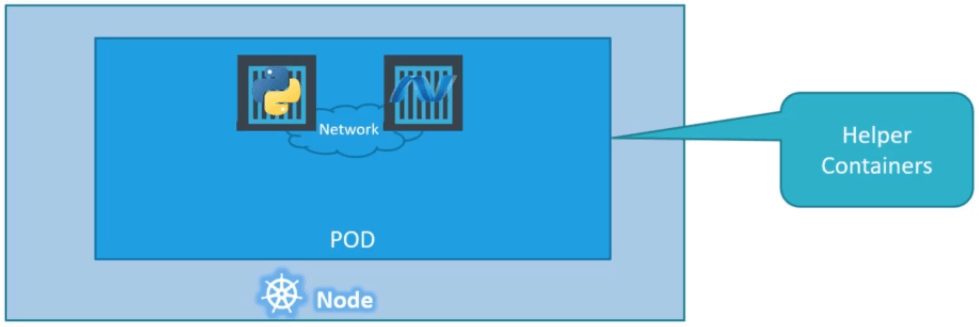

The two containers can also communicate with each other directly by referring to each other as localhost, since they share the same network namespace. They can also share the same storage, in the form of volumes defined on the POD.
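The multi-container POD described above can be sketched as a manifest with an application container and a helper container that mount the same volume. This is a minimal illustration, not a prescribed layout: the POD, container and volume names, the file name and the helper's task (rewriting the page nginx serves every 10 seconds) are all hypothetical. The block only writes the manifest to a file.

```shell
# Sketch of a POD with an application container and a helper container.
# Both mount the same emptyDir volume and share the POD's network namespace.
# All names and the helper's task are hypothetical examples.
cat > webapp-helper-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: webapp-with-helper
spec:
  volumes:
    - name: shared-data
      emptyDir: {}          # scratch volume that lives as long as the POD
  containers:
    - name: webapp
      image: nginx
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html   # nginx serves files from here
    - name: helper
      image: busybox
      # Helper task: write the current date into the page nginx serves.
      command: ["sh", "-c", "while true; do date > /data/index.html; sleep 10; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
EOF
```

After `kubectl apply -f webapp-helper-pod.yaml`, running `kubectl exec webapp-with-helper -c helper -- wget -qO- http://localhost` should reach nginx from the helper container over localhost and print the timestamp the helper wrote into the shared volume.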

How to deploy a POD

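We can deploy a POD using the kubectl run command. It creates a POD automatically and deploys an instance of the application in it. For example, the following command creates a POD named nginx from the nginx image (pulled from Docker Hub by default):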

```shell
kubectl run nginx --image nginx
```