Kafka Topic Replication

In this tutorial, we are going to discuss Apache Kafka topic replication and what it implies for your consumers and your producers.

One of the main reasons for Kafka’s popularity is the resilience it offers in the face of broker failures. Machines fail, and often we cannot predict when that is going to happen or prevent it. Kafka is designed with replication as a core feature to withstand these failures while maintaining uptime and data accuracy.

When you are experimenting on your own machine, a topic can have a replication factor of 1. In production, where you run a real Kafka cluster, you should set a replication factor greater than 1, usually 2 or 3 and most commonly 3. That way, if a broker is down, meaning a Kafka server is stopped for maintenance or because of a technical issue, another Kafka broker still has a copy of the data and can keep serving reads and receiving writes.

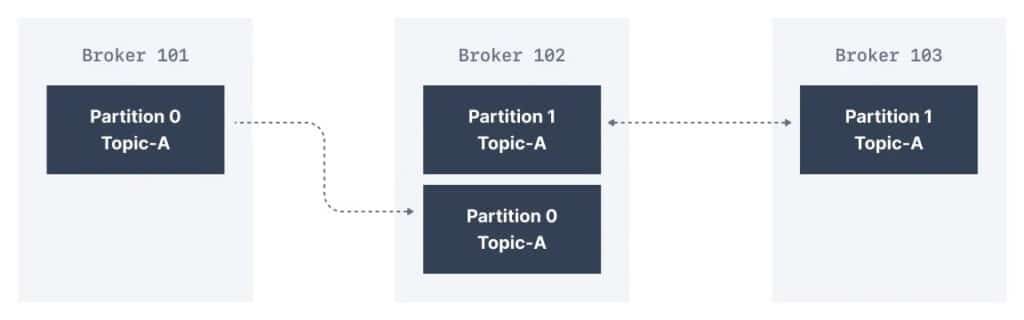

Let’s take an example to understand this better. We have Topic-A, which has 2 partitions and a replication factor of 2, and a cluster of 3 Kafka brokers. We place partition 0 of Topic-A on broker 101 and partition 1 of Topic-A on broker 102. This is the initial placement.

Because the replication factor is 2, the replication mechanism then puts a copy of partition 0 on broker 102 and a copy of partition 1 on broker 103.
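The placement described above can be sketched in a few lines of Python. This is only a toy round-robin model, not Kafka's actual assignment logic, which also takes things like rack awareness into account. The function name `assign_replicas` and its parameters are made up for this illustration.

```python
def assign_replicas(broker_ids, num_partitions, replication_factor):
    """Toy round-robin placement; the first broker in each list holds the initial copy."""
    if replication_factor > len(broker_ids):
        # A partition cannot have two copies on the same broker.
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for partition in range(num_partitions):
        assignment[partition] = [
            broker_ids[(partition + offset) % len(broker_ids)]
            for offset in range(replication_factor)
        ]
    return assignment


topic_a = assign_replicas([101, 102, 103], num_partitions=2, replication_factor=2)
print(topic_a)  # {0: [101, 102], 1: [102, 103]}

# 2 partitions x replication factor 2 = 4 copies spread over the cluster
total_replicas = sum(len(replicas) for replicas in topic_a.values())
print(total_replicas)  # 4
```

With these inputs the sketch reproduces the layout of the example: partition 0 on brokers 101 and 102, partition 1 on brokers 102 and 103.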

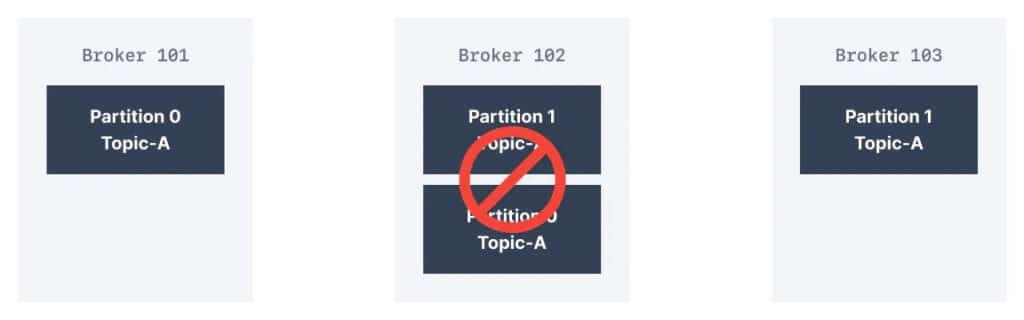

As we can see here, we have 4 units of data, because we have 2 partitions and a replication factor of 2. We can also see that brokers are replicating data from other brokers. So what does that mean in practice? What if we lose broker 102?

Brokers 101 and 103 are still up and can still serve the data: broker 101 holds partition 0 and broker 103 holds partition 1. So both partitions are still available within our cluster, and this is why we have a replication factor.

To put it simply, with a replication factor of 2 you can lose one broker and be fine.
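To make the failure scenario concrete, here is a small Python sketch that removes failed brokers from the example layout and checks whether every partition still has a copy somewhere. It assumes every replica is fully caught up, and the helper names are hypothetical.

```python
# Replica layout from the example: partition -> brokers holding a copy
assignment = {0: [101, 102], 1: [102, 103]}


def surviving_replicas(assignment, failed_brokers):
    """Return, per partition, the brokers that still hold a copy."""
    failed = set(failed_brokers)
    return {
        partition: [b for b in replicas if b not in failed]
        for partition, replicas in assignment.items()
    }


def all_partitions_available(assignment, failed_brokers):
    """True if every partition has at least one copy left."""
    return all(surviving_replicas(assignment, failed_brokers).values())


print(surviving_replicas(assignment, [102]))             # {0: [101], 1: [103]}
print(all_partitions_available(assignment, [102]))       # True
print(all_partitions_available(assignment, [101, 102]))  # False
```

Losing broker 102 alone leaves one copy of each partition, while losing both 101 and 102 leaves partition 0 with no copy at all.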

What are Kafka Partition Leaders and Replicas?

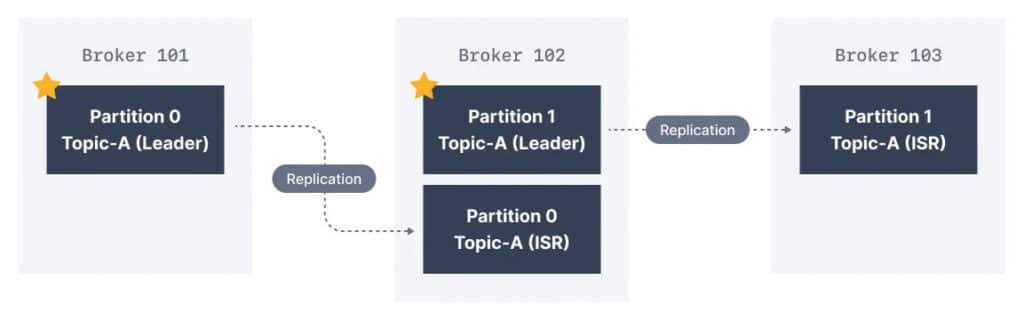

For a given topic partition, one Kafka broker is designated by the cluster to be responsible for receiving data from clients and sending data to them. That broker is known as the leader broker of that topic partition. Any other broker that is storing replicated data for that partition is referred to as a replica. Therefore, each partition has exactly one leader and can have multiple replicas.

If we go back to the diagram from before, I have added a little star on the leader of each partition.

We can see that broker 101 is the leader of partition 0 and broker 102 is the leader of partition 1, while broker 102 is a replica of partition 0 and broker 103 is a replica of partition 1. So the other brokers replicate the data. If the data is replicated fast enough, then each replica is called an in-sync replica (ISR).

What are In-Sync Replicas (ISR)?

An ISR is a replica that is up to date with the leader broker for a partition. Any replica that is not up to date with the leader is out of sync. Here, broker 101 is the leader of partition 0 and broker 102 is the leader of partition 1, while broker 102 is a replica for partition 0 and broker 103 is a replica for partition 1. If the leader broker fails, one of the replicas, by default an in-sync one, is elected as the new partition leader.

This matters because leaders play a central role in how producers and consumers talk to the cluster.
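The election step can be illustrated with a toy Python model. In a real cluster the election is carried out by Kafka itself, so treat this purely as a sketch; the `PartitionState` class, the `handle_broker_failure` function, and the rule of picking the first surviving in-sync replica are simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass
class PartitionState:
    replicas: list  # every broker holding a copy, in assignment order
    leader: int     # broker currently acting as leader (None if offline)
    isr: set        # brokers that are up to date, leader included


def handle_broker_failure(state, failed_broker):
    """Drop the failed broker from the ISR and pick a new leader if needed."""
    state.isr.discard(failed_broker)
    if state.leader != failed_broker:
        return state.leader  # the leader is unaffected
    # Simplification: take the first surviving in-sync replica in assignment order.
    candidates = [b for b in state.replicas if b in state.isr]
    state.leader = candidates[0] if candidates else None
    return state.leader


partition_0 = PartitionState(replicas=[101, 102], leader=101, isr={101, 102})
new_leader = handle_broker_failure(partition_0, failed_broker=101)
print(new_leader)  # 102

# If the only other replica had fallen out of sync, no leader can be chosen here.
lagging = PartitionState(replicas=[102, 103], leader=102, isr={102})
print(handle_broker_failure(lagging, failed_broker=102))  # None
```

The second case shows why being in sync matters: a replica that has fallen behind is not a safe candidate in this model, so the partition is left without a leader.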

Default Producer and Consumer Behavior with Leaders

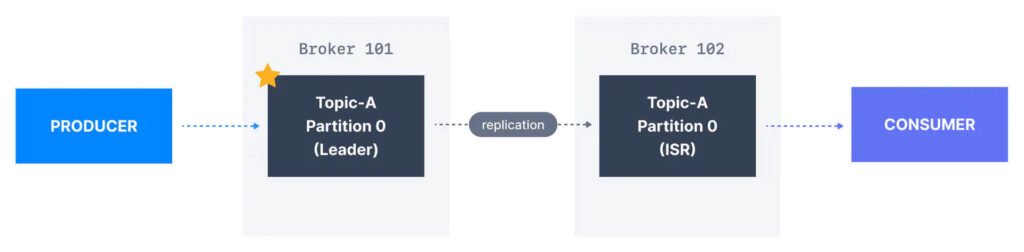

By default, your producers only write to the leader broker for a partition.

So if the producer wants to send data to partition 0, and that partition has a leader and an ISR as in the previous example, the producer knows that it should send the data only to the broker that is the leader of that partition. This is a very important Kafka feature.

Kafka consumers, by default, also read only from the leader of a partition. That means the consumer will request data for partition 0 only from the leader, broker 101. Broker 102 in the previous example is a replica purely for the sake of replicating data; if broker 101 goes down, broker 102 can become the new leader and serve the data for the producer and the consumer. That is the default behavior.
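The default flow for partition 0 can be pictured with a tiny in-memory model: the producer appends to the leader, the replica copies what it is missing from the leader, and the consumer reads from the leader. The `Broker` class and the three functions are hypothetical stand-ins, not Kafka client APIs.

```python
class Broker:
    """Minimal stand-in for a broker holding one partition's log."""

    def __init__(self, broker_id):
        self.broker_id = broker_id
        self.log = []


def produce(leader, record):
    """Producers write to the leader; returns the offset of the new record."""
    leader.log.append(record)
    return len(leader.log) - 1


def replicate(leader, follower):
    """The replica copies the records it does not have yet from the leader."""
    follower.log.extend(leader.log[len(follower.log):])


def consume(leader, from_offset):
    """By default, consumers read from the leader as well."""
    return leader.log[from_offset:]


leader, follower = Broker(101), Broker(102)
produce(leader, "order-1")
last_offset = produce(leader, "order-2")
replicate(leader, follower)

print(last_offset)                  # 1
print(consume(leader, 0))           # ['order-1', 'order-2']
print(follower.log == leader.log)   # True
```

Because the replica ends up with the same log as the leader, it is in a position to take over if broker 101 goes down.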

Kafka Consumer Replica Fetching

A newer feature called Kafka Consumer Replica Fetching, introduced in Kafka 2.4, allows consumers to read from the closest replica. Say broker 101, the leader of partition 0, receives data from the producer and replicates it to the ISR for partition 0 on broker 102. It is then possible for our consumer to read from that replica instead of the leader. Why? This may improve latency, because the consumer may be really close to broker 102. It may also decrease network costs if you are using the cloud, because traffic that stays within the same data center has little to no cost.
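In Kafka itself, replica fetching is driven by configuration: brokers declare their location with `broker.rack` and set `replica.selector.class` to `org.apache.kafka.common.replica.RackAwareReplicaSelector`, and the consumer declares its own location with `client.rack`. The Python sketch below only mimics the idea of preferring an in-sync replica in the consumer's rack; the function name, the dictionary layout, and the rack names are invented for this illustration.

```python
def choose_fetch_broker(partition, client_rack=None):
    """Return the broker a consumer would read from in this toy model."""
    if client_rack is not None:
        # Prefer an in-sync replica located in the same rack as the consumer.
        for broker_id in sorted(partition["isr"]):
            if partition["racks"].get(broker_id) == client_rack:
                return broker_id
    # No rack given, or no replica in that rack: fall back to the leader.
    return partition["leader"]


partition_0 = {
    "leader": 101,
    "isr": {101, 102},
    "racks": {101: "rack-a", 102: "rack-b"},  # hypothetical rack names
}

default_choice = choose_fetch_broker(partition_0)
nearby_choice = choose_fetch_broker(partition_0, client_rack="rack-b")
unknown_rack_choice = choose_fetch_broker(partition_0, client_rack="rack-z")

print(default_choice)       # 101
print(nearby_choice)        # 102
print(unknown_rack_choice)  # 101
```

A consumer that declares no rack keeps the default behavior and reads from the leader, which matches the section above.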