Kafka Consumers

In this tutorial, we are going to discuss Apache Kafka consumers and message deserialization. We have seen how to produce data into a topic. Now we need to read data from that topic, and for this we are going to use consumers.

Applications that read data from Kafka topics are known as consumers. Applications integrate a Kafka client library to read from Apache Kafka. Excellent client libraries exist for almost all programming languages that are popular today including Python, Java, Go, and others.

Kafka consumers implement the pull model, which means that consumers request data from the Kafka brokers (the servers) and then get a response back. The Kafka broker does not push data to the consumers.
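To make the pull model concrete, here is a minimal sketch in plain Python. It does not use a real Kafka client: `ToyBroker`, `ToyConsumer`, `fetch`, and `poll` are hypothetical names invented for this illustration. The point it shows is that the broker only answers requests, while the consumer decides when to ask and from which offset.

```python
class ToyBroker:
    """In-memory stand-in for a broker (hypothetical, not a Kafka API).

    It stores records and only answers fetch requests; it never pushes.
    """

    def __init__(self):
        self._log = []  # one partition; the list index plays the role of the offset

    def append(self, value):
        self._log.append(value)

    def fetch(self, offset, max_records):
        """Return up to max_records (offset, value) pairs starting at offset."""
        end = min(offset + max_records, len(self._log))
        return [(i, self._log[i]) for i in range(offset, end)]


class ToyConsumer:
    """Tracks its own position and asks the broker for more data."""

    def __init__(self, broker):
        self._broker = broker
        self.position = 0  # next offset to request

    def poll(self, max_records=2):
        records = self._broker.fetch(self.position, max_records)
        if records:
            # Advance past the last record we received.
            self.position = records[-1][0] + 1
        return records


broker = ToyBroker()
for word in ["a", "b", "c"]:
    broker.append(word)

consumer = ToyConsumer(broker)
first_batch = consumer.poll()   # the consumer initiates every request
second_batch = consumer.poll()
third_batch = consumer.poll()   # nothing new: an empty response, not an error
print(first_batch, second_batch, third_batch)
```

Because the consumer drives the loop, it can read at its own pace, and a slow consumer simply polls less often.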

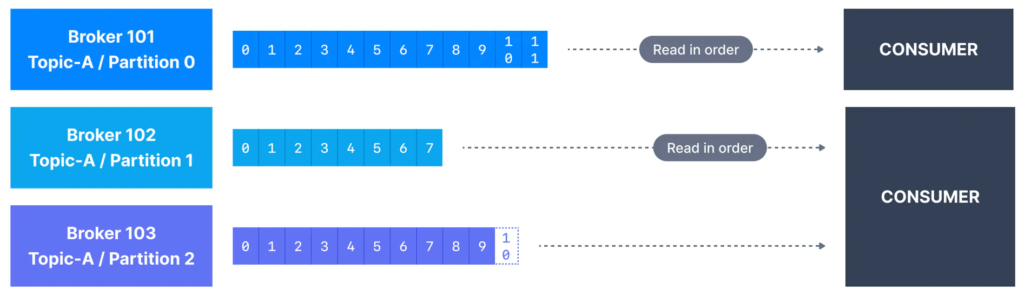

Kafka Consumers can read from one or more partitions at a time in Apache Kafka, and data is read in order within each partition as shown below.

So we have an example with 3 topic partitions that contain data. One consumer may want to read from topic A partition 0. Another consumer may choose to read from more than one topic partition, for example partition 1 and partition 2.

When Kafka consumers need to read data from a partition, they automatically know which broker (which Kafka server) to read from. If a broker fails, the consumers know how to recover from this. The data in each of these partitions is read in order, from lower to higher offset.

So 0, 1, 2, 3, and so on within each partition. The first consumer reads data in order for topic A partition 0, from offset 0 all the way to offset 11. The same holds for consumer 2, which reads data in order for partition 1 and in order for partition 2. But remember, there are no ordering guarantees across partition 1 and partition 2 because they are different partitions. The only ordering we have is within each partition.
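The ordering rule can be sketched with a small toy model. This is plain Python, not a Kafka client: the `partitions` data, the `read_in_rounds` helper, and the round-robin reading order are all assumptions made for illustration (a real consumer's interleaving depends on what the brokers return). It shows that offsets within one partition always come out in increasing order, even when records from different partitions are interleaved.

```python
# Toy data: each partition is an append-only list; the list index is the offset.
partitions = {
    0: ["p0-m0", "p0-m1", "p0-m2"],
    1: ["p1-m0", "p1-m1"],
    2: ["p2-m0", "p2-m1", "p2-m2"],
}


def read_in_rounds(assigned, batch_size=1):
    """Read the assigned partitions round-robin until all are drained.

    Returns records as (partition, offset, value) in the order they were seen.
    """
    positions = {p: 0 for p in assigned}
    seen = []
    while any(positions[p] < len(partitions[p]) for p in assigned):
        for p in assigned:
            start = positions[p]
            batch = partitions[p][start:start + batch_size]
            for i, value in enumerate(batch):
                seen.append((p, start + i, value))
            positions[p] = start + len(batch)
    return seen


def offsets_for(records, partition):
    """Offsets seen for one partition, in the order they were read."""
    return [offset for p, offset, _ in records if p == partition]


consumer_1 = read_in_rounds([0])     # reads a single partition
consumer_2 = read_in_rounds([1, 2])  # reads two partitions, interleaved

print(offsets_for(consumer_1, 0))
print([p for p, _, _ in consumer_2])  # partitions interleave with each other
print(offsets_for(consumer_2, 1), offsets_for(consumer_2, 2))
```

The interleaving between partitions 1 and 2 is arbitrary in this sketch, which is exactly why an application should rely on ordering only within a single partition.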

Kafka Message Deserializers

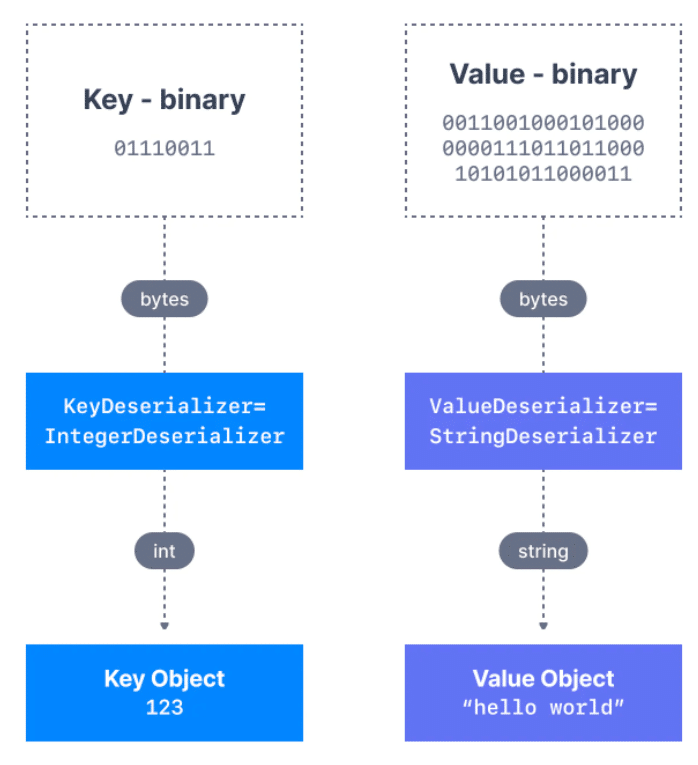

As we have seen before, the data sent by the Kafka producers is serialized. This means that the data received by the Kafka consumers must be correctly deserialized in order to be useful within your application.

When Kafka consumers read messages, they need to transform the bytes they receive from Kafka into objects or data. The key and the value of a Kafka message both arrive in binary format, and we need to transform them into objects that our programming language can use.

So the consumer has to know in advance what the format of the messages is. In this instance, the consumer knows that the key is an integer, and therefore it uses an integer deserializer to transform the key, which is bytes, into an integer. The key object is then back to 123. The same goes for the value: we know that we need a deserializer of type string because this is what we expect to be in this Kafka topic, and therefore this deserializer takes bytes as input and creates a string out of them. So we get back our value object, hello world.
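The round trip for this example can be sketched in plain Python. The helper functions below are hypothetical stand-ins, not a Kafka client API. They assume the layouts used by Kafka's built-in `IntegerSerializer` (a 4-byte big-endian integer) and `StringSerializer` (UTF-8 by default).

```python
import struct


def serialize_int(value: int) -> bytes:
    # 4-byte big-endian signed integer, as assumed for IntegerSerializer
    return struct.pack(">i", value)


def deserialize_int(data: bytes) -> int:
    if len(data) != 4:
        raise ValueError(f"expected 4 bytes for an integer, got {len(data)}")
    return struct.unpack(">i", data)[0]


def serialize_str(value: str) -> bytes:
    # UTF-8 encoding, as assumed for StringSerializer
    return value.encode("utf-8")


def deserialize_str(data: bytes) -> str:
    return data.decode("utf-8")


# Producer side: objects become bytes.
key_bytes = serialize_int(123)
value_bytes = serialize_str("hello world")

# Consumer side: bytes become objects again.
key = deserialize_int(key_bytes)
value = deserialize_str(value_bytes)
print(key_bytes, key, value)
```

Nothing in the bytes themselves says "this is an integer", which is why the consumer has to be configured with the matching deserializer for the key and for the value.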

Deserializers for common types such as strings (including JSON represented as a string), integers, and floats are bundled with Apache Kafka and can be used by Kafka consumers. Deserializers for formats such as Avro and Protobuf are typically provided by additional libraries.

As we can see, there is a serializer on the producer side and a deserializer on the consumer side. Data being consumed must be deserialized in the same format it was serialized in. For example:

- if the producer serialized a `String` using `StringSerializer`, the consumer must deserialize it using `StringDeserializer`
- if the producer serialized an `Integer` using `IntegerSerializer`, the consumer must deserialize it using `IntegerDeserializer`

The serialization and deserialization format of a topic must not change during the topic's lifecycle. If you intend to switch a topic's data format (for example from JSON to Avro), it is considered best practice to create a new topic and migrate your applications to use that new topic.

Failure to correctly deserialize may cause crashes or inconsistent data being fed to the downstream processing applications. This can be tough to debug, so it is best to think about it as you’re writing your code the first time.
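Both failure modes can be sketched in plain Python. The `deserialize_int` helper is a hypothetical stand-in for an integer deserializer, and it assumes a 4-byte big-endian layout. The first case fails loudly. The second is the harder one to debug, because bytes written as a string happen to be exactly 4 bytes long and decode into a meaningless number without any error.

```python
import struct


def deserialize_int(data: bytes) -> int:
    # Assumed layout: 4-byte big-endian signed integer
    if len(data) != 4:
        raise ValueError(f"expected 4 bytes for an integer, got {len(data)}")
    return struct.unpack(">i", data)[0]


# Case 1: loud failure. An 11-byte string cannot be read as a 4-byte integer.
try:
    deserialize_int("hello world".encode("utf-8"))
    loud_failure = None
except ValueError as exc:
    loud_failure = str(exc)

# Case 2: silent corruption. A 4-character ASCII string is also 4 bytes,
# so the integer deserializer accepts it and returns a bogus value.
silent_result = deserialize_int("abcd".encode("utf-8"))

print(loud_failure)
print(silent_result)
```

In the second case the downstream application receives a valid-looking integer, so the mistake only surfaces later as inconsistent data.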