Docker Engine

In this tutorial, we will discuss Docker Engine. Docker Engine is the underlying client-server technology that builds and runs containers using Docker’s components and services.

When people refer to Docker, they usually mean Docker Engine, which comprises the Docker daemon, a REST API, and the CLI that talks to the daemon through that API.

Now we will take a deeper look at Docker's architecture: how it actually runs applications in isolated containers and how it works under the hood.

As we learned earlier, Docker Engine simply refers to a host with Docker installed on it. When you install Docker on a Linux host, you are actually installing three components:

- Docker daemon

- REST API

- Docker CLI

Docker daemon

The Docker daemon is a background process that manages Docker objects such as images, containers, volumes, and networks.

REST API

The Docker REST API is the interface that programs use to talk to the daemon and give it instructions. You can create your own tools using this REST API.
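As a rough illustration of building your own tool on the REST API, the Python sketch below sends a GET request to the daemon over its Unix socket. It assumes the daemon listens on the default Linux socket path `/var/run/docker.sock` and that the current user is allowed to access it. The names `UnixHTTPConnection` and `docker_get` are made up for this example.

```python
import http.client
import json
import socket

# Assumption: default socket path of a local Docker daemon on Linux.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # The host name here is only used for the HTTP Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path, socket_path=DOCKER_SOCKET):
    """Send GET <path> to the daemon and return (status, decoded JSON body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()
```

On a host with a running daemon, `docker_get("/version")` should return the daemon's version details, and `docker_get("/containers/json")` should list the running containers.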

Docker CLI

The Docker CLI is the command line interface that we have been using until now to perform actions such as running and stopping containers or creating and destroying images.

It uses the REST API to interact with the Docker daemon.

Something to note here is that the Docker CLI does not have to be on the same host as the daemon. It could be on another system, such as a laptop, and still work with a remote Docker Engine.

Simply use the -H option on the docker command and specify the remote Docker Engine's address and port, as shown below. This works only if the remote daemon has been configured to listen on that TCP address.

$ docker -H=192.168.56.125:2375 run nginx
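The same remote access can be sketched in code. The Python snippet below, an illustration rather than a recommended setup, calls the Engine API's `/_ping` endpoint on a remote daemon over plain TCP. It assumes the daemon was configured to listen on that address and port, and `ping_daemon` is a hypothetical helper name. Port 2375 is conventionally unencrypted and unauthenticated, so this only makes sense on a trusted network.

```python
import http.client


def ping_daemon(host, port=2375, timeout=5):
    """Return True if the Docker daemon at host:port answers GET /_ping with 200."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", "/_ping")
        response = conn.getresponse()
        response.read()  # drain the short response body
        return response.status == 200
    finally:
        conn.close()
```

Calling `ping_daemon("192.168.56.125")` would target the same engine as the command above.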

Containerization

Now let's try to understand how exactly applications are containerized in Docker. How does it work under the hood?

Docker uses namespaces to isolate a container's workspace. Process IDs, networking, inter-process communication, mounts, and Unix Timesharing System (UTS) resources are each created in their own namespace, thereby providing isolation between containers.
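To make this concrete: on Linux, the kernel exposes the namespaces a process belongs to as symbolic links under `/proc/<pid>/ns`. The following Python sketch reads them. `list_namespaces` is an illustrative name, and the function returns an empty dictionary where that directory is missing (for example, on non-Linux systems).

```python
import os


def list_namespaces(pid="self"):
    """Map namespace name -> identifier, e.g. 'pid' -> 'pid:[4026531836]'."""
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    if not os.path.isdir(ns_dir):
        return namespaces  # not Linux, or no such process
    for name in sorted(os.listdir(ns_dir)):
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Inspecting another user's process may need extra privileges.
            continue
    return namespaces


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:20} {ident}")
```

Two processes that share a namespace show the same identifier for it, while a process inside a container shows different identifiers for the namespaces it does not share with the host.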

Namespace

Let's take a look at one of these isolation techniques: the process ID (PID) namespace.

Whenever a Linux system boots up, it starts with just one process, which has a process ID of 1. This is the root process, and it kicks off all the other processes in the system.

By the time the system boots up completely, we have a handful of processes running. This can be seen by running the ps command to list all the running processes.

Process IDs are unique: two processes cannot have the same process ID.

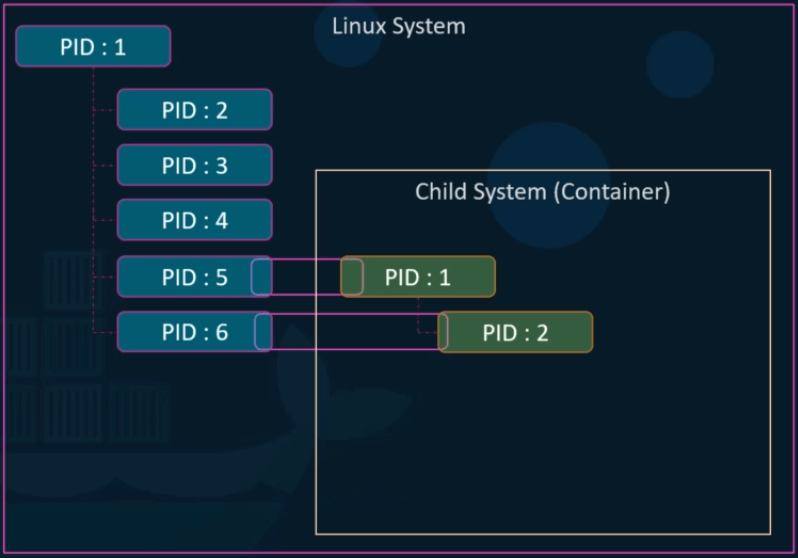

Now suppose we create a container, which is basically a child system within the current system. The child system needs to think that it is an independent system with its own set of processes originating from a root process with a process ID of 1. But we know that there is no hard isolation between the containers and the underlying host.

The processes running inside the container are in fact processes running on the underlying host, and two processes on that host cannot both have a process ID of 1. This is where namespaces come into play.

With process ID namespaces, each process can have multiple process IDs associated with it. For example, when processes start in the container, they are actually just another set of processes on the base Linux system, and they get the next available process IDs there: in this case, 5 and 6.

However, they also get another process ID, starting with PID 1, in the container namespace, which is only visible inside the container. So the container thinks that it has its own root process tree and is therefore an independent system.
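On reasonably recent Linux kernels, this double bookkeeping is visible in the `NSpid` field of `/proc/<pid>/status`, which lists a process's ID in each nested PID namespace, outermost first. The Python sketch below parses that field. The function names and the sample text are illustrative, not taken from a real system.

```python
def parse_nspid(status_text):
    """Return the PIDs from the NSpid line of /proc/<pid>/status, outermost first."""
    for line in status_text.splitlines():
        if line.startswith("NSpid:"):
            return [int(field) for field in line.split()[1:]]
    return []  # field absent (older kernel or non-Linux system)


def read_nspid(pid):
    """Read and parse NSpid for a live process (Linux only)."""
    with open(f"/proc/{pid}/status") as status_file:
        return parse_nspid(status_file.read())


# Hypothetical status excerpt for a containerized process, as seen from the host.
sample = "Name:\tnginx\nPid:\t5\nNSpid:\t5\t1\n"
print(parse_nspid(sample))  # [5, 1]: PID 5 on the host, PID 1 in the container
```

For a process that is not in a nested PID namespace, the list has a single entry.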

So how does that relate to an actual system? How do you see this on a host?

Simple example

Let’s say I run an nginx server as a container. We know that the nginx container runs an nginx service.

If we list all the processes inside the Docker container, we see the nginx service running with a process ID of 1. This is the process ID of the service inside the container namespace.

If we list the processes on the Docker host, we see the same service but with a different process ID. This shows that all processes are in fact running on the same host, separated into their own containers using namespaces.
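One way to check this from a script: `docker inspect` can report the host-side process ID of a container's main process through the `.State.Pid` field. The Python sketch below wraps that call. The container name `web` and the helper names are assumptions for illustration, and the `run` parameter exists only so the function can be exercised without Docker.

```python
import subprocess


def inspect_pid_command(container):
    """Build the docker CLI command that prints the container's host-side PID."""
    return ["docker", "inspect", "--format", "{{.State.Pid}}", container]


def host_pid(container, run=subprocess.run):
    """Return the PID, as seen on the host, of the container's PID 1."""
    result = run(
        inspect_pid_command(container),
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout.strip())
```

For a container started with `docker run -d --name web nginx`, `host_pid("web")` would return a number other than 1, while the same nginx process reports PID 1 inside the container.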

cgroups

We have learned that the underlying Docker host and the containers share the same system resources, such as CPU and memory.

So how much of these resources is dedicated to the host and how much to the containers, and how does Docker manage and share them between containers?

By default, there is no restriction as to how much of a resource a container can use and hence a container may end up utilizing all of the resources on the underlying host.

But there is a way to restrict the amount of CPU or memory a container can use. Docker uses cgroups or control groups to restrict the amount of hardware resources allocated to each container.

This can be done by providing the --cpus option to the docker run command. A value of .5 ensures that the container never uses more than the equivalent of half of one CPU at any given time (that is, 50% of the host CPU on a single-CPU host).

$ docker run --cpus=.5 nginx
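Under the hood, a fractional CPU limit maps onto the kernel's CFS bandwidth controls: a quota of CPU time per scheduling period. The Python sketch below shows the arithmetic, assuming the default period of 100000 microseconds. `cpus_to_cfs_quota` is an illustrative name, not a Docker API.

```python
DEFAULT_PERIOD_US = 100_000  # assumed default CFS period, in microseconds


def cpus_to_cfs_quota(cpus, period_us=DEFAULT_PERIOD_US):
    """Return (quota_us, period_us) corresponding to a --cpus value."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return round(cpus * period_us), period_us


print(cpus_to_cfs_quota(0.5))  # (50000, 100000)
```

In other words, `--cpus=.5` lets the container's processes run for at most 50 ms out of every 100 ms, which corresponds to passing `--cpu-period=100000 --cpu-quota=50000`.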

The same goes for memory. Setting the --memory option to 200m limits the amount of memory the container can use to 200 megabytes.

$ docker run --memory=200m nginx
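Docker interprets the size suffix in binary units, so `200m` means 200 × 1024 × 1024 bytes. The simplified Python parser below illustrates the conversion. It handles only the `b`, `k`, `m`, and `g` suffixes and is a sketch, not Docker's own parsing code.

```python
UNIT_FACTORS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def memory_to_bytes(value):
    """Convert a size such as '200m' to bytes, using binary multiples."""
    text = value.strip().lower()
    if text and text[-1] in UNIT_FACTORS:
        number, factor = text[:-1], UNIT_FACTORS[text[-1]]
    else:
        number, factor = text, 1  # a bare number is taken as bytes
    return int(float(number) * factor)


print(memory_to_bytes("200m"))  # 209715200
```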