HBase Architecture

HBase is an open-source, column-oriented, distributed database that runs in the Hadoop environment. Apache HBase is used for real-time Big Data applications. HBase tables can consist of billions of rows and millions of columns. HBase is built for low-latency operations and has some specific features that distinguish it from traditional relational models.

Storage Mechanism in HBase

HBase is a column-oriented database, and data is stored in tables. The tables are sorted by RowId (the row key). As shown below, each row is identified by its RowId and holds the column families that are present in the table. The data in the column families declared in the schema is stored as key-value pairs. Looking more closely, each column family has multiple columns. The column values are stored on disk. Each cell of the table has its own metadata, such as a timestamp and other information. The following are the key terms of the table schema:

- Table: Collection of rows.

- Row: Collection of column families.

- Column Family: Collection of columns.

- Column: Collection of key-value pairs.

- Namespace: Logical grouping of tables.

- Cell: A {row, column, version} tuple exactly specifies a cell definition in HBase.

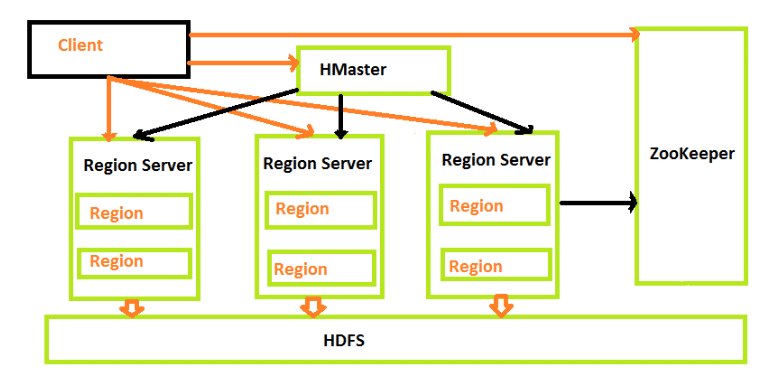
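The schema terms above can be pictured as a nested map. The following is a minimal sketch in plain Python, not the HBase client API: `ToyTable` and its methods are hypothetical names chosen for illustration, and the example assumes that a read without an explicit version returns the newest one.

```python
from collections import defaultdict


class ToyTable:
    """Illustrative model: row key -> column family -> column -> {timestamp: value}."""

    def __init__(self, families):
        # Column families are fixed when the table is defined.
        self.families = set(families)
        self.rows = defaultdict(lambda: defaultdict(lambda: defaultdict(dict)))

    def put(self, row, family, column, value, timestamp):
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        # A cell is addressed by (row, family:column, timestamp).
        self.rows[row][family][column][timestamp] = value

    def get(self, row, family, column, timestamp=None):
        versions = self.rows.get(row, {}).get(family, {}).get(column, {})
        if not versions:
            return None
        if timestamp is None:
            timestamp = max(versions)  # assumption: newest version by default
        return versions.get(timestamp)

    def scan(self):
        # Rows come back in sorted row-key order.
        return sorted(self.rows)


table = ToyTable(families=["info", "stats"])
table.put("user#002", "info", "name", "Bea", timestamp=1)
table.put("user#001", "info", "name", "Al", timestamp=1)
table.put("user#001", "info", "name", "Alan", timestamp=2)

print(table.get("user#001", "info", "name"))     # newest version of the cell
print(table.get("user#001", "info", "name", 1))  # an explicit older version
print(table.scan())                              # row keys in sorted order
```

The nesting mirrors the term list: a table holds rows, a row holds column families, a family holds columns, and each column holds versioned values.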

Apache HBase architecture consists mainly of four components:

- HMaster

- HRegionServer

- HRegions

- ZooKeeper

HMaster

HMaster is the implementation of the Master server in the HBase architecture. It acts as a monitoring agent for all Region Server instances in the cluster and as the interface for all metadata changes. In a distributed cluster environment, the Master typically runs on the NameNode host. The Master runs several background threads.

Roles of HMaster

- HMaster assigns regions to region servers and relies on Apache ZooKeeper for this task.

- HMaster provides administrative functions and distributes services across the region servers.

- HMaster plays a vital role in cluster performance and in maintaining the nodes in the cluster.

- HMaster provides features such as load balancing and failover to manage the load across the nodes in the cluster.

- When a client wants to change the schema or perform any metadata operation, HMaster takes responsibility for these operations.

- HMaster maintains the state of the cluster by negotiating load balancing.

The client communicates bi-directionally with both HMaster and ZooKeeper. For read and write operations, it contacts the HRegion servers directly. HMaster assigns regions to region servers and, in turn, checks the health status of the region servers. The architecture as a whole contains multiple region servers. Each region server has an HLog (the write-ahead log), which stores all the log files.
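Region assignment and failover can be illustrated with a small toy model. This is only a sketch under simple assumptions: `ToyMaster` is a hypothetical class, "load" is just the number of regions per server, and ties are broken by server name. HBase's actual balancer is more sophisticated.

```python
class ToyMaster:
    """Illustrative master that assigns regions to the least-loaded server."""

    def __init__(self, servers):
        self.assignments = {server: [] for server in servers}

    def assign(self, region):
        # Pick the server hosting the fewest regions; ties break by name.
        target = min(self.assignments,
                     key=lambda s: (len(self.assignments[s]), s))
        self.assignments[target].append(region)
        return target

    def handle_failure(self, server):
        # Failover: hand the failed server's regions to the survivors.
        orphaned = self.assignments.pop(server)
        for region in orphaned:
            self.assign(region)


master = ToyMaster(["rs1", "rs2", "rs3"])
for region in ["r1", "r2", "r3", "r4", "r5", "r6"]:
    master.assign(region)

master.handle_failure("rs3")
print(master.assignments)  # the six regions are now spread over rs1 and rs2
```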

HRegion Servers

When a Region Server receives write and read requests from the client, it assigns each request to the specific region where the relevant column family resides. The client can contact HRegion servers directly; it does not need HMaster's permission to communicate with them. The client requires HMaster's help only for operations related to metadata and schema changes.

HRegionServer is the Region Server implementation. It is responsible for serving and managing the regions, that is, the data present in the distributed cluster. The region servers run on the DataNodes of the Hadoop cluster. HMaster can be in contact with multiple HRegion servers, each of which performs the following functions:

- Hosting and managing regions

- Splitting regions automatically

- Handling read and write requests

- Communicating with the client directly
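How a region server might route a request to the right region can be sketched as a lookup over sorted region start keys. The example assumes each region covers a contiguous range of row keys. `ToyRegionServer` and the split keys `"g"` and `"p"` are hypothetical and not part of HBase.

```python
import bisect


class ToyRegionServer:
    """Illustrative server hosting regions that each cover a row-key range."""

    def __init__(self, split_keys):
        # The first region starts at the empty key; every split key
        # is the start key of the next region.
        self.start_keys = [""] + sorted(split_keys)
        self.regions = [dict() for _ in self.start_keys]

    def region_index(self, row_key):
        # Rightmost region whose start key is <= row_key.
        return bisect.bisect_right(self.start_keys, row_key) - 1

    def put(self, row_key, value):
        self.regions[self.region_index(row_key)][row_key] = value

    def get(self, row_key):
        return self.regions[self.region_index(row_key)].get(row_key)


server = ToyRegionServer(split_keys=["g", "p"])
server.put("apple", 1)
server.put("grape", 2)
server.put("zebra", 3)

print(server.region_index("apple"))  # first region:  keys before "g"
print(server.region_index("grape"))  # second region: keys from "g" up to "p"
print(server.region_index("zebra"))  # third region:  keys from "p" onward
```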

HRegions

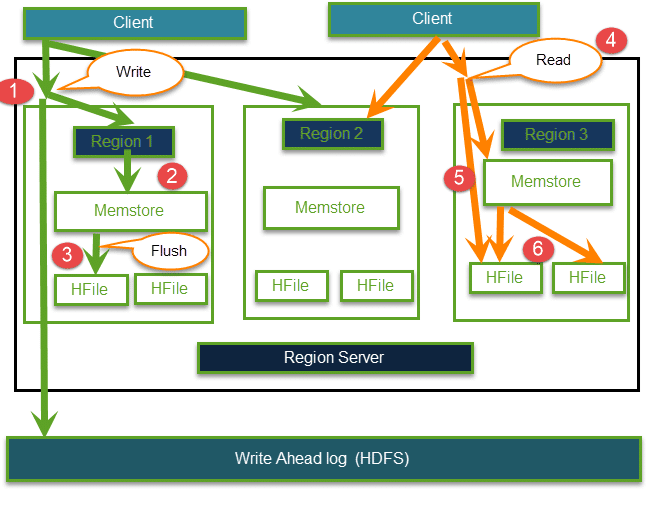

HRegions are the basic building blocks of an HBase cluster. Tables are distributed across regions, and each region is made up of column families. A region contains multiple stores, one for each column family. Each store consists mainly of two components: MemStore and HFile.

Data flow in HBase

The following figure depicts the data flow in HBase.

Write and Read operations

- To write data, the client first communicates with the Region server and then with the region.

- The region contacts the MemStore associated with the column family to store the data.

- Data is first stored in the MemStore, where it is sorted, and is then flushed to an HFile. The main reason for using the MemStore is that data must be stored in the distributed file system sorted by row key. The MemStore resides in the Region server's main memory, while HFiles are written to HDFS.

- To read data, the client contacts the region.

- The read is first attempted against the MemStore, which holds the most recently written data.

- If the data is not found there, it is fetched from the HFiles and returned to the client.
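The write and read steps can be sketched with a toy store for a single column family. This is a simplified illustration, not HBase code: `ToyStore` is a hypothetical class, the flush is triggered by an entry count rather than by memory size, and each "HFile" is just a sorted in-memory list.

```python
class ToyStore:
    """Illustrative store: an in-memory MemStore plus immutable sorted 'HFiles'."""

    def __init__(self, flush_threshold=3):
        self.flush_threshold = flush_threshold  # assumption: count-based flush
        self.memstore = {}
        self.hfiles = []  # oldest first; each is a sorted list of (row_key, value)

    def put(self, row_key, value):
        # Writes land in the MemStore first.
        self.memstore[row_key] = value
        if len(self.memstore) >= self.flush_threshold:
            self.flush()

    def flush(self):
        # The MemStore contents are written out sorted by row key.
        if self.memstore:
            self.hfiles.append(sorted(self.memstore.items()))
            self.memstore = {}

    def get(self, row_key):
        # Reads check the MemStore first, then the HFiles from newest to oldest.
        if row_key in self.memstore:
            return self.memstore[row_key]
        for hfile in reversed(self.hfiles):
            for key, value in hfile:
                if key == row_key:
                    return value
        return None


store = ToyStore(flush_threshold=2)
store.put("row-b", "v1")
store.put("row-a", "v2")   # second write reaches the threshold and flushes
store.put("row-b", "v3")   # newer value for row-b stays in the MemStore

print(store.hfiles)        # one flushed file, sorted by row key
print(store.get("row-b"))  # served from the MemStore
print(store.get("row-a"))  # served from the flushed file
```

Because flushed files are never modified in this sketch, a newer value for the same row key simply shadows the older one at read time.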

ZooKeeper

In HBase, ZooKeeper is a centralized monitoring server that maintains configuration information and provides distributed synchronization. Distributed synchronization means coordinating access to the distributed applications running across the cluster by providing coordination services between nodes. If a client wants to communicate with region servers, it has to approach ZooKeeper first. ZooKeeper is an open-source project that provides many important services.

ZooKeeper Services

- ZooKeeper maintains configuration information

- It provides distributed synchronization

- It establishes client communication with region servers

- It tracks server failures and network partitions

- ZooKeeper provides ephemeral nodes, which represent the different region servers

- Master servers use the ephemeral nodes to discover available servers in the cluster

The Master and the HBase slave nodes (region servers) register themselves with ZooKeeper. The client needs access to the ZooKeeper quorum configuration to connect with the master and region servers. When nodes in the HBase cluster fail, the ZooKeeper quorum triggers error messages, and repair of the failed nodes begins.
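The idea of ephemeral nodes can be sketched with a small in-memory registry. This is a toy model and not the ZooKeeper client API: `ToyCoordinator`, the session ids, and the paths are hypothetical. It relies on one property of ZooKeeper: an ephemeral node disappears when the session that created it ends.

```python
class ToyCoordinator:
    """Illustrative registry of ephemeral nodes tied to client sessions."""

    def __init__(self):
        self.ephemeral = {}  # node path -> owning session id
        self.watchers = []   # callbacks invoked with the path of a removed node

    def register(self, session_id, path):
        # A region server announces itself by creating an ephemeral node.
        self.ephemeral[path] = session_id

    def watch(self, callback):
        # The master registers interest in node removals.
        self.watchers.append(callback)

    def expire_session(self, session_id):
        # When a session ends, all of its ephemeral nodes are removed
        # and every watcher is notified.
        lost = sorted(p for p, s in self.ephemeral.items() if s == session_id)
        for path in lost:
            del self.ephemeral[path]
            for callback in self.watchers:
                callback(path)

    def live_servers(self):
        return sorted(self.ephemeral)


zk = ToyCoordinator()
failed = []
zk.watch(failed.append)  # stand-in for the master reacting to a failure

zk.register("session-1", "/hbase/rs/rs1")
zk.register("session-2", "/hbase/rs/rs2")
zk.expire_session("session-1")  # rs1 crashes or is partitioned away

print(zk.live_servers())  # only rs2 remains registered
print(failed)             # the watcher was told about rs1
```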

HDFS

HDFS is the Hadoop Distributed File System. As the name implies, it provides a distributed environment for storage, and it is designed to run on commodity hardware. It splits each file into multiple blocks and, to maintain fault tolerance, replicates the blocks across the Hadoop cluster. HDFS thus provides a high degree of fault tolerance while running on cheap commodity hardware. Adding nodes to the cluster, and using cheap commodity hardware for both processing and storage, gives the client better results than the existing setup.

By default, each block is replicated to 3 nodes, so if any one node goes down, no data is lost; HDFS has a proper backup and recovery mechanism. HDFS interacts with the HBase components and stores large amounts of data in a distributed manner.
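The effect of block replication can be checked with a short sketch. The round-robin placement below is an assumption made for illustration (it is not HDFS's actual placement policy), and `place_blocks` and `surviving_replicas` are hypothetical helper names.

```python
def place_blocks(file_size, block_size, nodes, replication=3):
    """Split a file into blocks and assign each block to `replication` nodes."""
    if replication > len(nodes):
        raise ValueError("not enough nodes for the requested replication")
    num_blocks = -(-file_size // block_size)  # ceiling division
    placement = {}
    for block in range(num_blocks):
        # Assumption: simple round-robin placement over the node list.
        placement[block] = [nodes[(block + i) % len(nodes)]
                            for i in range(replication)]
    return placement


def surviving_replicas(placement, failed_node):
    """Return the replicas of each block that remain after one node fails."""
    return {block: [n for n in replicas if n != failed_node]
            for block, replicas in placement.items()}


nodes = ["n1", "n2", "n3", "n4"]
placement = place_blocks(file_size=300, block_size=128, nodes=nodes)
after_failure = surviving_replicas(placement, failed_node="n3")

print(placement)      # three blocks, each on three distinct nodes
print(after_failure)  # every block still has at least one replica
```

With a replication factor of 3, losing a single node still leaves at least two copies of every block in this model.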