Microservices Data Management

In this tutorial, we discuss microservices data management and the data considerations that come with a microservices architecture. We will use these patterns and practices when designing an e-commerce microservices architecture.

Microservices data management is an important topic: because we are working with distributed systems, we need a strategy for handling data that is spread across several distributed servers.

Every microservice has its own data, so data integrity and data consistency should be considered very carefully.

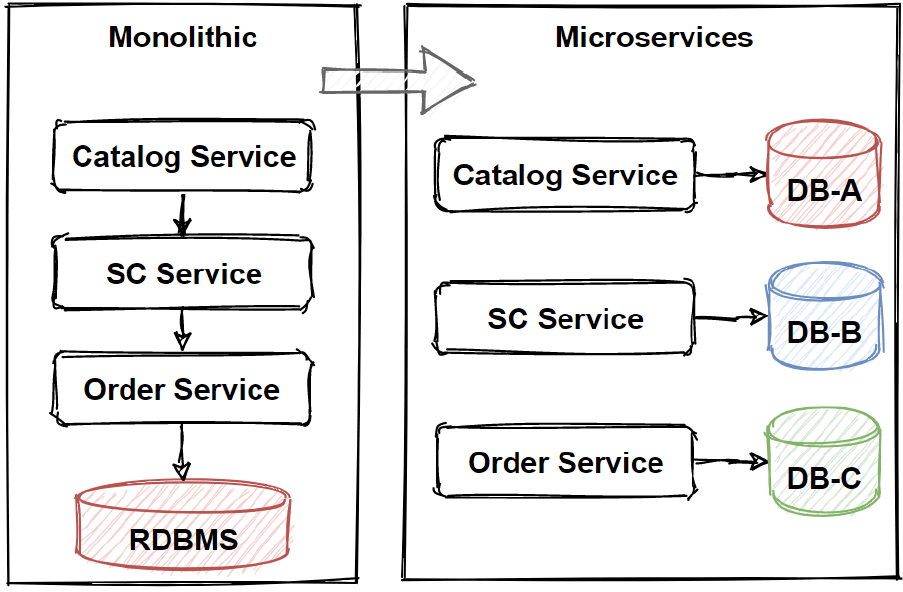

The main principle of microservices data management is that each microservice should manage its own data. We call this principle database-per-service. It means that microservices should not share databases with each other. Instead, each service should be responsible for its own database, which other services cannot access directly. Services should share data with each other only through their APIs, for example REST APIs.

The reason for this isolation is to break unnecessary dependencies between microservices. If the database schema of one microservice is updated, the update should not directly affect other microservices; ideally, other microservices should not even be aware of the change. By isolating each service’s database, we limit the scope of the changes required when a database schema changes.
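The isolation described above can be sketched in a few lines. This is a minimal in-process illustration, not a real deployment: the names `CatalogService`, `OrderingService`, and `get_product` are hypothetical, a plain dictionary stands in for each service's database, and a method call stands in for a REST request.

```python
class CatalogService:
    """Owns product data. Its storage is private to this service."""

    def __init__(self) -> None:
        # Private store; stands in for the catalog service's own database.
        # The internal column names are deliberately different from the API.
        self._products = {"p1": {"title": "Keyboard", "price_cents": 4500}}

    def get_product(self, product_id: str) -> dict:
        """Public contract (hypothetical stand-in for GET /products/{id})."""
        row = self._products[product_id]
        # Map the internal schema to the stable public representation.
        return {
            "id": product_id,
            "name": row["title"],
            "price": row["price_cents"] / 100,
        }


class OrderingService:
    """Owns order data and reads product data only through the catalog API."""

    def __init__(self, catalog: CatalogService) -> None:
        self._catalog = catalog
        # Private store; stands in for the ordering service's own database.
        self._orders = []

    def place_order(self, product_id: str, quantity: int) -> dict:
        # No direct access to the catalog's storage, only to its API.
        product = self._catalog.get_product(product_id)
        order = {"product": product["name"], "total": product["price"] * quantity}
        self._orders.append(order)
        return order


catalog = CatalogService()
ordering = OrderingService(catalog)
order = ordering.place_order("p1", 2)
```

Because the ordering service depends only on the shape returned by `get_product`, the catalog service could rename `title` or change how it stores prices without the ordering service noticing, as long as the public representation stays the same.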

This approach also lets us pick a different database for each microservice, choosing whichever database is the best fit for that service. We call this the “polyglot persistence” principle.

Polyglot Persistence Microservices

Of course, polyglot persistence and separate databases come with some serious challenges.

Since we are working with distributed systems, managing data requires a strategy. The main challenge is that duplicated or partitioned data can cause problems with data integrity and data consistency.

As you may remember, we looked at two data consistency levels: strict consistency and eventual consistency. We should decide which consistency level we need before designing polyglot databases for our microservices.

Traditional data modeling in monolithic applications uses one big database, and every entity table is included in a single database schema.

With this approach, data does not need to be duplicated, and strict database transaction management prevents data consistency problems.

In a microservices architecture, however, we have to accept duplicated data and eventual consistency for our entity tables.

So how can we accept duplicated and inconsistent data?

First of all, we should accept eventual consistency in our microservices wherever possible. We should define our consistency requirements before designing the system, and where we need strong consistency and ACID transactions, we should follow traditional approaches. Outside of those specific requirements, we should try to use eventual consistency in microservices.

Another way to accept these trade-offs is to use an event-driven architecture style. With an event-driven architecture, a microservice publishes an event whenever its data model changes, and subscriber microservices consume and process those events afterward, following an eventual consistency model.
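The publish and subscribe flow described above can be sketched as follows. This is a simplified illustration under stated assumptions: `EventBus` is a hypothetical in-memory stand-in for a real message broker, and the event name `ProductPriceChanged` and its fields are invented for the example. Events are queued and delivered later, which is what makes the subscriber's copy of the data eventually consistent rather than immediately consistent.

```python
from collections import defaultdict


class EventBus:
    """Hypothetical in-memory stand-in for a message broker."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)  # event type -> list of handlers
        self._queue = []                       # events published but not yet delivered

    def subscribe(self, event_type, handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload) -> None:
        # Publishing only enqueues the event; delivery happens later.
        self._queue.append((event_type, payload))

    def deliver_pending(self) -> None:
        # Deliver queued events to every subscriber in publication order.
        while self._queue:
            event_type, payload = self._queue.pop(0)
            for handler in self._subscribers[event_type]:
                handler(payload)


bus = EventBus()

# The basket service keeps its own duplicated copy of product prices.
basket_prices = {"p1": 45.0}


def on_price_changed(event: dict) -> None:
    basket_prices[event["product_id"]] = event["new_price"]


bus.subscribe("ProductPriceChanged", on_price_changed)

# The catalog service changes a price in its own database and publishes an event.
bus.publish("ProductPriceChanged", {"product_id": "p1", "new_price": 39.0})

stale_price = basket_prices["p1"]  # the basket copy is still the old value here
bus.deliver_pending()
fresh_price = basket_prices["p1"]  # the basket copy has now caught up
```

Between `publish` and `deliver_pending`, the two services disagree about the price. That window is the inconsistency we accept in exchange for keeping the services decoupled.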

For example, a consumer microservice can subscribe to events in order to build a materialized view database that is better suited to querying data. Separating databases in this way lets each microservice scale independently and avoids having one bottleneck database that becomes a single point of failure.
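As a rough sketch of such a materialized view, the consumer below folds a stream of events into a query-friendly summary per customer. The class name `CustomerOrderSummaryView`, the `OrderPlaced` event, and its fields are assumptions made for illustration, and a dictionary stands in for the view's own database.

```python
class CustomerOrderSummaryView:
    """Read model built from events; stands in for a materialized view database."""

    def __init__(self) -> None:
        self._rows = {}  # customer id -> precomputed summary row

    def apply(self, event: dict) -> None:
        # Only events that affect this view are processed; others are ignored.
        if event["type"] != "OrderPlaced":
            return
        row = self._rows.setdefault(
            event["customer_id"], {"order_count": 0, "total_spent_cents": 0}
        )
        row["order_count"] += 1
        row["total_spent_cents"] += event["amount_cents"]

    def get(self, customer_id: str) -> dict:
        # Queries read the precomputed row; no joins or aggregation at read time.
        return dict(self._rows.get(customer_id, {"order_count": 0, "total_spent_cents": 0}))


# Hypothetical events, as they might arrive from the ordering service.
events = [
    {"type": "OrderPlaced", "customer_id": "c1", "amount_cents": 9000},
    {"type": "OrderPlaced", "customer_id": "c2", "amount_cents": 2500},
    {"type": "OrderPlaced", "customer_id": "c1", "amount_cents": 1500},
]

view = CustomerOrderSummaryView()
for event in events:
    view.apply(event)

summary = view.get("c1")
```

The view duplicates data that the ordering service already owns, but it is shaped for one specific query, so reads stay cheap and never touch the ordering service's database.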

We should therefore evolve our architecture by applying different microservices data patterns, in order to accommodate business changes, achieve a faster time-to-market, and handle larger request volumes.