Placement Groups

In this tutorial, we explore the different types of EC2 placement groups offered by AWS. Placement groups are a way to control how EC2 instances are placed on the underlying hardware within an AWS Region or Availability Zone.

Placement Groups are particularly useful when you need high availability, low-latency network performance, or the ability to run tightly-coupled compute clusters.

By default, when you launch several EC2 instances, EC2 tries to spread them across the underlying hardware to minimize correlated failures, which improves availability. However, this can be a problem when instances need low-latency communication. Imagine a group of instances that need constant low-latency, high-throughput communication to serve users, with each instance deployed on different physical infrastructure in an AZ. To address such cases, Amazon EC2 offers placement groups.

A placement group lets you control how interdependent instances are deployed across the underlying infrastructure, so that the placement matches the workload requirements. AWS offers three types of placement groups: Cluster, Spread, and Partition. Each has unique benefits depending on your application’s performance and availability requirements.

Let’s take a deeper look into different placement strategies and their benefits.

Types of placement groups

EC2 offers different placement groups to cater to various application needs related to performance, availability, and fault tolerance. Each placement group offers flexibility to arrange instances as per the needs. Let’s take a look at them in detail.

1. Cluster placement group

The cluster placement group is a logical grouping of interrelated instances within a single Availability Zone, designed to achieve the highest throughput and lowest latency possible between them. Instances in a cluster placement group may belong to a single VPC or to peered VPCs in the same Region.

The cluster placement group is recommended for applications that require high-speed throughput of up to 25 Gbps between instances and up to 5 Gbps to the internet or over AWS Direct Connect. Complex computational problems, live streaming, cosmology models, and network-bound applications that run on HPC instance types are a good fit for this arrangement.

Note that not all instance types can be used in this arrangement; for example, instance families like T2, Mac1, and M7i-flex are not compatible with cluster placement groups. The maximum throughput between two instances is limited by the slower of the two. We can launch different instance types in the same cluster placement group; however, it is recommended to use instances that support enhanced networking, as they offer up to 10 Gbps for single-flow traffic.

- Purpose: Provides low-latency and high-throughput connectivity between instances by packing them close together inside a single Availability Zone.

- Best For: Applications that require high-performance computing (HPC), massive data processing tasks, or applications that need high network throughput, like machine learning, analytics, or high-speed trading.

- Characteristics:

- Low Latency, High Throughput: Instances in a cluster placement group are physically close to each other, allowing high-speed communication between them.

- Instance Types: Only certain instance types (like compute-optimized, GPU, or high memory) are recommended for cluster placement groups.

- Single AZ: Instances within a cluster placement group must be in the same Availability Zone, which can increase vulnerability to zone-level failures.
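To make the cluster arrangement concrete, here is a minimal Python sketch that builds the arguments for the EC2 `CreatePlacementGroup` and `RunInstances` calls as they are exposed by boto3 (`create_placement_group` and `run_instances`). The group name, AMI ID, and instance type in the usage note are hypothetical placeholders, and the helper function names are our own. The boto3 import is kept inside `create_and_launch` so that the parameter builders can be tried without AWS credentials.

```python
def cluster_group_params(name):
    """Keyword arguments for EC2 CreatePlacementGroup using the cluster strategy."""
    return {"GroupName": name, "Strategy": "cluster"}


def launch_params(group_name, image_id, instance_type, count):
    """Keyword arguments for EC2 RunInstances that target a placement group.

    MinCount equals MaxCount so that the whole set is requested in one
    launch call: either all instances are placed together or none are.
    """
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "MinCount": count,
        "MaxCount": count,
        "Placement": {"GroupName": group_name},
    }


def create_and_launch(group_name, image_id, instance_type, count):
    """Create the group and launch into it (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily; only required for the real API calls

    ec2 = boto3.client("ec2")
    ec2.create_placement_group(**cluster_group_params(group_name))
    return ec2.run_instances(
        **launch_params(group_name, image_id, instance_type, count)
    )
```

For example, `launch_params("hpc-demo", "ami-12345678", "c5n.18xlarge", 4)` returns a request for four instances of one type in the hypothetical group `hpc-demo`. Using a single launch request with one instance type is a reasonable default here, since the throughput between two instances is limited by the slower of the two.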

2. Partition placement group

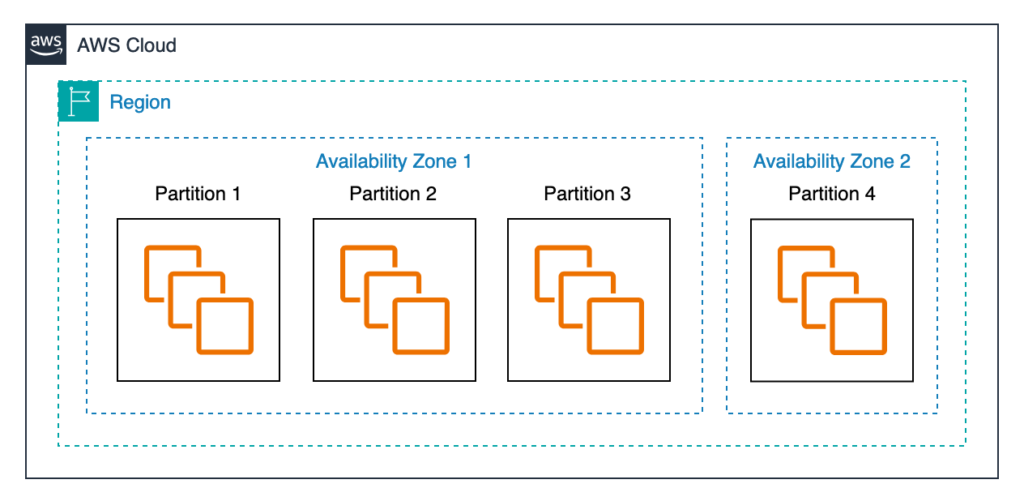

The cluster placement group launches interconnected instances in close physical proximity on the same infrastructure, so a correlated hardware failure can take down several instances at once and, with them, the application. A partition placement group logically divides the group into partitions, and each partition has its own set of racks. Each rack has its own network and power source, so no two partitions share racks and a hardware failure is contained within a single partition. A partition placement group can have partitions in different Availability Zones within the same Region.

Partition placement groups are used for large distributed and replicated workloads, such as big data stores. The partition placement group lets us see which instances are in each partition, so topology-aware applications such as Hadoop or Cassandra can use that information to place data replicas properly.

A partition placement group can have up to seven partitions per AZ. EC2 tries to distribute instances evenly across all the partitions; however, it does not guarantee an even distribution. A partition placement group with Dedicated Instances can have at most two partitions. Also, Capacity Reservations do not reserve capacity in a partition placement group.

- Purpose: Offers a balance between fault tolerance and low-latency, especially for distributed or large-scale workloads that require distinct fault boundaries.

- Best For: Big data applications like Hadoop, Cassandra, or HBase, which can tolerate partial failures but benefit from fault isolation between partitions.

- Characteristics:

- Partitions: Divides instances into partitions (up to 7 per Availability Zone), with each partition isolated from others at the hardware level.

- Large Scale: Supports hundreds of instances within each group.

- Fault Isolation: Each partition is isolated from others in terms of hardware failure, so if one partition fails, others are unaffected.
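The partition limits described above can be checked before calling the API. The following Python sketch builds arguments for boto3's `create_placement_group` with the partition strategy and a `Placement` value for `run_instances`. The helper names are our own, and the round-robin helper assumes partitions are numbered starting from 1; treat that numbering as an assumption to verify against the EC2 API reference.

```python
MAX_PARTITIONS_PER_AZ = 7      # limit stated in this tutorial
MAX_PARTITIONS_DEDICATED = 2   # limit for groups with Dedicated Instances


def partition_group_params(name, partition_count, dedicated=False):
    """Keyword arguments for CreatePlacementGroup with the partition strategy."""
    limit = MAX_PARTITIONS_DEDICATED if dedicated else MAX_PARTITIONS_PER_AZ
    if not 1 <= partition_count <= limit:
        raise ValueError(f"partition_count must be between 1 and {limit}")
    return {
        "GroupName": name,
        "Strategy": "partition",
        "PartitionCount": partition_count,
    }


def partition_placement(group_name, partition_number=None):
    """Placement value for RunInstances; omit the number to let EC2 choose."""
    placement = {"GroupName": group_name}
    if partition_number is not None:
        placement["PartitionNumber"] = partition_number
    return placement


def round_robin_partitions(instance_count, partition_count):
    """Assign each instance an explicit partition (numbering from 1 is assumed)."""
    return [(i % partition_count) + 1 for i in range(instance_count)]
```

Because EC2 does not guarantee an even distribution on its own, one option is to pin each launch to a partition explicitly: `round_robin_partitions(5, 3)` yields `[1, 2, 3, 1, 2]`, and each number can be passed to `partition_placement` for the corresponding launch.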

3. Spread placement group

Instances in the same partition of a partition placement group can still share a rack and its power source, which means a power failure in that rack could bring down all the instances on it. A business whose application relies on a small number of critical instances will want to avoid this risk of simultaneous failure. A spread placement group places each instance on its own rack, with its own network and power source. Because every instance lands on distinct hardware, spread placement groups are also suitable when mixing instance types or launching instances over time.

The number of running instances in a spread placement group cannot exceed seven per Availability Zone; however, the group can span multiple AZs in a Region. As with the partition placement group, Capacity Reservations do not reserve capacity in a spread placement group. Unlike the partition placement group, the spread placement group does not support Dedicated Instances.

- Purpose: Ensures high availability by spreading instances across underlying hardware in a way that no two instances in the group share the same hardware.

- Best For: Applications that require isolation for fault tolerance and high availability, such as critical microservices or a small number of instances that need to be highly available.

- Characteristics:

- Isolation of Instances: Instances are placed on different hardware, which reduces the risk of simultaneous hardware failure.

- Instance Limit: A spread placement group can span multiple Availability Zones, but it supports a maximum of seven running instances per Availability Zone.

- Fault Tolerance over Latency: Unlike cluster placement groups, spread placement groups prioritize fault tolerance over low latency.
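The seven-instances-per-AZ limit makes capacity planning for a spread placement group simple arithmetic. The sketch below, with helper names of our own and hypothetical AZ names in the usage note, computes how many Availability Zones a given instance count needs and distributes the instances across a list of AZs without exceeding the limit.

```python
import math

MAX_SPREAD_INSTANCES_PER_AZ = 7  # limit stated in this tutorial


def min_azs_needed(instance_count):
    """Smallest number of AZs that can hold instance_count running instances."""
    return math.ceil(instance_count / MAX_SPREAD_INSTANCES_PER_AZ)


def plan_spread(instance_count, azs):
    """Distribute instances across AZs in round-robin order.

    Raises ValueError when the AZs cannot hold that many instances
    within the per-AZ limit of the spread placement group.
    """
    capacity = len(azs) * MAX_SPREAD_INSTANCES_PER_AZ
    if instance_count > capacity:
        raise ValueError(
            f"{instance_count} instances exceed the capacity of {capacity}"
        )
    plan = {az: 0 for az in azs}
    for i in range(instance_count):
        plan[azs[i % len(azs)]] += 1
    return plan
```

For instance, 15 critical instances need at least `min_azs_needed(15) == 3` Availability Zones, while `plan_spread(10, ["us-east-1a", "us-east-1b"])` places five instances in each of the two zones.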

Key Benefits of Placement Groups

- Optimized Network Performance: Cluster placement groups allow low-latency, high-throughput networking, which is essential for applications with demanding network performance needs.

- Fault Isolation: Spread and partition placement groups help isolate faults by distributing instances across different hardware, reducing the risk of correlated failures.

- Scalability: Partition placement groups let large distributed deployments grow while keeping clear fault boundaries, and spread and partition groups can span multiple Availability Zones, which helps you meet specific SLAs.

Choosing the Right Placement Group

- Cluster: Best for tightly-coupled, latency-sensitive applications with high throughput needs in a single AZ.

- Spread: Ideal for critical applications where individual instances need high availability and must be isolated to avoid hardware failure impacts.

- Partition: Suitable for distributed applications that handle large data sets, allowing workloads to continue operating even if one partition fails.
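The guidance above can be condensed into a rough rule of thumb. The following Python sketch is only one possible simplification, not an official AWS decision procedure: the function name, its parameters, and the order of the checks are our own assumptions, and real workloads should weigh more factors than these three inputs.

```python
def suggest_strategy(tightly_coupled, instance_count, az_count=1):
    """Suggest a placement strategy from a few coarse workload traits.

    tightly_coupled: instances need low-latency, high-throughput links
    instance_count:  number of instances the application runs
    az_count:        number of Availability Zones available to the group
    """
    if tightly_coupled:
        # Latency-sensitive workloads favor packing instances in one AZ.
        return "cluster"
    if instance_count <= 7 * az_count:
        # Few critical instances fit within the spread limit of 7 per AZ.
        return "spread"
    # Larger distributed workloads get fault isolation per partition.
    return "partition"
```

With these assumptions, an HPC job maps to `cluster`, five critical instances in one AZ map to `spread`, and a 60-node data store in three AZs maps to `partition`.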

Placement groups are valuable for optimizing network performance, availability, and scalability in EC2 deployments, and choosing the right one depends on the balance of performance and fault tolerance your application needs.

That’s all about the different types of EC2 placement groups offered by AWS. If you have any queries or feedback, please write to us at contact@waytoeasylearn.com. Enjoy learning, enjoy AWS tutorials!