Hadoop Introduction

Several technologies address the problem of Big Data storage and processing, including Apache Hadoop, Apache Spark, and Apache Flink.

Apache Hadoop

Hadoop is an open-source, scalable, and fault-tolerant framework written in Java. It is provided by the Apache Software Foundation to process and analyze very large volumes of data. The framework allows big data to be stored and processed in a distributed environment across clusters of computers using simple programming models. It has been used by companies such as Google, Facebook, Twitter, Yahoo, and LinkedIn.

Hadoop efficiently processes large volumes of data on a cluster of commodity hardware. It is not only a storage system but a platform for both large-scale data storage and processing. Much of the Hadoop code was contributed by Yahoo, IBM, Facebook, and Cloudera.

What is an Open Source Project?

An open source project is freely available, and its source code can be changed as required. If a certain piece of functionality does not meet your needs, you can modify it.

Hadoop provides an efficient framework for running jobs on multiple nodes of a cluster, where a cluster is a group of systems connected via a LAN. Because it works on multiple machines simultaneously, Apache Hadoop processes data in parallel. Hadoop was inspired by papers published by Google describing its MapReduce programming model and its file system, the Google File System (GFS). Hadoop was originally written for the Nutch search engine project, which Doug Cutting and his team were working on, but it very soon became a top-level project due to its huge popularity.

Hadoop is an open source framework written in Java, but this does not mean you can code only in Java. You can also write jobs in C, C++, Perl, Python, Ruby, etc. Any of these languages works, but Java is preferable because it gives you lower-level control of the code. Hadoop is designed to process huge volumes of data efficiently on a cluster of commodity hardware. Commodity hardware means low-end, inexpensive machines, so Hadoop is very economical. Hadoop can be set up on a single machine, but it shows its real power with a cluster of machines. A cluster can be scaled to thousands of nodes on the fly, i.e., without any downtime, so no system needs to be taken down to add more machines to the cluster.
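Jobs in languages other than Java are commonly run through Hadoop Streaming, which feeds records to a script on standard input and reads tab-separated key/value lines from its standard output. The following is a minimal sketch of that style for a word count, simulated locally. The function names `mapper` and `reducer` and the sample lines are illustrative assumptions, not part of any Hadoop API.

```python
from itertools import groupby


def mapper(lines):
    """Emit one 'word<TAB>1' line per word, as a streaming mapper would print."""
    for line in lines:
        for word in line.split():
            yield f"{word.lower()}\t1"


def reducer(sorted_lines):
    """Sum the counts per word; the input must already be sorted by key."""
    pairs = (line.split("\t") for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda pair: pair[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


# Local simulation of map -> sort -> reduce. In a real streaming job the
# mapper and reducer would be separate scripts reading from sys.stdin.
sample = ["Hadoop stores data", "Hadoop processes data"]  # made-up input
result = list(reducer(sorted(mapper(sample))))
print(result)
```

The `sorted` call stands in for the sort that the framework performs between the map and reduce stages.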

Why Hadoop?

Apache Hadoop is not only a storage system but a platform for both data storage and processing. It is scalable (more nodes can be added on the fly), fault tolerant (even if a node goes down, its data is processed by another node), and open source (its source code can be changed as required).

The following characteristics and features make Hadoop a unique platform:

1. Open-source

Apache Hadoop is an open source project, which means its code can be modified according to business requirements.

2. Distributed Processing

In HDFS, all of these features are achieved via distributed storage and replication. Data is divided into blocks, and the blocks are stored in a distributed manner across the nodes of the HDFS cluster. Replicas of every block are then created and stored on other nodes in the cluster. So, if a single machine in the cluster crashes, the data can still be accessed from the other nodes that hold its replicas.
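The splitting and replication described above can be sketched in a few lines of Python. This is a toy model under stated assumptions: the round-robin placement, the node names, and the tiny block size are all hypothetical, and real HDFS uses its own placement policy.

```python
def split_into_blocks(data, block_size):
    """Cut a byte string into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks, nodes, replication):
    """Assign each block to `replication` distinct nodes, round-robin (an assumption)."""
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


# Toy sizes for readability; real HDFS blocks are tens of megabytes.
blocks = split_into_blocks(b"abcdefghij", block_size=4)
placement = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"], replication=3)

for block_id, holders in placement.items():
    print(f"block {block_id} ({blocks[block_id]!r}) -> {holders}")
```

Because every block lives on three different nodes in this sketch, losing any single node still leaves two copies of each block.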

3. Fault Tolerance

Issues in legacy systems

In legacy systems like an RDBMS, all read and write operations performed by the user were done on a single machine. If that machine became unavailable due to conditions such as machine failure, a RAM crash, hard-disk failure, or a power outage, users had to wait until the issue was manually corrected and could not access their data until the machine recovered. Also, legacy systems can store data only in the range of GBs, so increasing storage capacity means buying a new server machine. Storing a huge amount of data therefore requires buying many server machines, which becomes very expensive.

Fault tolerance achieved in Hadoop

Fault tolerance is the property that enables a system to continue operating properly when some of its components fail. In HDFS, it refers to how well the system keeps working under unfavorable conditions and how it handles them. HDFS is highly fault tolerant: data is divided into blocks, and multiple copies of each block are created on different machines in the cluster (the number of replicas is configurable). So if any machine in the cluster goes down, a client can still access its data from another machine that holds a copy of the same blocks. HDFS also maintains the replication factor by placing a replica of each block on another rack. Hence, if a machine suddenly fails, a user can access the data from slaves in another rack.

One simple Example

Suppose there is a user file named FILE. FILE is divided into blocks, say B1, B2, and B3, and sent to the master. The master sends these blocks to the slaves S1, S2, and S3. These slaves then replicate the blocks to other slaves in the cluster, say S4, S5, and S6, so multiple copies of each block exist. Say S1 contains B1 and B2, S2 contains B2 and B3, S3 contains B3 and B1, S4 contains B2 and B3, S5 contains B3 and B1, and S6 contains B1 and B2. Now suppose slave S4 crashes, so its copies of B2 and B3 become unavailable. This is not a problem, because B2 and B3 can still be read from another slave such as S2. Even in unfavorable conditions the data is not lost, which is why HDFS is highly fault tolerant.
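The example can be checked mechanically. The sketch below encodes the block layout exactly as given in the text and lists which slaves still hold each block after S4 fails; the helper name `holders` is an illustrative assumption.

```python
# Block layout taken from the example: slave -> blocks it stores.
placement = {
    "S1": {"B1", "B2"}, "S2": {"B2", "B3"}, "S3": {"B3", "B1"},
    "S4": {"B2", "B3"}, "S5": {"B3", "B1"}, "S6": {"B1", "B2"},
}


def holders(block, placement, failed=()):
    """Return the slaves that store `block` and have not failed."""
    return sorted(
        node for node, blocks in placement.items()
        if block in blocks and node not in failed
    )


# Simulate the crash of S4 and see where each block can still be read.
after_failure = {b: holders(b, placement, failed={"S4"}) for b in ("B1", "B2", "B3")}
print(after_failure)
```

Every block keeps at least three live copies after the crash, so no data is lost.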

4. Data Reliability

HDFS is a distributed file system that provides reliable data storage on a cluster of nodes and can store data in the range of hundreds of petabytes. HDFS divides the data into blocks, stores these blocks on nodes in the cluster, and creates replicas of every block on other nodes, which provides fault tolerance. If a node containing data goes down, a user can still access that data from the other nodes in the cluster that hold a copy. By default, HDFS keeps 3 copies of each block. Data therefore remains quickly available and users do not face data loss, which makes HDFS highly reliable.

5. High Availability

Issues in legacy systems

- Data becomes unavailable when a machine crashes.

- Users have to wait a long time to access their data, sometimes until the website comes back up.

- Because data is unavailable, the completion of major projects at organizations is delayed for a long time, which puts companies in critical situations.

- Limited features and functionalities.

High Availability achieved in Hadoop

HDFS is a highly available file system. Data is replicated among the nodes of the HDFS cluster by creating replicas of the blocks on other slaves. Whenever users want to access their data, they can read it from the nearest node in the cluster that holds the required blocks. During unfavorable situations such as a node failure, users can still access their data from other nodes, because duplicate copies of the blocks exist elsewhere in the HDFS cluster.

One simple Example

HDFS provides high availability of data. When a user sends a data access request to the NameNode, the NameNode looks up all the nodes on which that data is available and gives the user access from the node where the data can be reached most quickly. If the NameNode finds one of those nodes to be dead, it redirects the user, without the user's knowledge, to another node that holds the same data. The data is made available without interruption, so it remains highly available even when a node fails, and applications are not affected by the failure of any individual node.
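The redirect behavior can be pictured with a small lookup function. This is only a sketch of the idea, assuming the replica holders are listed nearest first; the function name `pick_datanode` and the node names are hypothetical and do not correspond to real HDFS classes.

```python
def pick_datanode(block_locations, live_nodes):
    """Return the first listed replica holder that is alive, or None if all are down."""
    for node in block_locations:
        if node in live_nodes:
            return node
    return None


locations = ["dn1", "dn2", "dn3"]  # hypothetical replica holders, nearest first

choice_all_up = pick_datanode(locations, live_nodes={"dn1", "dn2", "dn3"})
choice_dn1_down = pick_datanode(locations, live_nodes={"dn2", "dn3"})
choice_all_down = pick_datanode(locations, live_nodes=set())

print(choice_all_up, choice_dn1_down, choice_all_down)
```

The caller sees a node name in both of the first two cases and never needs to know that `dn1` was skipped.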

6. Scalability

Because HDFS stores data on multiple nodes in the cluster, the cluster can be scaled when requirements increase. There are two scalability mechanisms: vertical scalability, which adds more resources (CPU, memory, disk) to the existing nodes of the cluster, and horizontal scalability, which adds more machines to the cluster. The horizontal way is preferred, as the cluster can be scaled from tens of nodes to hundreds of nodes on the fly without any downtime.

7. Economic

Apache Hadoop is not very expensive, as it runs on a cluster of commodity hardware and needs no specialized machines. Hadoop also provides large cost savings because it is very easy to add more nodes on the fly. If requirements increase, nodes can be added without any downtime and without much pre-planning.

8. Easy to use

The client does not need to deal with distributed computing; the framework takes care of everything. So, it is easy to use.

9. Data Locality

Hadoop works on the data locality principle, which states: move computation to the data instead of data to the computation. When a client submits a MapReduce algorithm, the algorithm is moved to the data in the cluster, rather than the data being brought to the location where the algorithm was submitted and processed there.
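A scheduler that follows this principle might look like the sketch below. It is a simplified illustration, not Hadoop's scheduler: the function `assign_task`, the slot counts, and the node names are assumptions made for the example.

```python
def assign_task(block_holders, free_slots):
    """Prefer a node that already stores the block; otherwise use any free node."""
    for node in block_holders:
        if free_slots.get(node, 0) > 0:
            return node, "data-local"
    for node, slots in free_slots.items():
        if slots > 0:
            return node, "remote"
    return None, "no capacity"


holders_of_block = ["node1", "node2"]  # hypothetical nodes storing the block

local_choice = assign_task(holders_of_block, {"node1": 0, "node2": 1, "node3": 2})
remote_choice = assign_task(holders_of_block, {"node1": 0, "node2": 0, "node3": 2})

print(local_choice, remote_choice)
```

Only when no node holding the block has a free slot does the task run elsewhere, which is the case where the block has to travel over the network.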

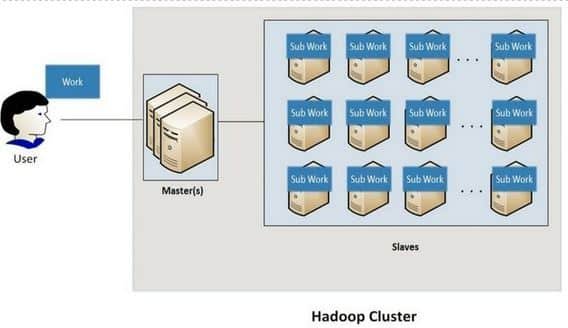

Basic Hadoop Architecture

Hadoop works in a master-slave fashion. As the names suggest, the master does not do the processing itself; it assigns work to the slaves and gets the work done by them. Usually there are a few masters (2 to 3) and hundreds or thousands of slaves. All the slave machines work independently.

Why multiple masters?

Multiple masters exist for failover. If one master goes down, another master takes over, so the Hadoop cluster remains available even when a master is down (high availability).
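The failover idea can be illustrated with a toy active/standby pair. This sketch only models who is in charge after a failure; the class `MasterPair` and the master names are hypothetical, and real Hadoop failover involves considerably more machinery.

```python
class MasterPair:
    """Toy model of one active master backed by one standby."""

    def __init__(self, active, standby):
        self.active = active
        self.standby = standby

    def fail(self, node):
        """Record the failure of `node` and return whoever is active afterwards."""
        if node == self.active:
            # The standby is promoted; there is no standby left behind it.
            self.active, self.standby = self.standby, None
        elif node == self.standby:
            self.standby = None
        return self.active

    @property
    def available(self):
        return self.active is not None


pair = MasterPair(active="master1", standby="master2")
after_first_failure = pair.fail("master1")
still_available = pair.available
after_second_failure = pair.fail("master2")

print(after_first_failure, still_available, after_second_failure)
```

The cluster stays available after the first failure and becomes unavailable only when both masters are gone.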

Introduction to HDFS

- HDFS is widely regarded as a highly reliable storage layer.

- Distributed file system designed to run on commodity hardware.

- Highly fault tolerant, distributed, reliable, scalable file system for data storage

- Developed to handle huge volumes of data (large file sizes, GBs to TBs)

- A file is split up into blocks (default 64 MB in Hadoop 1.x, 128 MB in Hadoop 2.x) and stored distributed across multiple machines

- Stores multiple copies of data on different nodes.

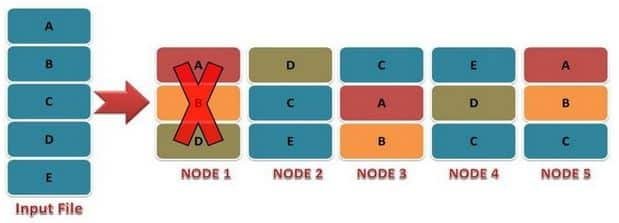

Data Storage in HDFS

Whenever a file is written to HDFS, it is broken into smaller pieces of data known as blocks. HDFS has a default block size of 64 MB (Hadoop 1.x) or 128 MB (Hadoop 2.x), which can be changed as required. The blocks are stored on different nodes of the cluster in a distributed manner, which lets MapReduce process the data in parallel across the cluster.

Multiple copies of each block are stored on different nodes across the cluster. This is data replication. By default, HDFS has a replication factor of 3, which provides fault tolerance, reliability, and high availability.

A large file is split into n small blocks. These blocks are stored on different nodes in the cluster in a distributed manner. Each block is replicated and stored across different nodes in the cluster.
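As a worked example of the numbers above, the sketch below computes how many blocks a file occupies and how much raw storage it consumes with the default replication factor of 3. The 1 GB file size and the helper names are assumptions chosen for illustration.

```python
MB = 1024 * 1024


def block_count(file_size, block_size=128 * MB):
    """Number of blocks needed for a file (ceiling division)."""
    return -(-file_size // block_size)


def raw_storage(file_size, replication=3):
    """Bytes consumed across the cluster once every block is replicated."""
    return file_size * replication


file_size = 1024 * MB  # a 1 GB file, chosen only for illustration
blocks_128 = block_count(file_size)                     # 128 MB blocks
blocks_64 = block_count(file_size, block_size=64 * MB)  # 64 MB blocks
stored_mb = raw_storage(file_size) // MB

print(blocks_128, blocks_64, stored_mb)
```

A 1 GB file therefore needs 8 blocks at 128 MB or 16 blocks at 64 MB, and occupies 3072 MB of raw disk space across the cluster.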

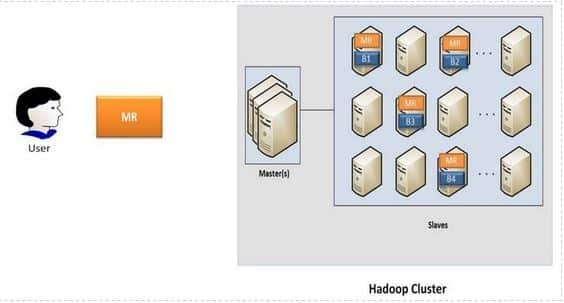

What is MapReduce?

- Programming model designed for processing large volumes of data in parallel by dividing the work into a set of independent tasks.

- Heart of Hadoop; it moves computation close to the data. This programming paradigm allows for massive scalability across hundreds or thousands of servers in a Hadoop cluster.
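The programming model can be sketched in plain Python as three stages: map, shuffle, and reduce. This is an in-memory illustration only, assuming made-up `year,temperature` records split into two independent input chunks; it does not use the Hadoop API.

```python
from collections import defaultdict


def map_phase(split):
    """Map task: turn each 'year,temperature' record into a (year, temperature) pair."""
    for record in split:
        year, temperature = record.split(",")
        yield year, int(temperature)


def shuffle(pairs):
    """Group all values by key, as happens between the map and reduce stages."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce task: keep the maximum temperature seen for each year."""
    return {year: max(temperatures) for year, temperatures in grouped.items()}


# Two input splits; each could be mapped on a different machine independently.
splits = [["2019,31", "2020,28"], ["2019,35", "2020,25"]]  # made-up sample data
mapped = [pair for split in splits for pair in map_phase(split)]
result = reduce_phase(shuffle(mapped))
print(result)
```

Because each split is mapped without reference to any other split, the map tasks are independent and can run in parallel.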

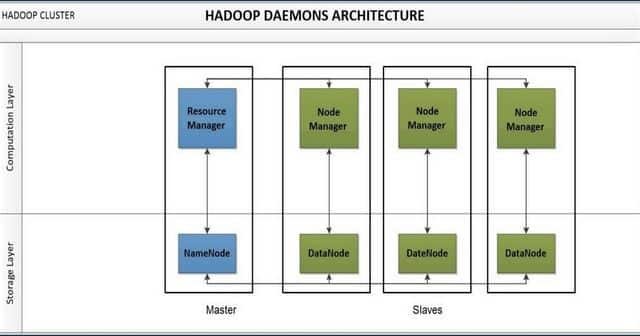

What is YARN?

YARN (Yet Another Resource Negotiator) is the resource management layer of Hadoop. In a multi-node cluster, it becomes very complex to manage, allocate, and release resources (CPU, memory, disk). Hadoop YARN manages these resources efficiently and allocates them on request from any application.

On the master node, the ResourceManager daemon runs for YARN, and on every slave node a NodeManager daemon runs.
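The allocate-on-request idea can be sketched with a toy resource tracker. The class `ToyResourceManager`, the first-fit policy, and the memory figures are assumptions for illustration; this is not YARN's actual API or scheduling algorithm.

```python
class ToyResourceManager:
    """Tracks free memory per NodeManager and hands it out on request."""

    def __init__(self, node_memory_mb):
        self.free_mb = dict(node_memory_mb)

    def allocate(self, memory_mb):
        """Reserve memory on the first node that can fit the request."""
        for node, free in self.free_mb.items():
            if free >= memory_mb:
                self.free_mb[node] = free - memory_mb
                return node
        return None  # no node has enough free memory right now

    def release(self, node, memory_mb):
        """Return memory to a node when an application finishes."""
        self.free_mb[node] += memory_mb


rm = ToyResourceManager({"nm1": 4096, "nm2": 2048})
first = rm.allocate(3072)    # fits on nm1
second = rm.allocate(2048)   # nm1 has 1024 MB left, so nm2 is used
third = rm.allocate(2048)    # nothing fits until memory is released
rm.release(first, 3072)
fourth = rm.allocate(2048)   # fits on nm1 again

print(first, second, third, fourth)
```

The third request is refused rather than over-committing a node, and succeeds once the first application releases its memory.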

How Hadoop works

Step 1: Input data is broken into blocks of size 64 MB or 128 MB, and the blocks are then moved to different nodes.

Step 2: Once all the blocks of the data are stored on DataNodes, the user can process the data.

Step 3: The master then schedules the program (submitted by the user) on individual nodes.

Step 4: Once all the nodes have processed the data, the output is written back to HDFS.
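The four steps can be traced end to end with a small simulation. This is a sketch under simplifying assumptions: blocks are tiny lists, nodes are dictionary keys, placement is round-robin, and the submitted program is Python's built-in `sum`. The function `run_job` is hypothetical.

```python
def run_job(records, block_size, nodes, task):
    # Step 1: break the input into blocks and spread them over the nodes.
    blocks = [records[i:i + block_size] for i in range(0, len(records), block_size)]
    stored = {node: [] for node in nodes}
    for index, block in enumerate(blocks):
        stored[nodes[index % len(nodes)]].append(block)

    # Steps 2 and 3: once every block is stored, the master schedules the
    # user's task on each node, which processes only its own blocks.
    partial_results = {node: [task(block) for block in stored[node]] for node in nodes}

    # Step 4: the per-node outputs are collected as the job output.
    return [result for node in nodes for result in partial_results[node]]


records = list(range(1, 11))  # made-up input: the numbers 1..10
outputs = run_job(records, block_size=4, nodes=["node1", "node2"], task=sum)
total = sum(outputs)

print(outputs, total)
```

Each node produces partial sums for its own blocks, and combining them gives the same total as summing the input directly.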

Hadoop Daemons

Daemons are processes that run in the background. Hadoop mainly runs 4 daemons.

1. NameNode – It runs on the master node for HDFS.

2. DataNode – It runs on the slave nodes for HDFS.

3. ResourceManager – It runs on the master node for YARN.

4. NodeManager – It runs on the slave nodes for YARN.

These 4 daemons must run for Hadoop to be functional. Apart from these, there can be a Secondary NameNode, standby NameNode, Job HistoryServer, etc.